FWIW, it has helped me to add “write the most concise and idiomatic elixir code possible” to any prompt (or to the project’s general instructions). Especially when I was learning Elixir, I often wrote code based on old habits (too many if statements, etc.), and the AI-generated code really accelerated my learning. Asking it to rewrite my own “bad” code in more idiomatic Elixir was also often enlightening.
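As a rough illustration of the kind of rewrite that instruction tends to encourage, here is a hypothetical before/after. The `Shipping` module, its fields, and the prices are invented for this example. The first function is the `if`-heavy version written from old habits; the second is an equivalent multi-clause function using pattern matching and guards.

```elixir
defmodule Shipping do
  # Hypothetical "old habits" version: nested ifs on map fields.
  def cost_imperative(order) do
    if order.country == "US" do
      if order.weight > 10 do
        20
      else
        10
      end
    else
      if order.express do
        50
      else
        30
      end
    end
  end

  # More idiomatic: one clause per case, using pattern matching and a guard.
  # Clauses are tried top to bottom, so the most specific cases come first.
  def cost(%{country: "US", weight: w}) when w > 10, do: 20
  def cost(%{country: "US"}), do: 10
  def cost(%{express: true}), do: 50
  def cost(_order), do: 30
end
```

Both functions return the same results for maps that carry all three keys; the multi-clause version lets each case be read on its own line instead of being traced through nested branches.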

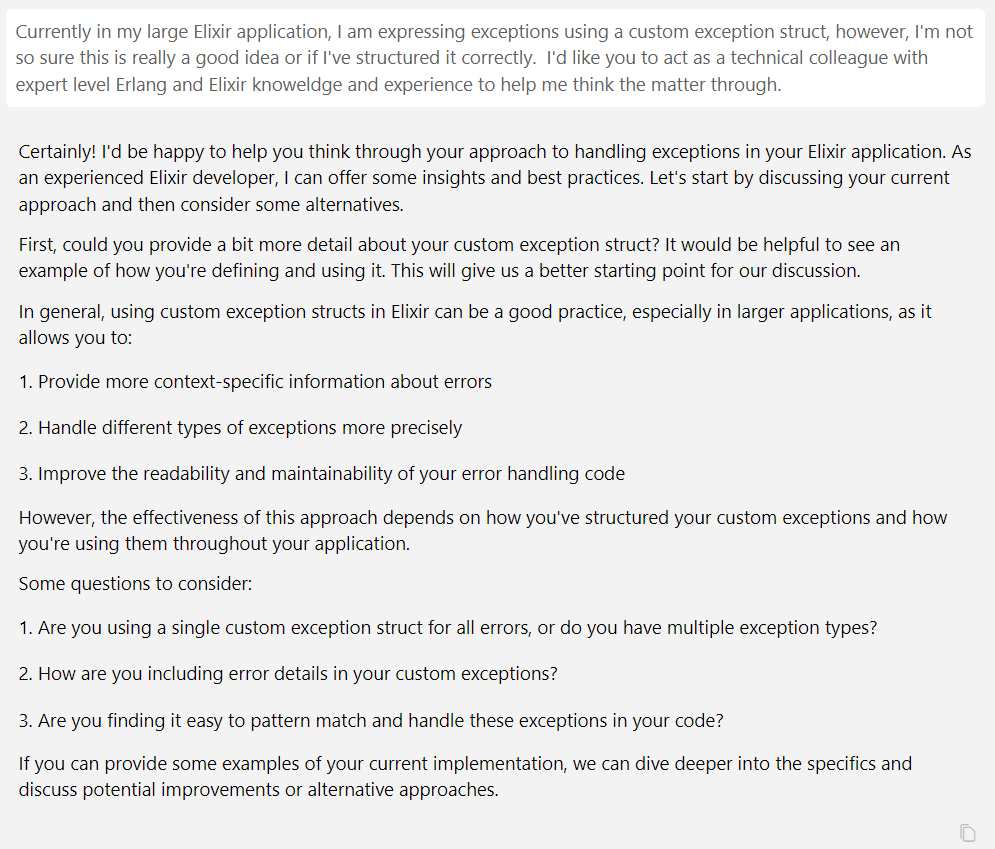

Getting back to your original question about how well LLMs do with Elixir, what follows might be a useful example. In this case I decided to try something I said I never do: use the LLM as a brainstorming aid. I’m doing some broad refactoring across my codebase, and some questions (admittedly of a bike-shedding quality) are vexing me a bit. It’s a short interaction, but I think it gives some hints about how well these tools work with Elixir. I’m posting it as screenshots because that makes it easier to see who’s talking and preserves some graphical elements that show the UX of the interaction. (My apologies if this looks like crap on your device.)

My prompts are in the white areas immediately after the “First/Second Interaction” headings. The LLM responses are in gray-backed areas when using a speaking voice and in white-backed areas when showing code examples.

First Interaction:

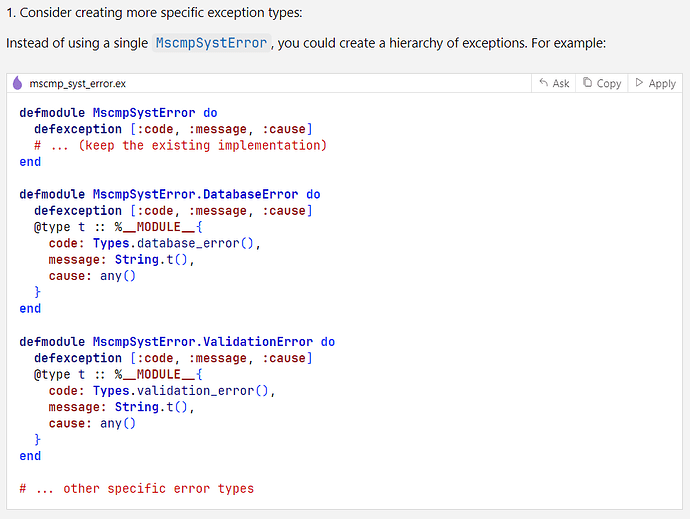

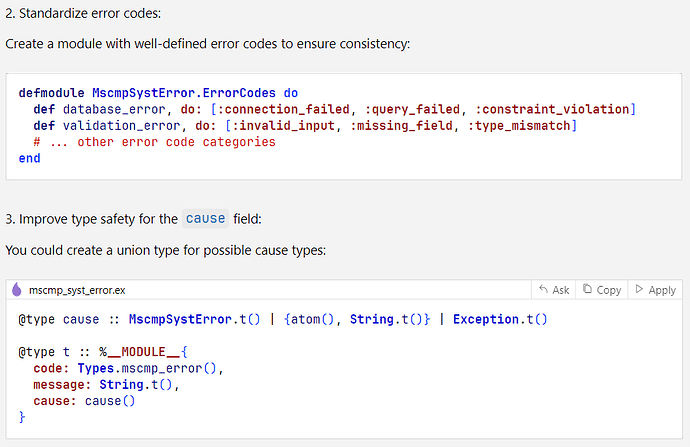

Second Interaction (referenced code: mscmp_syst_error.ex):

From a basic Elixir language standpoint, it seems competent. I don’t immediately see any basic problems such as syntax errors. It handles modules, exceptions, typespecs, the usual name/arity notation for functions (e.g. get_root_cause/1), and other constructs and conventions correctly. The code I gave it for context appeared to produce relevant results in the subsequent responses.

I think it missed the more conceptual/architectural level of interaction I was looking for; that could well be an issue with my prompting, though I do think it would have gone to implementation details quickly either way. I also think there are a couple of stray things in its suggestions. For example, the MscmpSystError.ErrorCodes module doesn’t really appear to have any point the way it’s expressed. I think defining those recommended lists as types would be better. The one place such an MscmpSystError.ErrorCodes module could pay off, validating the code values in the suggested “new” functions when a new exception is created, isn’t part of the recommended solution. Finally, I have to admit that seeing the closing paragraph made me smile a bit.
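A minimal sketch of the alternative described above (declaring the allowed codes as a type and validating them in a `new` function), assuming a hypothetical `ExampleError` exception with an invented set of codes. This is not the actual `MscmpSystError` code or the LLM’s suggestion, just one way the idea could look.

```elixir
defmodule ExampleError do
  # Hypothetical codes: the type documents them for Dialyzer and @spec readers,
  # and the list below is used for runtime validation. Keep the two in sync.
  @type code :: :invalid_data | :not_found | :database_error
  @codes [:invalid_data, :not_found, :database_error]

  defexception [:code, :message]

  @type t :: %__MODULE__{code: code(), message: String.t()}

  # Validates the code when the exception is created, rather than
  # relying on a separate constants module that nothing checks against.
  @spec new(code(), String.t()) :: t()
  def new(code, message) when code in @codes do
    %__MODULE__{code: code, message: message}
  end

  def new(code, _message) do
    raise ArgumentError, "unknown error code: #{inspect(code)}"
  end
end
```

Usage would look like `raise ExampleError.new(:not_found, "record missing")`; an unknown code fails fast with an `ArgumentError` at the point of creation.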

So I’ll reiterate, it has this junior quality about it, but it’s not giving me wildly stupid answers either.

LLMs write super verbose code that feels like Java in an Elixir trench coat whenever I try to use them. Maybe I don’t prompt them well enough?

Most of my LLM success comes from making HTML templates in Tailwind.

Most likely, but then the relevant question becomes: “Do I want to keep honing my programmer skills or should I re-specialize into becoming a better prompter?”

There are only so many hours per day after all.

This is one of my frustrations with the effectively “zero-shot” prompting you get if you don’t chat with the LLM. There doesn’t seem to be a magic prompt that will produce high-quality code all the time. On relatively few occasions I got really good code right off the bat, but more often it takes several iterations to get code that I find acceptable to commit. This longer process looks less “magical” than some of the (probably cherry-picked) example videos that show off entire apps being built with one voice command, but I’ve found it quite reliable.

I would be curious to see what happened if you provided the entire (or large parts of the) codebase to it. I’ve found the prompt quality matters much less when it has full context and I can iterate to improve the prompt.

If I remember correctly, I added module docs in EEP 48 format to the prompt, and that made a big difference.

I was trying something with the Erlang :zlib module and with the docs it actually managed to use functions that existed and even used the right arguments. Most of the time.

But that was a few months ago; maybe I should give it another try.

If you haven’t worked with Claude 3 Opus or 3.5 Sonnet, they’re definitely worth a try. GPT-4o is somewhere in between. o1 is supposedly better, but I haven’t worked with it.

So were you extracting the EEP 48 metadata from the .beam file and passing it in as text? If it provides enough information for an ordinary human programmer to write code against it, Claude 3.5 Sonnet and other models at that level probably can too.
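The thread doesn’t say exactly how the docs were extracted. One possible approach, sketched here as an assumption rather than the commenter’s method, uses `Code.fetch_docs/1` (Elixir 1.7+), which reads the EEP 48 “Docs” chunk from a module’s .beam file. The `DocsForPrompt` module name and the Markdown-ish text layout are hypothetical.

```elixir
defmodule DocsForPrompt do
  # Hypothetical helper: turn a module's EEP 48 docs into plain text
  # that can be pasted into an LLM prompt.
  def to_text(module) do
    case Code.fetch_docs(module) do
      {:docs_v1, _anno, _lang, _format, module_doc, _meta, docs} ->
        header = "# #{inspect(module)}\n\n" <> doc_text(module_doc)

        # Keep only public, non-hidden functions and macros.
        entries =
          for {{kind, name, arity}, _anno, signature, doc, _meta} <- docs,
              kind in [:function, :macro],
              doc != :hidden do
            "## #{Enum.join(signature, " ")} (#{name}/#{arity})\n\n" <> doc_text(doc)
          end

        {:ok, Enum.join([header | entries], "\n\n")}

      {:error, reason} ->
        {:error, reason}
    end
  end

  # Docs are %{"en" => content}, :none, or :hidden. Content that is not a
  # binary (some Erlang doc formats) falls back to inspect/1.
  defp doc_text(%{"en" => text}) when is_binary(text), do: text
  defp doc_text(%{"en" => other}), do: inspect(other)
  defp doc_text(_), do: ""
end
```

For example, `DocsForPrompt.to_text(:zlib)` should also work for Erlang modules when OTP’s doc chunks are installed, though depending on the OTP version the Erlang docs may not be Markdown, in which case this sketch just inspects them. Large modules can produce a lot of text, so context size still matters.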

My difficulty with documentation so far has been size. You really want your entire code + message history to fit in the context window, and some docs can be quite large.

For example, I experimented with the OpenAI OpenAPI spec to see if I could use an LLM to generate the openai_ex library from it. It turned out to be too large for the context window (as I recall, the spec alone was too large). I toyed with extracting one API endpoint at a time from the full spec and generating the corresponding code, but I couldn’t quite manage a clean extraction with all the information, even with LLM assistance. I eventually gave up because it was too much work for such a simple API. It might be worth trying in a higher-value situation.

I feel I should add a caveat to my generally positive attitude about LLMs.

Even now LLMs routinely make mistakes. Sometimes they hallucinate, sometimes they write buggy code, and sometimes (not often) they misunderstand what you asked for. If you cannot read and understand every line of the code they produce, the code is in theory (as well as practice) unreliable and should not be committed.

Fortunately, they are quite good at explaining their code and very quick to admit and correct mistakes that are pointed out (and quite apologetic too). It’s one of many reasons why the full interactive chat experience is the one that I find truly valuable for coding.


I’m pretty good at Elixir (IMO), so when I turn to an LLM for Elixir stuff, it usually fails miserably. It couldn’t translate a recursive CTE to Ecto: https://elixirforum.com/t/help-translating-recursive-cte-query-to-ecto and in my experience it generally fails pretty hard at writing macros (beyond simple `use` macros).

That said, I use LLMs pretty much every day. Since I’m only intermediate with Go, I find them extremely useful for writing little snippets or explaining how some libraries work, which they often do better than I can by just reading the library docs. Or things like “show me how to do multiple producers, single consumer using goroutines, and I want the main thread to be the single consumer.”

Also, I find LLMs fantastic for brainstorming about problems and suggesting datastores or libraries to solve them.

Lastly, I turn to LLMs for documentation before going to official documentation pages. For example, for Postgres, Elasticsearch, Cassandra, or Yugabyte, I ask an LLM before going to the official docs. I have enough experience to intuitively tell when the LLM is fibbing, and usually all it takes is “are you sure? That doesn’t seem to make sense” or linking to an official doc page so it can read the docs and correct itself.

I use LLMs so frequently that I can’t imagine working without them now. It would be like telling me “You can no longer Google or use SO.”

I’ve had excellent results with Cursor on some personal projects of mine; my problem, however, is that I don’t want to give up Zed for Cursor. I need the two technologies to merge!

I haven’t used either Zed or Cursor, but isn’t Zed working on that? See “Zed AI - Code together with LLMs”.

There’s a full video showcasing it on the main page: https://zed.dev/

Have you tried Claude 3.5 Sonnet?

Wow! That’s a really well thought through design for the chat UX. Much better than any other IDE integration I’ve seen so far. A great combination of convenience and control!

Thanks for the link @pawoc50825 and for bringing it up @barkerja. I hadn’t heard of zed before.