Hi, I need some help with text processing

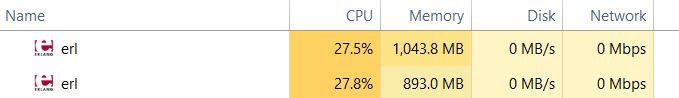

I’m playing with the new Experimental.Flow and comparing Flow’s file processing time and memory consumption to a simple Eager solution. I have a ~34 MB TXT file made by concatenating 50 Project Gutenberg books. I’m using pretty straightforward code to read the file, split it into a list of words, then “clean” each word of non-word characters, downcase it, and finally build a map with the word count. The problem is that my Eager code takes about 70 s to process the 34 MB file, and the Windows process manager shows two ERL processes consuming more than 1 GB of memory at the peak! Can you check my code? Maybe I’m doing something fundamentally wrong.

    def process_eager(path_to_file) do
      path_to_file
      |> File.read!()
      |> String.split()
      |> Enum.reduce(%{}, &words_to_map/2)
    end

    defp words_to_map(word, map) do
      word
      |> String.replace(~r/\W/u, "")
      |> filter_map(map)
    end

    defp filter_map("", map), do: map

    defp filter_map(word, map) do
      word = String.downcase(word)
      Map.update(map, word, 1, &(&1 + 1))
    end

I was prepared for memory consumption of about 5 times the file size with Eager processing, but not 30 times!

Try removing Map.update(map, word, 1, &(&1 + 1)) and see how long it takes and how much memory it consumes. Remember maps are immutable, so every operation builds a new map. And even though those maps will share their key-values and part of the structure, there will be some shedding. Given you are performing millions of updates, that is going to put pressure on the CPU and memory until GC is performed.

My recommendation for those cases is to use ETS tables. There is a section in the Flow docs about such optimizations. Another reason for high CPU usage is the use of regexes, although I wouldn’t expect any of those to justify taking 70 seconds.
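To make the ETS suggestion concrete, here is a minimal sketch of the same word count using an ETS table as the accumulator. The module name `WordCountEts` and the table name are made up for illustration, and this is not the code from the Flow docs; it only assumes the same `~r/\W/u` cleaning and downcasing as the code in the question.

```elixir
defmodule WordCountEts do
  # Hypothetical module: counts words in an ETS table instead of
  # threading an immutable map through Enum.reduce/3.
  def count(text) when is_binary(text) do
    # A private table owned by the calling process.
    table = :ets.new(:word_counts, [:set, :private])

    text
    |> String.split()
    |> Enum.each(fn raw ->
      case raw |> String.replace(~r/\W/u, "") |> String.downcase() do
        # Skip tokens that contained no word characters at all.
        "" ->
          :ok

        word ->
          # Increment the counter at tuple position 2, inserting
          # {word, 0} first if the key is not in the table yet.
          :ets.update_counter(table, word, {2, 1}, {word, 0})
      end
    end)

    # Copy the result into a map once, then free the table.
    counts = table |> :ets.tab2list() |> Map.new()
    :ets.delete(table)
    counts
  end
end
```

Calling `WordCountEts.count(File.read!(path))` would mirror `process_eager/1`. The counters are updated in place inside the table, so no intermediate maps are built during the loop; whether that actually helps for a given file is something to measure rather than assume.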

Thanks @josevalim !

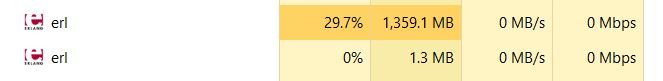

With Map.update... commented out, this code takes around 13 s to process the file and consumes about 1.35 GB in one ERL process at the peak, for a short time…
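For repeatable numbers instead of reading them off the process manager, something like the following sketch could be used. `Measure` is a hypothetical helper name; it relies only on `:timer.tc/1` and `:erlang.memory/1`. Note that it reports the change in total VM memory between start and finish, not the peak, so it will understate short-lived spikes like the one described above.

```elixir
defmodule Measure do
  # Hypothetical helper: times a zero-arity function and reports the
  # difference in total VM memory before and after the call.
  def run(fun) when is_function(fun, 0) do
    mem_before = :erlang.memory(:total)
    # :timer.tc/1 returns {elapsed_microseconds, result}.
    {micros, result} = :timer.tc(fun)
    mem_after = :erlang.memory(:total)

    %{
      seconds: micros / 1_000_000,
      memory_delta_bytes: mem_after - mem_before,
      result: result
    }
  end
end
```

For example, `Measure.run(fn -> process_eager(path) end)` can be run once with the `Map.update` line in place and once without it to compare the two variants.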

I will provide a GitHub link to my code and files later today, when I get home, if anyone is interested.

I will also look into how to replace Enum.map with ETS for the Eager and Stream solutions…

Here is the link to my project repo elixir_flow

You can find test TXTs of different sizes in the files dir (in 7zip archives). The Eager, Stream and Flow file processing code is in lib\flow.ex, and you start processing with mix run try_me.exs

I’ve used this repository as a supplementary project for my Kyiv Elixir Meetup 3.1 talk about Flow. The repository is now updated with the slides from my presentation!

Added an option to use ETS as the accumulator in the reducer step for the different implementations. So now you can compare Map and ETS speed and memory consumption on datasets of different sizes!
