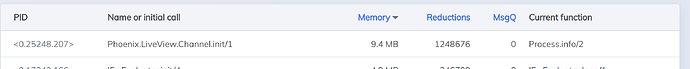

I have an application built with GenStage whose stages continuously make HTTP requests:

- a request to a camera to fetch a JPEG
- a POST request that uploads that JPEG to the cloud
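One common way to stop long-lived processes from pinning large binaries is to run each fetch-and-upload cycle in a short-lived process. The sketch below is hypothetical: `SnapshotPipeline.run_once/3` and its `fetch_fun`/`post_fun` arguments are placeholders for the real Finch calls made inside a stage, not code from this application. Binaries over 64 bytes are reference-counted and stored off-heap. Because the JPEG is referenced only by the task, its reference is dropped when the task exits, instead of staying on the stage's heap until the stage next garbage-collects.

```elixir
defmodule SnapshotPipeline do
  @moduledoc """
  Hypothetical sketch: run one fetch-then-post cycle inside a short-lived Task.
  `fetch_fun` and `post_fun` stand in for the real Finch GET/POST calls.
  """

  # The JPEG (a large, reference-counted binary) is only referenced by the task
  # process, so the reference disappears when the task exits rather than
  # lingering on the calling stage's heap until its next garbage collection.
  def run_once(fetch_fun, post_fun, timeout \\ 30_000)
      when is_function(fetch_fun, 0) and is_function(post_fun, 1) do
    Task.async(fn ->
      jpeg = fetch_fun.()
      # Return something small (e.g. a status); returning the JPEG itself
      # would hand a reference back to the caller.
      post_fun.(jpeg)
    end)
    |> Task.await(timeout)
  end
end
```

For example, with stand-ins for the two HTTP calls: `SnapshotPipeline.run_once(fn -> :binary.copy(<<0xFF>>, 50_000) end, fn jpeg -> {:ok, byte_size(jpeg)} end)`.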

All of these requests are made with Finch (which is built on Mint). In Phoenix LiveDashboard, the memory used by binaries is very large (see the screenshot above).

Is there any way to force these binaries to be freed? I am not sure whether this is a Finch issue or something else; I mainly want to get the binary memory back down. When I was using HTTPoison, binary memory never grew this large. Any help or pointers would be appreciated.
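Before trying to free binaries forcefully, it may help to see which processes are keeping them alive. A reference-counted binary is only freed once every process that references it has dropped that reference (through garbage collection or by exiting). The sketch below ranks processes by the total size of the binaries they reference, using the `:binary` item of `Process.info/2` (the Erlang docs note this item may change without notice). The `BinaryDiag` module and `top_binary_holders/1` are hypothetical names. The same binary can be referenced by several processes, so per-process totals can add up to more than `:erlang.memory(:binary)`.

```elixir
defmodule BinaryDiag do
  @moduledoc """
  Hypothetical sketch: rank processes by the size of the refc binaries they reference.
  """

  # Returns [{pid, total_bytes, binary_count}], largest total_bytes first.
  def top_binary_holders(n \\ 10) do
    Process.list()
    |> Enum.flat_map(fn pid ->
      case Process.info(pid, :binary) do
        # The process exited between Process.list/0 and Process.info/2.
        nil ->
          []

        {:binary, bins} ->
          # Each entry is {binary_id, byte_size, refcount}.
          total = Enum.reduce(bins, 0, fn {_id, size, _refc}, acc -> acc + size end)
          [{pid, total, length(bins)}]
      end
    end)
    |> Enum.sort_by(fn {_pid, total, _count} -> total end, :desc)
    |> Enum.take(n)
  end
end
```

Running `BinaryDiag.top_binary_holders(10)` on the node and looking up the returned pids (for example with `Process.info(pid, [:registered_name, :current_function])`) should point at the stages or pool processes holding the JPEGs.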

PS: Garbage-collecting every process with `Process.list() |> Enum.each(&:erlang.garbage_collect/1)` makes almost no difference to binary memory.

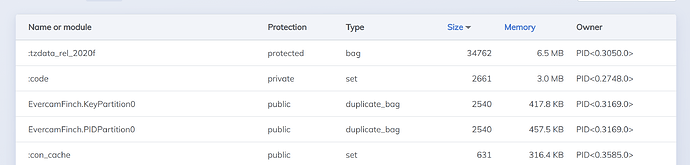

```
iex(evercam_media@127.0.0.1)1> :erlang.memory()
[
  total: 12432509744,
  processes: 278680520,
  processes_used: 277229600,
  system: 12153829224,
  atom: 2064777,
  atom_used: 2044302,
  binary: 11963568160,
  code: 52877562,
  ets: 108036616
]
iex(evercam_media@127.0.0.1)2> Process.list |> Enum.each(&:erlang.garbage_collect/1)
:ok
iex(evercam_media@127.0.0.1)3> :erlang.memory()
[
  total: 12041845272,
  processes: 78801624,
  processes_used: 77435768,
  system: 11963043648,
  atom: 2064777,
  atom_used: 2044302,
  binary: 11772272640,
  code: 52877562,
  ets: 108485960
]
iex(evercam_media@127.0.0.1)4>
```
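In the output above, the GC pass freed about 200 MB of process heap (`processes` dropped from 278,680,520 to 78,801,624 bytes) but only about 191 MB of binaries, roughly 1.6% of the 11.96 GB total. The `bin_leak/1` function in the recon library runs a more targeted version of the same experiment: it records how many binaries each process references, garbage-collects every process, then reports which processes dropped the most references. Below is a minimal plain-Elixir sketch of that idea, not recon's actual code; the `BinLeakCheck` module and `run/1` are hypothetical names, and it relies on the `:binary` item of `Process.info/2`.

```elixir
defmodule BinLeakCheck do
  @moduledoc """
  Hypothetical sketch of the idea behind recon's bin_leak/1, in plain Elixir.
  """

  # GC every process, then report the `n` processes whose count of referenced
  # refc binaries dropped the most, plus global binary memory before and after.
  def run(n \\ 10) do
    bytes_before = :erlang.memory(:binary)
    counts_before = binary_counts()

    # :erlang.garbage_collect/1 returns false for processes that have exited.
    Enum.each(Map.keys(counts_before), &:erlang.garbage_collect/1)

    counts_after = binary_counts()
    bytes_after = :erlang.memory(:binary)

    top_freed =
      counts_before
      |> Enum.flat_map(fn {pid, count} ->
        case Map.fetch(counts_after, pid) do
          {:ok, count_after} -> [{pid, count - count_after}]
          # The process exited between the two snapshots; skip it.
          :error -> []
        end
      end)
      |> Enum.sort_by(fn {_pid, freed} -> freed end, :desc)
      |> Enum.take(n)

    %{binary_bytes_before: bytes_before, binary_bytes_after: bytes_after, top_freed: top_freed}
  end

  # Map of pid => number of refc binaries it references. Process.info/2 returns
  # nil for dead processes, which the {:binary, bins} pattern filters out.
  defp binary_counts do
    for pid <- Process.list(),
        {:binary, bins} <- [Process.info(pid, :binary)],
        into: %{},
        do: {pid, length(bins)}
  end
end
```

If `binary_bytes_after` stays close to `binary_bytes_before` and no process frees many references, the remaining binaries are most likely still referenced by live processes (or ETS tables) rather than merely waiting for a GC.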