Thanks for putting together a detailed & well-presented proposal.

I’m happy with it for the most part, but I’d like to share a couple of aspects that felt a bit ‘off’ at first encounter, and I’d love to hear what others think:

-

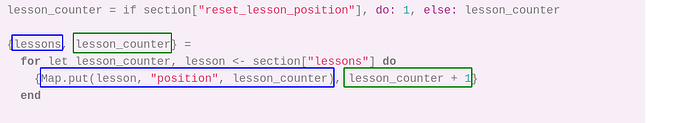

The implicit asymmetry between return value of single iteration and return value of whole comprehension as shown in screenshot below. The fact that first element of tuple accumulates via mapping while second element accumulates via reduction is not explicit and tripped me up

-

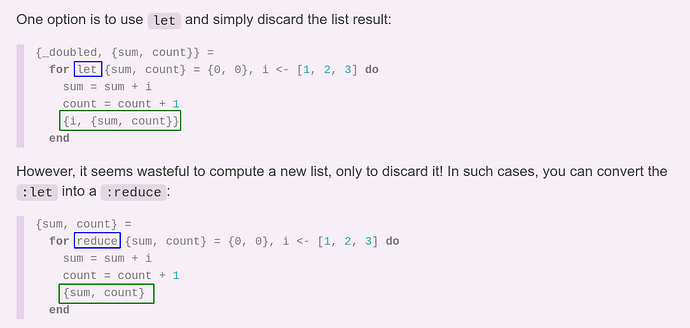

The case for

for reducedoesn’t seem very compelling. AFAIK the same functionality can be accomplished with current:reduceoption and it’s not clear to me what advantage the new syntax brings in this case?

- Initialization before the comprehension: as others previously pointed out, I can see this leading to some confusion. For example, seeing `for let lesson_counter, lesson <- section["lessons"] do` somewhere in the codebase without `lesson_counter`’s initialization co-located, when it’s a variable that will potentially be updated in each iteration.
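For comparison, here is a rough sketch of how the points above look in current Elixir, using only `Enum.map_reduce/3` and the existing `:reduce` option. The `lessons` list and the variable names are made up for illustration and are not taken from the proposal.

```elixir
# Hypothetical data, just for illustration.
lessons = ["Intro", "Setup", "Basics"]

# Map and reduce in one pass. The initial counter (1) sits right at the call
# site, and the callback returns {mapped_element, new_accumulator}.
{numbered, next_counter} =
  Enum.map_reduce(lessons, 1, fn lesson, counter ->
    {{counter, lesson}, counter + 1}
  end)

# numbered     == [{1, "Intro"}, {2, "Setup"}, {3, "Basics"}]
# next_counter == 4

# A pure reduction with the existing :reduce option of for.
total_length =
  for lesson <- lessons, reduce: 0 do
    acc -> acc + String.length(lesson)
  end

# total_length == 16
```

In both forms the initial value is co-located with the iteration. `Enum.map_reduce/3` has the same map-on-the-left, reduce-on-the-right asymmetry in its tuple, but the function name at least announces it.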

One of the great things about Elixir is its focus on explicitness, and I’m a bit concerned we would be giving up some ground here with some aspects of the proposed solution, for example the use of a tuple as the return value, with implicit reliance on position within the tuple for things like error messages. The examples used reductions over simple values like integers, but would `ComprehensionError`, for instance, still work with values like nested tuples that could end up looking like valid output?
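To illustrate the kind of ambiguity I mean, here is a small sketch using today’s `Enum.map_reduce/3` as a stand-in, since it has a similar positional tuple contract. This is only an analogy: whether the proposed `for let` would behave the same way or raise is exactly what I’m asking. The `pairs` data is made up.

```elixir
# Hypothetical data: each element is itself a 2-tuple.
pairs = [{1, :a}, {2, :b}]

# Intended: swap each pair while keeping a running count.
# Bug: the callback returns only the swapped pair and forgets the accumulator.
{mapped, last} =
  Enum.map_reduce(pairs, 0, fn {n, tag}, _count ->
    {tag, n}
  end)

# No error is raised: {tag, n} is read as {mapped_element, new_accumulator},
# so mapped == [:a, :b] and last == 2.
```

Because the buggy return value happens to be a two-element tuple, it is indistinguishable from a valid one, and the mistake only shows up later as wrong data.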