That is a lot of context switching when you have over a dozen files and folders versus one to a few. How do you justify a file, let alone an entire folder, for an add or delete function?

All major IDEs, Emacs and VIM included, make this quite trivial.

What I meant is that not all modules implement CRUD for a resource. I don’t think I would want that style applied to the File or Enum modules.

Edit for @Exadra37 (I don’t want to post again, so that other people can participate): for me, Erlang/Elixir is the exception where huge modules with lots of functions are fine, well beyond the classic 400-line “maximum average” I keep to in any other language, because of function purity.

Each action is a different context for me, so it is a relief to my brain, not a burden.

This leaves me with modules that have only a few lines of code, so they are very easy to reason about without getting lost.

What I cannot justify at all is working in files that have a lot of responsibilities going on and span hundreds or thousands of lines, where I then have to spend hours or days just to fix a bug or figure out how to add a new feature.

This is not theoretical rhetoric; it was my experience at my previous job.

The countless hours wasted in those huge files of OOP code, which made my head spin just trying to understand the flow of the code and often forced me to resort to drawing that flow on paper, were what drove me to come up with this architecture, which I call the Resource Action Pattern.

This architecture is such a relief for my brain that I don’t have words to describe the pain it took away from me.

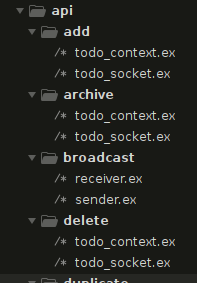

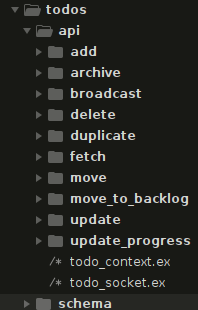

It’s not just one file:

The todo_socket.ex handles incoming socket requests, and if I decide to use the Todo from the CLI then I would have a todo_cli.ex for handling the requests from the terminal. All of them use the todo_context.ex for the business logic.

Also, if you look again at the folder structure, it acts as documentation for the project: you just open it and see all the resources available in your project and what actions are possible, all without reading a single line of docs, which, as we all know, get out of sync very quickly in professional projects.

This folder structure also makes it very easy for newcomers to start working on the project, fixing bugs or adding new features, because it’s clear where to look for a bug that happens when you add a resource, delete it, etc., and when you need to add a new feature it’s pretty obvious that you need to create a new folder for it.

I once had this discussion with Jose, and yes, the core libraries, or for that matter some other libraries, may be an exception to the Resource Action Pattern, but even in my helper libraries I tend to keep the code compliant with my pattern.

I am traumatized by huge files of code, so they need a really strong reason to exist in a project of mine.

Interesting, do you have a blog post about this pattern? I am fine with many little files in many folders, but:

- how do you avoid code duplication?

- it seems that you have many files with the same name in different folders. Will that cause confusion sometimes?

No, I don’t have one. Just an organization on GitHub and GitLab that I started with some repos but never finished.

I place the code that is common to more than one action in the root of the folder for that resource, like here:

So todo_context.ex and todo_socket.ex are where the common code goes, and they are among the few places where I don’t follow the rule of one public function per module.

Always remember that code duplication is better than the wrong abstraction. When in doubt, don’t abstract until you understand the domain you are working on better; later you will be able to make better abstractions to avoid the duplication.

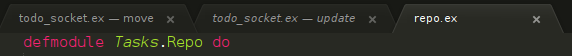

Not in my editor:

That said, the naming convention for the files is whatever makes sense to you.

For me, the important bit for driving clean code and clean architecture is to keep the folder structure resource -> action -> module.ex.
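To make the “folder structure as documentation” point concrete, here is a minimal Python sketch that lists resources and their actions straight from a directory tree. It assumes the resource -> action -> module.ex layout with one folder per resource directly under the given root; the todos/users resources and all file names are hypothetical, and this is an illustration rather than tooling from the original post.

```python
import tempfile
from pathlib import Path


def list_resource_actions(root: Path) -> dict[str, list[str]]:
    """Map each resource folder under `root` to the action folders inside it.

    Assumes the resource -> action -> module.ex layout, e.g. a hypothetical
    todos/add/add_todo.ex. Files sitting directly in a resource folder (such
    as a shared todo_context.ex) are common code, so they are not listed.
    """
    resources: dict[str, list[str]] = {}
    for resource_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        resources[resource_dir.name] = [
            action_dir.name
            for action_dir in sorted(resource_dir.iterdir())
            # An action folder counts only if it holds at least one .ex file.
            if action_dir.is_dir() and any(action_dir.glob("*.ex"))
        ]
    return resources


def build_demo_tree(root: Path) -> None:
    """Create empty placeholder files for a small, hypothetical project."""
    for rel in (
        "todos/todo_context.ex",
        "todos/add/add_todo.ex",
        "todos/delete/delete_todo.ex",
        "users/register/register_user.ex",
    ):
        path = root / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text("")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        build_demo_tree(Path(tmp))
        for resource, actions in list_resource_actions(Path(tmp)).items():
            print(f"{resource}: {', '.join(actions)}")
```

Run as a script, it prints `todos: add, delete` and `users: register`. Files at the resource root, like the shared todo_context.ex, are deliberately skipped, since they hold common code rather than an action.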

(is a bit bored during Sunday afternoon) … Oh, I know! I’ll necro a thread!

You are comparing two polar opposites (200 files x 20 lines each vs a single file with 4000 lines). In all Phoenix projects I worked on we had a Users context that never went above 400-500 lines of code which is still very manageable with a good IDE (or even for me with Emacs and without any tree-view-on-the-left addons).

Obviously I am not telling you how you should feel about your style; for me, however, a large number of small files is a complete “nope”. I find it reassuring that something like MyApp.Domain.Users contains every operation I might need to operate on the users in the system. And if the file grows big, I’ll split it apart and put a bunch of defdelegates in the central context to keep the perception that there is a single doorway to the user functionality in place.

That, plus exercising some discipline about which functions are public or private, nets me exactly the same benefits you see from your approach, only I have to open far fewer files during my workday.

As someone who gets headaches seeing huge classes, I don’t feel that same pain with modules full of functions. The really great thing about functional programming is that if a module gets too unwieldy, you just break it out into another module and delegate to it. While you still have to come up with a name for it, you don’t have to worry about what pattern you’re using to extract it (“service” or “manager” or any of that utter nonsense) and then, just as @dimitarvp mentions, defdelegate to it. The cost of making a naming mistake in this instance (no pun intended) is also very, very low. In fact, my root contexts generally just have a few functions with a bunch of delegates, and it’s dead simple to see exactly what the entire context does with a quick scan, function signatures and all.

Yep. “Service”, “manager” and “context” in particular are words that have been so abused over the years that these days they mean absolutely nothing to me. Mention them in a dev meeting and you’ll get blank stares from yours truly.

This is a great (and very important) point! In my view, a large number of micromodules is basically just another extreme, not particularly better than a small number of megamodules. In both cases I find it hard to see the forest for the trees. Personally, I prefer a balanced codebase that has a “reasonable” number of “reasonably sized” modules. This is all very hand-wavy, but I don’t think we can use a precise number (i.e. preferred LOC per module) as a rule. As a very rough guideline, I think that modules > 1000 LOC (not including user-facing documentation) can often be partitioned in a meaningful way. OTOH, a small module with a single public function is likely overkill. Occasionally straying from those guidelines is fine, but if the codebase ends up with a lot of mega- or micro-modules, then it’s probably under- or over-designed.

Even more important than size is cohesion. Things inside the same module should logically belong together, i.e. deal with the same logical concept. To get that, I start by stashing functions in a single “junk drawer” module (e.g. the top-level context), and then refactor once the module starts feeling bloated. This is to me the essence of agile design. Don’t start with an overelaborate design upfront; instead, make educated decisions after the fact, reasoning about the existing code that supports today’s features and identifying groups of behaviour.

The boundary tool recently got a helper mix task called boundary.visualize.funs that can assist with such refactoring. The task produces a dependency graph of functions inside a module, which can help the developer make a decision about a module split. Roughly speaking, a frequent sink, i.e. one or a few functions called by many others, might indicate a potential for a separate abstraction. Likewise, independent verticals (groups of functions that do not call each other) could sometimes be worth splitting (though not always, e.g. I prefer to keep the readers and the mutators of the same “thing” together, and they typically don’t depend on each other).
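The two heuristics in the paragraph above (frequent sinks and independent verticals) can be sketched over a hand-written call graph. This is a minimal Python sketch, not how boundary.visualize.funs is implemented; the Users call graph, its function names, and the default `min_callers` threshold of 3 are all hypothetical.

```python
from collections import defaultdict


def frequent_sinks(calls: dict[str, set[str]], min_callers: int = 3) -> list[str]:
    """Return functions called by at least `min_callers` distinct other functions."""
    callers: dict[str, set[str]] = defaultdict(set)
    for caller, callees in calls.items():
        for callee in callees:
            if callee != caller:  # ignore direct recursion
                callers[callee].add(caller)
    return sorted(fn for fn, who in callers.items() if len(who) >= min_callers)


def independent_verticals(calls: dict[str, set[str]]) -> list[set[str]]:
    """Split functions into groups with no calls between groups.

    Call direction is ignored, so each group is a weakly connected component
    of the call graph.
    """
    neighbours: dict[str, set[str]] = defaultdict(set)
    for caller, callees in calls.items():
        neighbours.setdefault(caller, set())  # keep functions with no calls
        for callee in callees:
            neighbours[caller].add(callee)
            neighbours[callee].add(caller)

    seen: set[str] = set()
    groups: list[set[str]] = []
    for start in sorted(neighbours):
        if start in seen:
            continue
        group: set[str] = set()
        stack = [start]
        while stack:  # iterative depth-first search
            fn = stack.pop()
            if fn not in group:
                group.add(fn)
                stack.extend(neighbours[fn] - group)
        seen |= group
        groups.append(group)
    return groups


# Hypothetical call graph of a Users context: caller -> functions it calls.
USERS_CALLS = {
    "create_user": {"validate", "hash_password"},
    "update_user": {"validate"},
    "reset_password": {"validate", "hash_password"},
    "validate": set(),
    "hash_password": set(),
    "list_users": {"base_query"},
    "get_user": {"base_query"},
    "base_query": set(),
}

if __name__ == "__main__":
    print("sinks:", frequent_sinks(USERS_CALLS))
    for group in independent_verticals(USERS_CALLS):
        print("vertical:", sorted(group))
```

On this made-up graph, `validate` comes out as the frequent sink, and the readers (`list_users`, `get_user`) form a vertical separate from the mutators. That is exactly the case where, as noted above, splitting is not always worthwhile.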

I arrived at the same way of doing work about 8 or so years ago. I wanted my code to read like plain English and I have bent rules to achieve this goal. During this process I also discovered the “junk drawer” module pattern. It’s the way our brain does things anyway, and is reflected in how many of us “organize” our homes as well.

This has also led me to use module/function names like:

- MyApp.Business.Cart.add
- MyAppWeb.Views.Util.line_item_container
- MyApp.Schema.User
- MyApp.Business.Users.reset_password

etc. And, as said above, I use defdelegate so I have one convenient place in my brain to look for functions related to X or Y in the project.

Kudos and really great work on that piece of software. I keep looking for excuses to use it and that might be the one.

> I keep looking for excuses to use it and that might be the one.

Yeah, I also think it’s similar to how I work in general life, compartmentalizing things when they become too large.

You can use this particular mix task without needing to set up boundaries or add the boundary compiler. In other words, just add the library as a dependency (I think even runtime: false is enough) and invoke the task on a module.

A powerful type system is a good thing to have, but it is of even less value than tests when it comes to proving the logical correctness of software.

At the end of the day, only good software development practices can save it.

And it’s about working with your teammates on software as a team.

I see this trend of techies trying to solve socio-technical problems with more tech and I think about how pervasive that practice is.

To quote “When Coffee & Kale Compete”:

> I feel that way especially with software because we have the analytics and the geeks who are building the software; they’re all about tracking and logging and all these data… I always give the analogy of being a retail shop owner and hiding in the back room and trying to learn from your customers by watching the closed-circuit television.
>
> You could watch [customers] come in, walk around your store, pick up things, put them down, try things on…or you could just walk out and ask them, “Hey, what brought you in here today? What are you looking for? What other places did you try in the past?”

And I believe this is at least a part of the reason why people are migrating from Elixir to Go and then to Rust

There is some noticeable brain-drain occurring, especially towards Rust and WASM.

Why the hell would one prefer a language with rudimentary concurrency primitives for networking-heavy services? That’s really beyond me.

I remember a conversation in which some crypto guys said their node was limited to 40 connections because async I/O in Rust sucks. They worked at a company that I hugely respect and that was valued at over $500m.

Sure, the trade-off there is that Rust became the de facto standard language for the new wave of crypto projects, and it has all the cryptographic libraries, which would be ridiculous to reimplement in Elixir (at least it was probably infeasible before Nx).

But the point is that a team of developers working with a dynamic type system will deliver a better crafted piece of software that will serve their customers better than a group of individual developers working with a static type system.

TL;DR: groups of individual contributors who don’t know how to write software together as a team seek shelter in their tools.

That has nothing to do with the language.

Disclosure: I do love Rust’s type system, and it’s the next language I want to learn because it will definitely expand my worldview.

But not nearly as much as Elixir, which added the notion of time to my everyday practice.

This actually works for a lot of issues. The people who tend to write code negligently and without much care what gets passed to where don’t survive for long coding Rust, OCaml, Haskell, and the other compile-time typed languages.

Even outside that area, enforcing a simple pre-commit Git hook (which is a tooling solution, not a programming language one) can do wonders for the productivity of the team.

I relate to the argument that not all social / economic / political issues can be solved by tech. That’s true. But it’s a bit too extreme a view for my taste, because my practice has shown that forcing people to “behave” with good tooling (or a strict programming language) works.

Strange example. I’d definitely leave the store and not return there anytime soon. There are acceptable and unacceptable ways of gathering metrics / analytics.

I was at one such company last year. Rust’s async has been improving literally every week, for months. We ended up having one Kubernetes pod with 4 vCPUs, 8GB RAM and NVMe SSD storage that peaked at about 150,000 parallel requests.

Rust’s async used to suck but the community is extremely dedicated and things are improving with an impressive speed.

I agree it’s not OTP, of course; nobody has beat that so far. But Rust is getting fairly close, trust me.

I’ve seen this but I’ve seen the other extreme as well. This can’t be generalized.

Agreed. Good devs find ways around the problems in their ecosystem and even make them work to their advantage. Example: we might not have compile-time typing guarantees in Elixir but between mocks and property tests you can gear your system with a very heavy anti-bug armor.

Bad devs will screw up no matter what they work with.

That one I fully agree with. Elixir is extremely productive. You can test your idea with just a few lines in iex and prototype a solution in minutes.

Rust’s iteration cycles are very punishing in terms of an up-front cost and this can be quite annoying.

> The people who tend to write code negligently and without much care what gets passed to where don’t survive for long coding Rust, OCaml, Haskell, and the other compile-time typed languages.

I’m talking about empowering customers with new capabilities. That’s on the other level than codebase aesthetics and the absence of errors related to type conversion.

If someone doesn’t have the skills to meet the quality bar, you do pair programming and give them time to learn and practice deliberately until the problem is solved.

They will either rise to the brighter part of the hill or continue their journey somewhere they don’t have to be under the light constantly.

> But it’s a bit too extreme a view for my taste because my practice has shown that forcing people to “behave” with good tooling (or a strict programming language) works.

I stick to the theory that people want to become better at what they’re doing. If they don’t, either developing software is not important to them or the company doesn’t give them a chance to unveil their potential.

> But it’s a bit too extreme a view for my taste because my practice has shown that forcing people to “behave” with good tooling (or a strict programming language) works.

Practice can show only that something is not inherently wrong, not that something is completely right.

> Rust’s async used to suck but the community is extremely dedicated and things are improving with an impressive speed.

That’s great to know!

> This can’t be generalized.

I’m willing to bet my last penny on that. Given a complicated enough domain, a decently sized company, and a two-to-three-year timeframe, a team will always win over a group of individual contributors.

> That one I fully agree with. Elixir is extremely productive. You can test your idea with just a few lines in iex and prototype a solution in minutes.

I meant long-lived processes that outlive individual I/O requests/response cycles. They add a dimension of time to the app.

> Rust’s iteration cycles are very punishing in terms of an up-front cost and this can be quite annoying.

Not only Rust. Again, Rust is a great language. There is no intention to start a flame or language-politics discussion here.

Software is an implementation of a conceptual model and every day we learn more and more about it.

Compare yourself after one year in a project and after two years.

You are more knowledgeable and understand how abstractions should be structured much better than you did before.

But how many teams update their codebases to reflect the changes in their conceptual models?

Many of them prefer to jump through the hoops of mental mapping while writing code.

And that’s what makes people neglect their professional duties and become disillusioned with their software development careers.

Even worse, junior developers are being poisoned into thinking that it’s the only possible way to go.

And then you have to add tools to “force” people into doing things, instead of having them as just a safeguard against a silly mistake.