I’ve decided to create this topic to discuss optimization possibilities for something like Phoenix LiveView. I’ve created this topic under the “Libraries” tag, because I might actually write a library based on this. Because my totally vaporware project needs a name, let’s call it PhoenixUndeadView. Why UndeadView? Because I want my views to be as “dead” as possible, so that they are easy to optimize and minimize data transmission over the network.

There isn’t any code to look at yet, because all I have is small experimental code fragments.

Key ideas

My efforts to (prematurely) optimize something like LiveView center around a couple of ideas. The initial render should work just like a normal template, ideally in a way that’s indistinguishable from normal templates. It should use the same template language as the rest of the framework and, as much as possible, the same HTML generation helpers (those in Phoenix.HTML, for example).

Basic architecture

According to Chris’s talk, LiveView works by rendering state changes into HTML, sending the entire HTML to the client, and diffing the HTML on the client using morphdom, a JavaScript library that produces optimal diffs between two DOM trees (which preserves cursor positions, CSS transitions, etc.). Some custom work is probably needed to support input fields where the user is typing, but the bulk of the work is done by morphdom. In any case, this requires writing a fair amount of JavaScript, which is always a source of integration problems with the Elixir codebase, but that’s part of the reality of web development.

I really like this architecture, and I had this idea independently as soon as Drab appeared, but I never worked on it because sending that much HTML back to the client always seemed too wasteful.

But recently, I’ve thought of some ideas to optimize the amount of data that needs to be transferred.

Optimization ideas

Phoenix exploits iolists as a good way to cache the parts of the template that remain the same. Only dynamic parts must be regenerated. See here and here if possible. The main idea behind Phoenix’s use of iolists is that what is dynamic must change and what is static must remain the same. How does this apply to something like LiveView? When the state changes, only the parts of the HTML that change should be sent over the network.

Currently, I’m not worried about generating only the minimal changes, but I think that sending minimal changes is probably very beneficial. However, generating the entire string and trying to deduce the parts that have changed is probably not efficient. That’s why I think that separating the static from the dynamic parts at compile-time would be beneficial.
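One possible refinement of "send only what changed" can be sketched in JavaScript, assuming each render has already been reduced to an array of dynamic strings. The names `diffDynamic` and `applyPatch` are made up for illustration, and on the server the same comparison would of course be written in Elixir:

```javascript
// Hypothetical helper: compare the dynamic parts of two renders and keep
// only the slots whose value changed, as [index, newValue] pairs.
function diffDynamic(previous, next) {
  const patch = [];
  next.forEach((value, index) => {
    if (previous[index] !== value) patch.push([index, value]);
  });
  return patch;
}

// Hypothetical client-side counterpart: apply a patch to the cached
// dynamic parts without mutating the cached array.
function applyPatch(cached, patch) {
  const updated = cached.slice();
  for (const [index, value] of patch) updated[index] = value;
  return updated;
}

const before = ["alice", "3 days"];
const after = ["alice", "4 days"];
const patch = diffDynamic(before, after);
console.log(JSON.stringify(patch)); // [[1,"4 days"]]
```

Comparing short dynamic strings slot by slot should be much cheaper than diffing two full HTML strings, which is the reason for splitting static from dynamic up front.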

The idea would be to do the following:

- Cross-compile the EEx template into a JavaScript function which takes the dynamic substrings as arguments (not the arguments to the template function!). For example, the template:

Hello <%= @username %>! How are you? It's been <%= @idle_days %> since we've seen you!

could compile into:

function renderFromDynamic(dynamic) {
  var fullString = "Hello "
    .concat(dynamic[0])
    .concat("! How are you? It's been ")
    .concat(dynamic[1])
    .concat(" since we've seen you!");
  return fullString;
}

- At the same time, compile the EEx template into something that only generates the (already escaped) dynamic strings. I thought this was very easy, but now I’ve come to think it’s very hard, in the sense that you almost have to write your own version of the Elixir compiler for this to work as it should. EEx templates map into quoted expressions with variable rebindings and other things which make it harder to extract parts of the quoted expression into a list. In the case above, the template could be compiled into something like:

def render_dynamic_parts(conn, assigns, other_args) do
  # One entry per dynamic segment, in the order they appear in the template
  [~e(<%= @username %>), ~e(<%= @idle_days %>)]
end

- Then, each time the state changes, send only the dynamic parts to the client (as a JSON array of strings; JSON arrays are reasonably compact), and have the JavaScript function above render the full string on the client and diff the result with morphdom or something else.

This way we retain the principles of Phoenix templates: what is constant never changes and what is dynamic always changes.
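To make the round trip concrete, here is a minimal sketch of the client side, assuming the compiler emits the static parts as an array and the server sends the dynamic parts as a JSON array. `makeRenderer` and the sample payload are hypothetical, and the morphdom step is left out:

```javascript
// Hypothetical output of the template compiler: the static parts of the
// template, in order. There is always one more static part than dynamic.
const staticParts = [
  "Hello ",
  "! How are you? It's been ",
  " since we've seen you!",
];

// Build a render function that interleaves static and dynamic parts.
function makeRenderer(statics) {
  return function renderFromDynamic(dynamic) {
    if (dynamic.length !== statics.length - 1) {
      throw new Error("expected " + (statics.length - 1) + " dynamic parts");
    }
    let html = statics[0];
    dynamic.forEach((part, i) => {
      html += part + statics[i + 1];
    });
    return html;
  };
}

const render = makeRenderer(staticParts);

// Payload as it might arrive over the socket (already escaped on the server).
const payload = '["Jos&eacute;", "3 days"]';
const html = render(JSON.parse(payload));
console.log(html);
// Hello Jos&eacute;! How are you? It's been 3 days since we've seen you!
```

The static parts are shipped once with the page, so each later update only costs the size of the JSON array.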

Problems

EEx templates are extremely powerful. In fact, the entire Elixir language is embedded in such templates. That means writing your own parser or tokenizer for EEx templates is very hard. As noted above, it’s the same as writing a full Elixir tokenizer (with some small changes).

The usual way to write EEx engines is to hook into the EEx.Engine behaviour and customize the necessary callbacks. Again, this seems hard, but it can be done.

Our goal here is to identify the constant and dynamic parts while we compile the template. If you have a dynamic segment, then everything “inside” that segment is also dynamic (no matter how many constant binaries you can extract from that segment), because we only want to split the template into static and dynamic parts one level deep.

This has a small problem. HTML helpers such as form_for, which is used like this

<%= form_for ..., fn f -> %>
  <%# other stuff %>
<% end %>

produce a widget which is 100% dynamic, with no opportunities for optimization, even if the inside of the form is mostly constant (which, in practice, it is). This means that any clever optimizations one might come up with will fail in one of the primary use cases for LiveViews, which is realtime form validation…

This means we can’t use the “normal” HTML helpers in Phoenix.HTML. There is a simple solution, though. The idea came from looking at nimble_parsec. NimbleParsec uses simple functions (no macros!) to generate a parser AST, which is compiled into Elixir expressions in a separate step.

Transposing this to the templates, it makes sense to write a series of compile-time helpers, which are meant to do as much work at compile time as possible. We can’t maintain total compatibility, but we might be able to salvage part of the API and maintain a reasonable level of compatibility.

I would have to write a series of helpers like PhoenixUndeadView.HTML or something like that. The goal is to resolve as much of the static template at compile time as possible. I’ve been writing HTML helpers a lot, and the truth is that most of them are mostly static (or can be rewritten in a way that makes them mostly static).
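As a rough illustration of what "mostly static" helpers could look like, here is a JavaScript stand-in for functions that would really be Elixir code run at compile time. Every name here (`staticPart`, `dynamicPart`, `textInput`, `errorTag`, `form`) is hypothetical and not part of Phoenix.HTML; the point is only that helpers return segments rather than rendered strings:

```javascript
// A segment is either static text or a named dynamic slot.
const staticPart = (text) => ({ type: "static", text });
const dynamicPart = (name) => ({ type: "dynamic", name });

// Hypothetical helpers: plain functions that return segments instead of
// rendered strings, so the static markup is known before any request.
function textInput(field) {
  return [
    staticPart('<input type="text" name="' + field + '" value="'),
    dynamicPart(field + "_value"),
    staticPart('">'),
  ];
}

function errorTag(field) {
  return [
    staticPart('<span class="error">'),
    dynamicPart(field + "_error"),
    staticPart("</span>"),
  ];
}

function form(action, children) {
  return [
    staticPart('<form action="' + action + '" method="post">'),
    ...children.flat(),
    staticPart("</form>"),
  ];
}

const segments = form("/users", [textInput("email"), errorTag("email")]);
const slots = segments
  .filter((segment) => segment.type === "dynamic")
  .map((segment) => segment.name);
console.log(slots); // the two dynamic slots: email_value and email_error
```

With helpers shaped like this, a form used for realtime validation would expose only its field values and error messages as dynamic slots, instead of being one large dynamic blob.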

Compiling EEx templates into a reasonable format

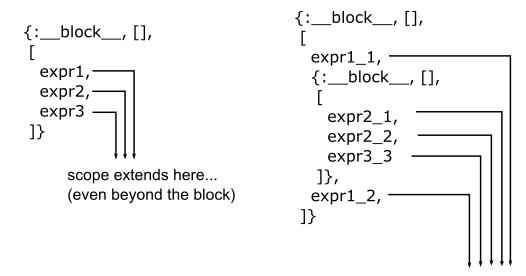

As I said above, compiling EEx templates into a format that makes it easy to separate the static and dynamic parts is not trivial. I’m thinking of developing a more convenient intermediate representation (probably flattened iolists, which are actually very easy to use directly, although they’re a little weird syntactically) and trying to compile EEx templates (or similar ones) into that format.
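A minimal sketch of what such a flattening step might do, written in JavaScript with arrays standing in for iolists. The representation (plain strings for static text, `{dynamic: name}` objects for dynamic slots) and the `flatten` function are assumptions made for this example, not an existing format:

```javascript
// Hypothetical intermediate representation: a nested list where strings
// are static text and {dynamic: name} objects are dynamic slots.
const nested = [
  "<form>",
  ['<input name="email" value="', { dynamic: "email_value" }, '">'],
  ['<span class="error">', { dynamic: "email_error" }, "</span>"],
  "</form>",
];

// Flatten the nesting and merge adjacent static strings, so that static
// and dynamic parts strictly alternate.
function flatten(template) {
  const statics = [""];
  const dynamics = [];
  const walk = (node) => {
    if (Array.isArray(node)) {
      node.forEach(walk);
    } else if (typeof node === "string") {
      statics[statics.length - 1] += node;
    } else {
      dynamics.push(node.dynamic);
      statics.push("");
    }
  };
  walk(template);
  return { statics, dynamics };
}

const flat = flatten(nested);
console.log(flat.statics);
console.log(flat.dynamics);
```

After flattening there is always exactly one more static part than dynamic slots, which is the shape a client-side render function needs in order to interleave them.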