Hey everyone,

Excited to share a new open-source project built with Elixir. Sequin combines Elixir and Postgres into a feature-rich message queue.

We (the maintainers) were searching for the Goldilocks message stream/queue. Kafka’s great when you need tremendous scale, but the trade-off is that it has few features and is hard to debug, configure, and operate. SQS also has limited features, and we don’t like its dev/test story. RabbitMQ was closest to what we were seeking, but it was the least familiar to the team and looked like it would be a black box.

Databases and messaging systems are at the heart of most applications. But having both adds complexity. We already know and love Postgres – can’t it do both?

Teams pass on using Postgres for streaming/queuing use cases for two primary reasons:

- They underestimate Postgres’ ability to scale to their requirements

- Building a stream/queue on Postgres is DIY/open-ended

Getting DIY right requires some diligence to build a performant system that doesn’t drop messages (MVCC can work against you). We also wanted a ton of features from other streams/queues that weren’t available off-the-shelf, namely:

- message routing with subjects

- a CLI with observability

- webhook and WAL ingestion (more on that in a bit)

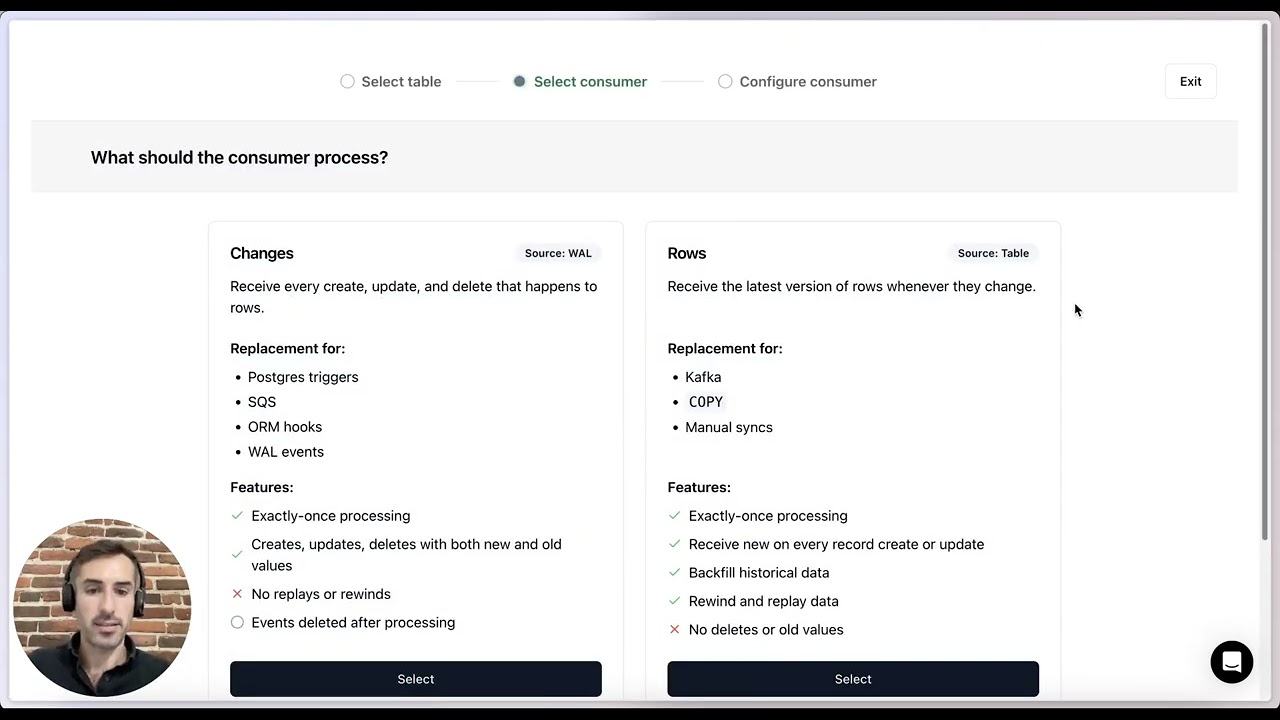

So, we built something that leans into Postgres’ strengths. We love the stream-based model, where messages persist in one or more streams. Each of those streams has its own retention policy (like Kafka). You can then create consumers that each process a filtered subset of messages exactly once.
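To make the idea of subject-based filters concrete, here is a minimal sketch of matching a message subject against a consumer's filter. The wildcard rules are an assumption borrowed from NATS-style subjects (`*` for one token, `>` for the rest), and `subject_matches` is a hypothetical helper, not Sequin's API.

```python
def subject_matches(pattern: str, subject: str) -> bool:
    """Return True if a dot-delimited subject matches a filter pattern.

    Assumed (hypothetical) wildcard rules, NATS-style:
      `*` matches exactly one token
      `>` matches one or more trailing tokens and must come last
    """
    p_tokens = pattern.split(".")
    s_tokens = subject.split(".")
    for i, p in enumerate(p_tokens):
        if p == ">":
            # Valid only as the final pattern token, with at least one
            # subject token left for it to consume.
            return i == len(p_tokens) - 1 and len(s_tokens) > i
        if i >= len(s_tokens):
            return False  # pattern is longer than the subject
        if p != "*" and p != s_tokens[i]:
            return False  # literal token mismatch
    # Without a trailing `>`, the token counts must match exactly.
    return len(p_tokens) == len(s_tokens)


if __name__ == "__main__":
    print(subject_matches("orders.*.created", "orders.eu.created"))
    print(subject_matches("orders.>", "users.eu.created"))
```

A consumer with the filter `orders.>` would then only ever see messages whose subject starts with the `orders` token.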

We didn’t like the idea of a Postgres extension. We wanted something we could use with any existing hosted Postgres database. And the Elixir layer gives us a lot of performance benefits. We use Elixir to do stuff that Postgres is bad at, like caching and keeping counters.

Plus, who doesn’t want to build in Elixir as much as they can?

–

Because it’s all Just Postgres™, observability comes out-of-the-box. But we love a good CLI, so we’ve already built out a lot there to make debugging/observing easy (sequin observe is like htop for your messages).

We think killer use-cases for a Postgres-based stream are (1) processing Postgres WAL data and (2) ingesting webhooks.

For (1), we built on the shoulders of giants (h/t Postgrex, Realtime, Cainophile). It’s really neat to be able to process the WAL as a message queue!

For (2), we’re planning a way to expose HTTP endpoints so you can go from API → Sequin endpoint → Postgres → your app.

–

Under the hood, Sequin uses a very simple and familiar schema for the stream. We tried a lot of fancy stuff, but the simplest route turned out to be the most performant.

Messages flow into the messages table (partitioned by the stream_id). In the same transaction, messages are fanned out to each consumer that’s filtering for that message (consumer_messages).

In terms of performance, this means Sequin can ingest messages about as fast as Postgres can insert them. On the read side, consuming messages involves “claiming” rows in consumer_messages by marking them as delivered, then later deleting those rows on ack.

We benched on a db.m5.xlarge (4-core, 16GB RAM, $260/mo) RDS instance and the system was happy at 5k messages/sec, bursting up to 10k messages/sec. Your laptop is beefier than this; obviously, bigger machines can do more.

–

We still have a lot to build. It’s pre-1.0. And we’re curious if the model of “combine a stateless docker container (Elixir) with your existing Postgres db” will resonate with people.

You can see an example of using Sequin with Broadway here:

https://github.com/sequinstream/sequin/tree/main/examples/elixir_broadway

We’re looking forward to feedback and are happy to shape the roadmap according to your real-world needs! Leave a comment or send me a DM if there’s anything you’d like to see.