Before moving forward with integration with OpenTelemetry & Cloud Traces in Google Cloud, we’ve implemented a very simple way to match “slow queries” to the code. It’s based on small piece of code that:

- creates an ETS table,

- attaches a telemetry handler to write queries and stacktraces (and a single sample of query parameters for that query) to the ETS table,

- a function to scan ETS table for a matching query using a string fragment of the query.

Sample parameters are not really necessary, but are a convenience to be able to later quickly re-run the query, or assemble an EXPLAIN (...) from it. On a typical day our app issues maybe under 1000 unique SQL queries. The in-memory size of ETS table I’ve seen so far was well under 20 megabytes.

The script looks like this:

defmodule EctoStacktraces do

def setup() do

:ets.new(:queries_and_stacktraces, [:set, :named_table, :public])

:telemetry.attach("ecto-stacktrace-tracking", [:my_application, :repo, :query], &handle_event/4, %{})

end

def filter(string) do

{:ok, regex} =

string

|> Regex.escape()

|> Regex.compile()

find = fn {query, _stacktrace, _cast_params, _measurements} = item, acc ->

if String.match?(query, regex) do

[item | acc]

else

acc

end

end

:ets.foldl(find, [], :queries_and_stacktraces)

end

def handle_event([:my_application, :repo, :query], measurements, metadata, _config) do

:ets.insert(:queries_and_stacktraces, {metadata[:query], metadata[:stacktrace], metadata[:cast_params], measurements})

end

end

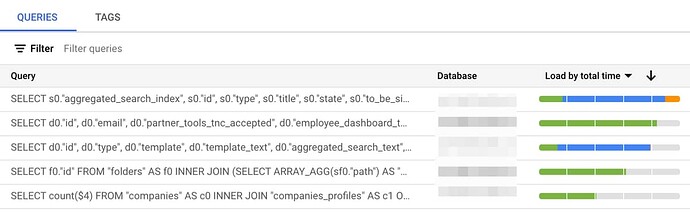

Knowing a slow query reported by tools like “Query Insights” (feature of Google Cloud SQL):

…we’ve been able to track suspicious down to the code using this technique:

-

run:

EctoStacktraces.setup()

This is done either by connecting to a running node remotely, or as part of application.ex for example,

-

some time goes by to let the slow query manifest itself,

-

then, knowing INNER JOIN (SELECT ARRAY_AGG(sf0."path") is part of the slow query:

[{query, stacktrace, _sample_params, _measurements}] =

EctoStacktraces.filter(~s{INNER JOIN (SELECT ARRAY_AGG(sf0."path")})

IO.inspect(stacktrace)

This part INNER JOIN (SELECT ARRAY_AGG(sf0."path") is taken from a service that reports slow queries, in our case it’s “Query Insights” feature in Google Cloud:

The above is very simple and obviously doesn’t survive process exit, for example detaching from a remote session, or restarting the application. But it helped us move forward with optimising several long-standing hard-to-locate SQL queries in the app. Also, looking at some of the stacktraces helped reveal code that hides calls to DB in private functions, that don’t show up in the stacktrace