Without invoking Rust via a NIF, what is the fastest pure Elixir solution to this problem?

- I want to have 1 GB of contiguous memory, consisting entirely of u32s.

- Any process can write to any index, as long as (1) the write is u32-aligned and (2) the u32 is written atomically.

- Any process can point to any starting position and say “send the next 1024 bytes out over TCP” (assume everything is within range).
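For scale, the indexing works out as follows, assuming “1 GB” means 1 GiB ($2^{30}$ bytes) and u32 slots numbered from 0:

$$
\begin{aligned}
\text{slots} &= \frac{2^{30}\ \text{bytes}}{4\ \text{bytes per u32}} = 2^{28} = 268{,}435{,}456 \\
\text{byte offset of slot } k &= 4k, \qquad 0 \le k < 2^{28} \\
\text{slots per 1024-byte send} &= \frac{1024}{4} = 256, \quad \text{i.e. slots } k, \dots, k + 255
\end{aligned}
$$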

====

So note: if a process is writing 10 numbers, I am okay if it writes 5, some other process does a read, and then the writer writes the remaining 5. I don’t care if the reads are “inconsistent”, as long as every u32 is written atomically.

====

Question: Is there an Elixir builtin for solving this problem, or does this go into NIF territory?

Mnesia doesn’t solve your problem?

Do Mnesia or ETS store the u32s in contiguous memory, so that we can push them straight from memory to a TCP socket?

With these specific requirements, I do not know of a built-in data structure that would work this way. The BEAM tends to push you away from solutions involving 1 GB of shared mutable memory.


Thanks for confirming the negative result. This was my intuition, given the shared-nothing, message-passing nature of the BEAM, but I wanted to double-check.

I’m still too much of a newbie to have explored Mnesia and ETS in much depth, but… using the bits Elixir is famous for, would it be feasible for your purpose to have one process in charge of reading and writing the memory (so it’s no longer shared mutable state, which is the devil), and have the other processes ask it to read what’s at, or write a value to, a given location?
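A minimal sketch of that single-owner idea, assuming a fixed-size slab of u32 slots. The module `SlabOwner` and its functions are hypothetical, and Erlang’s `:array` stands in for the memory. Every read and write becomes a message to one GenServer, so nothing is shared, but each read reply is a fresh copy and all traffic funnels through one mailbox.

```elixir
defmodule SlabOwner do
  # Hypothetical single-owner slab: one GenServer holds every u32 slot and
  # serializes all reads and writes, so no memory is shared between processes.
  use GenServer

  def start_link(slots), do: GenServer.start_link(__MODULE__, slots)

  # Write one u32 at a 0-based slot index.
  def write(pid, index, value), do: GenServer.call(pid, {:write, index, value})

  # Return `count` consecutive slots starting at `index` as a little-endian
  # binary. The caller receives a copy, not a view of the owner's data.
  def read(pid, index, count), do: GenServer.call(pid, {:read, index, count})

  @impl true
  def init(slots), do: {:ok, :array.new(slots, default: 0)}

  @impl true
  def handle_call({:write, index, value}, _from, slab)
      when value in 0..0xFFFFFFFF do
    {:reply, :ok, :array.set(index, value, slab)}
  end

  def handle_call({:read, index, count}, _from, slab) do
    bin =
      for i <- index..(index + count - 1)//1, into: <<>> do
        <<:array.get(i, slab)::unsigned-little-32>>
      end

    {:reply, bin, slab}
  end
end
```

For example, after `SlabOwner.write(pid, 3, 0xDEADBEEF)`, `SlabOwner.read(pid, 2, 2)` returns `<<0, 0, 0, 0, 0xEF, 0xBE, 0xAD, 0xDE>>`. The trade-off is throughput: every access is serialized through one process, and `:array` stores each slot as a full term, so a 1 GB slab would occupy considerably more than 1 GB.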

The closest to what you are asking is Erlang’s `:counters` module. However, they are:

If your answer is “make a copy”, then I can see that module being augmented to provide a to_binary conversion. If you don’t want a copy, it is hard to see it happening.
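Here is roughly what a user-land, copying `to_binary` could look like. It uses `:atomics` (a sibling of `:counters` whose `put/3` and `get/2` are atomic per element) rather than `:counters`, and the module `U32Slab` and its functions are hypothetical. Two caveats remain: `:atomics` elements are 64 bits wide, so each u32 costs 8 bytes, and every send copies the window into a new binary.

```elixir
defmodule U32Slab do
  # Hypothetical helpers over :atomics. Each element is a 64-bit unsigned
  # atomic holding one u32, so the slab costs 8 bytes per u32, not 4.

  def new(slots), do: :atomics.new(slots, signed: false)

  # Any process holding `ref` may write; each put/3 is atomic on its own.
  # Indexes here are 0-based; :atomics itself is 1-based.
  def write(ref, index, value) when value in 0..0xFFFFFFFF do
    :atomics.put(ref, index + 1, value)
  end

  # User-land `to_binary`: copies `count` slots into a new little-endian
  # binary. Each slot is read atomically, but the window is not a snapshot.
  def to_binary(ref, index, count) do
    for ix <- (index + 1)..(index + count)//1, into: <<>> do
      <<:atomics.get(ref, ix)::unsigned-little-32>>
    end
  end

  # Copy a 1024-byte window (256 u32s) and push it to a TCP socket.
  def send_window(socket, ref, index) do
    :gen_tcp.send(socket, to_binary(ref, index, 256))
  end
end
```

Because an `:atomics` reference can be passed to other processes and they all see the same memory, writers need no coordination; the copy only happens on the read side, which matches the “make a copy” answer above.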


@josevalim: Here’s the XY problem.

Imagine we are fighting in space, and your ship launches 1000 missiles at my ship, where each missile is `{ x: f32, y: f32, z: f32 }`. So now we have:

S0[ 1000 ][ 3 ] ; // state of the missiles @ time t = 0

S1[ 1000 ][ 3 ] ; // state of the missiles @ time t = 100 ms

R[ 1000 ][ 3 ]; // state the reader actually reads

The ‘guarantee’ I care about here, then, is:

forall i, j: R[i][j] = S0[i][j] or R[i][j] = S1[i][j]

====

So I don’t require that we read all of S0 or all of S1. I am okay if some of the missiles are from S0 and some are from S1. Furthermore, I’m also okay if, within a single missile, x & y are from S0, while z is from S1.

As long as we don’t read a partially updated f32, I’m happy.
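One way to get exactly this per-f32 guarantee in pure Elixir is to store each coordinate’s IEEE-754 bit pattern in its own `:atomics` slot: a single `put/3` replaces the whole value, so a concurrent `get/2` sees either the old float or the new one, never a mix. A sketch, assuming a hypothetical `MissileSlab` module and a layout of three consecutive slots (x, y, z) per missile:

```elixir
defmodule MissileSlab do
  # Hypothetical layout: missile i (0-based) stores x, y, z (j = 0, 1, 2)
  # in :atomics slot 3 * i + j + 1, as the raw bit pattern of an f32.

  def new(missiles), do: :atomics.new(missiles * 3, signed: false)

  defp slot(i, j) when j in 0..2, do: 3 * i + j + 1

  # Reinterpret the f32 as a u32 and store it with a single atomic put, so
  # readers never see half of an old float and half of a new one.
  # Assumes finite values that fit in an f32.
  def put(ref, i, j, x) when is_float(x) do
    <<bits::unsigned-32>> = <<x::float-32>>
    :atomics.put(ref, slot(i, j), bits)
  end

  # One atomic get, then reinterpret the u32 bits as an f32.
  def get(ref, i, j) do
    bits = :atomics.get(ref, slot(i, j))
    <<x::float-32>> = <<bits::unsigned-32>>
    x
  end
end
```

If one task rewrites every coordinate from S0 to S1 while another reads, each value the reader gets is one or the other, which is the `R[i][j] = S0[i][j] or R[i][j] = S1[i][j]` condition; whole missiles may still be mixed. Values pass through single precision, so doubles are rounded to the nearest f32.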

====

So now imagine we have a few GB of data with this level of ‘synchronization’: as long as each f32 is written atomically, we don’t care whether it comes from S0 or S1.

Furthermore, imagine we have lots of readers/observers that grab contiguous subsections of the data and blast them out via UDP.
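A reader could copy a contiguous run of missiles out of the slab and send it as one datagram. This sketch assumes a three-slots-per-missile `:atomics` layout holding f32 bit patterns; `MissileBroadcaster` and its functions are hypothetical, while `:atomics.get/2` and `:gen_udp.send/4` are real OTP calls.

```elixir
defmodule MissileBroadcaster do
  # Hypothetical reader over an :atomics slab where missile i occupies
  # slots 3 * i + 1 .. 3 * i + 3 (x, y, z), each holding an f32 bit pattern.

  # Copy missiles first .. first + count - 1 into one binary.
  def snapshot(ref, first, count) do
    from = 3 * first + 1
    to = 3 * (first + count)

    # Writing the stored u32 back out little-endian yields the same bytes as
    # encoding the f32 little-endian, so no float decoding is needed here.
    for ix <- from..to//1, into: <<>> do
      <<:atomics.get(ref, ix)::unsigned-little-32>>
    end
  end

  # Send the copied subsection to one client as a single UDP datagram.
  def broadcast(socket, address, port, ref, first, count) do
    :gen_udp.send(socket, address, port, snapshot(ref, first, count))
  end
end
```

The copy is unavoidable in pure Elixir: the datagram is assembled from individual atomic reads, so two missiles in one packet may come from different ticks, which is the relaxed guarantee described above.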

====

At this point, I’m 99.99% sure RustLang / GoLang is the right way to go, but am open to hear cool Elixir hacks.

Mutable space ships… with missiles

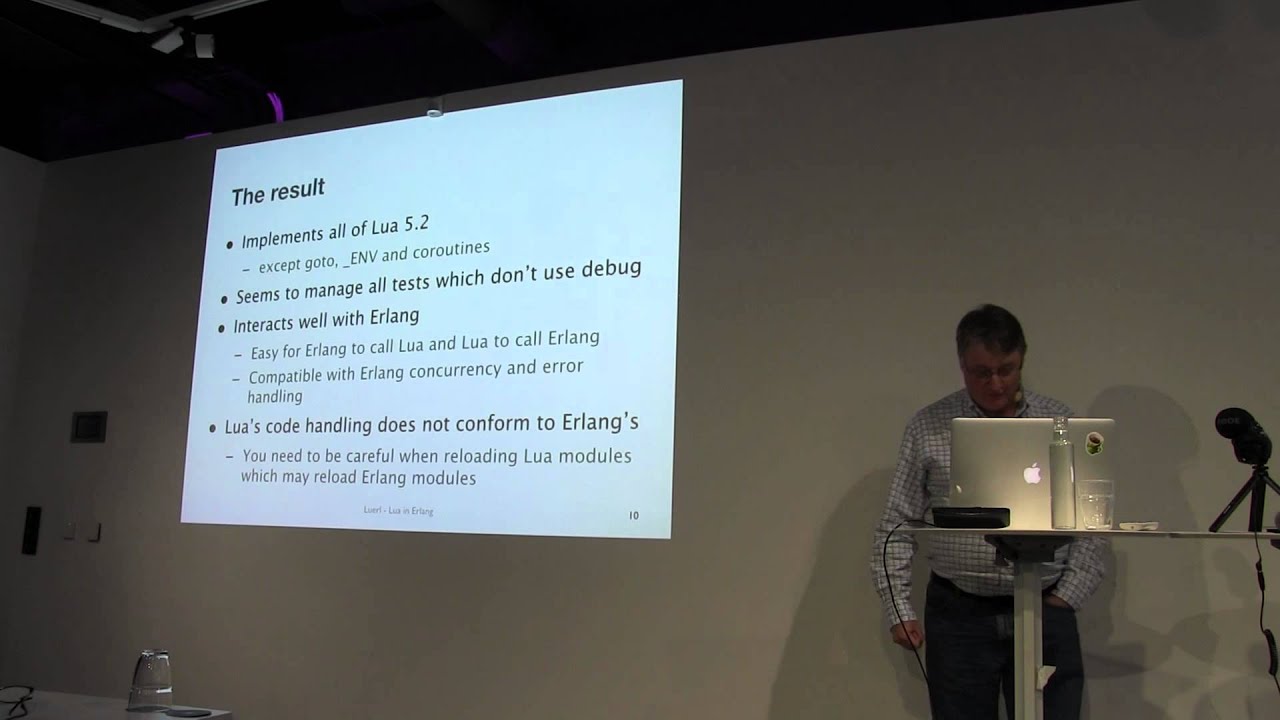

That reminds me of a video about luerl by @rvirding.

It might not give you a contiguous memory space, but it’s like a mutable universe inside the BEAM.

Is it normal for game worlds to have this level of asynchronous updates? How do you plan to handle collisions if you can’t atomically look at multiple cells at once?

Even if you have a centralized source of truth, you might still have async updates.

For example, you have some beefy ‘central truth’ machines with 512 GB of RAM. But you don’t want clients hitting these machines directly, so they push data out to “worker nodes”, which then push state out to clients.

Central Truth => many worker nodes

worker node => many clients

Here, each client is querying a different subset from the worker node.

Here, it is not unreasonable for the worker node to read and write at the same time.

Sure, but that architecture is fundamentally different, because access to that 1 GB of memory is no longer lock-free. If client nodes can submit changes over the network, then they aren’t doing atomic writes to direct memory locations.

I don’t understand how the distinction is relevant.

====

- Look at a worker node.

- We have some processes that are taking data from “Central Truth” and writing it to the worker node’s memory.

- We have some processes that are taking data from the worker node’s memory and writing it out via UDP to clients.

- Access to the worker node’s memory is lock-free.

====

We allow readers to read while writers are writing.
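Within a single BEAM node, this worker-node setup maps fairly directly onto `:atomics`, since one `:atomics` reference can be handed to any number of processes and they all operate on the same memory. A minimal sketch, where `WorkerNodeSketch.run/3` is hypothetical and the “Central Truth” feed is simulated by pre-built batches of `{index, value}` updates (1-based indexes, as in `:atomics`):

```elixir
defmodule WorkerNodeSketch do
  # Hypothetical worker node: writer tasks apply {index, u32} updates that
  # would come from "Central Truth", while reader tasks copy windows out for
  # clients. All tasks share one :atomics ref and nobody takes a lock.

  def run(ref, update_batches, windows) do
    writers =
      for batch <- update_batches do
        Task.async(fn ->
          Enum.each(batch, fn {ix, value} -> :atomics.put(ref, ix, value) end)
        end)
      end

    # In a real node each reader would encode its window and send it to a
    # client; here it just returns the values it copied.
    readers =
      for {first, count} <- windows do
        Task.async(fn ->
          for ix <- first..(first + count - 1)//1, do: :atomics.get(ref, ix)
        end)
      end

    Task.await_many(writers)
    Task.await_many(readers)
  end
end
```

Nothing here takes a lock: writers and readers run concurrently, and each reader’s window can mix old and new values slot by slot. What it does not provide is a zero-copy path from memory to the socket; every reader still builds its own copy before sending.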