I’ve been tinkering with this myself for a couple of days. I was able to create a presigned URL that let me upload to Backblaze directly. However, for some reason the uploaded file was always corrupted when I tried to access it, even though the file size and everything else seemed exactly the same.

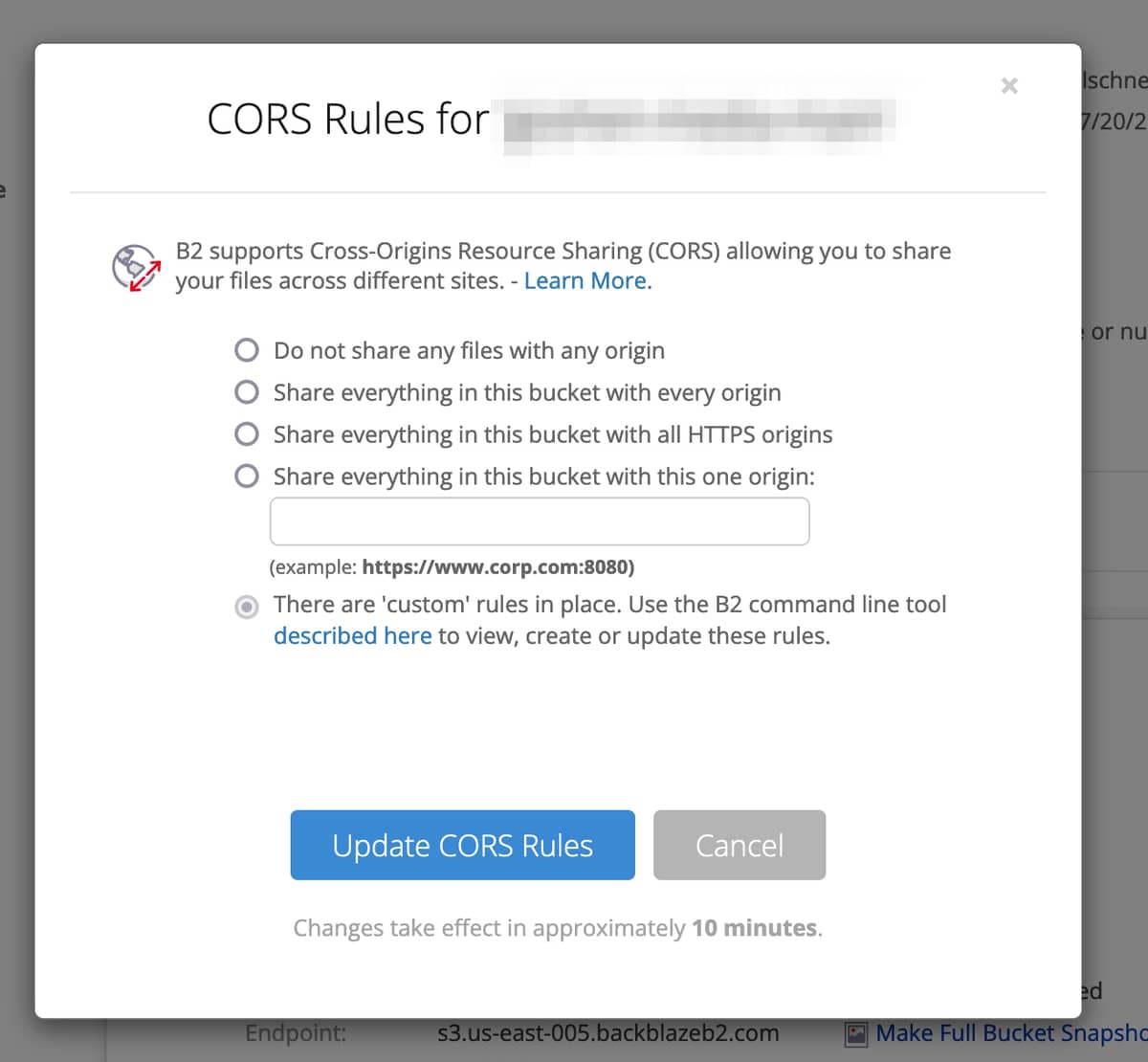

- Use the b2 command line to update the CORS rules.

```sh
b2 update-bucket --corsRules '[
  {
    "corsRuleName": "downloadFromAnyOriginWithUpload",
    "allowedOrigins": ["*"],
    "allowedHeaders": ["*"],
    "allowedOperations": ["s3_put", "s3_post", "s3_head", "s3_get"],
    "maxAgeSeconds": 3600
  }
]' your_bucket_name allPrivate
```

```elixir
def presigned_upload(opts) do
  key = Keyword.fetch!(opts, :key)
  # max_file_size and content_type are computed here but are not part of the signature below.
  max_file_size = opts[:max_file_size] || 10_000_000
  expires_in = opts[:expires_in] || 7200
  content_type = MIME.from_path(key)
  uri = "https://s3.eu-central-003.backblazeb2.com/your_bucket_name/#{URI.encode(key)}"

  # b2_access_key_id/0 and b2_application_key/0 are assumed to be helpers
  # defined elsewhere (for example, reading from the app config).
  url =
    :aws_signature.sign_v4_query_params(
      b2_access_key_id(),
      b2_application_key(),
      "eu-central-003",
      "S3",
      :calendar.universal_time(),
      "PUT",
      uri,
      ttl: expires_in,
      uri_encode_path: false,
      body_digest: "UNSIGNED-PAYLOAD"
    )

  {:ok, url}
end
```

- Use `PUT` in the JS XHR upload.

```js
const formData = new FormData()
const {url} = entry.meta
formData.append("file", entry.file)

const xhr = new XMLHttpRequest()
onViewError(() => xhr.abort())

xhr.onload = () => {
  xhr.status >= 200 && xhr.status < 300 ? entry.progress(100) : entry.error()
}
xhr.onerror = () => entry.error()

xhr.upload.addEventListener("progress", (event) => {
  console.log(event)
  if (event.lengthComputable) {
    let percent = Math.round((event.loaded / event.total) * 100)
    if (percent < 100) { entry.progress(percent) }
  }
})

xhr.open("PUT", url, true)
xhr.send(formData)
```
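One possible explanation for the corruption, offered as an assumption rather than something verified in this thread: an S3-style `PUT` stores the request body byte for byte, and sending a `FormData` object makes the browser wrap the file in a `multipart/form-data` envelope, so the stored object would include the multipart boundaries. The sketch below shows the alternative of sending the file itself as the body. `buildRawPut` is a hypothetical helper name, not part of LiveView or any library.

```typescript
// Hypothetical helper: describes a PUT whose body is the raw file bytes,
// with no multipart/form-data wrapper around them.
export function buildRawPut(file: Blob): {
  method: "PUT";
  body: Blob;
  headers: Record<string, string>;
} {
  return {
    method: "PUT",
    body: file,
    headers: {
      // Fall back to a generic type when the browser could not detect one.
      "Content-Type": file.type || "application/octet-stream",
    },
  };
}
```

With the XHR code above, the equivalent change would be `xhr.send(entry.file)` instead of `xhr.send(formData)`.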

The presigned `PUT` approach did work for uploading from LiveView but, as I said, the file itself in the storage bucket was corrupted. I gave up on this method after trying other permutations and combinations with different signing libraries like ex_aws; all of them uploaded fine but had the same file corruption issue in the bucket. Ultimately I rolled my own signing solution and now use the B2 native APIs with Cloudflare Workers (which I needed anyway to take advantage of the unmetered download bandwidth from the Bandwidth Alliance between Backblaze and Cloudflare). This is what I’m doing currently.

- Have a shared secret key (on the Phoenix app and the Cloudflare Worker) used for signing.

- Use custom JSON as the message, carrying the details that need to be verified for the upload.

- Sign it using the HMAC algorithm.

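```elixir
def presigned_upload_url(opts) do
  key = Keyword.fetch!(opts, :key)
  # Assumption: the caller passes the uploader's id in opts.
  uid = opts[:uid]
  secret = "shared_secret"

  expires_at =
    DateTime.utc_now()
    |> DateTime.add(2, :hour)
    |> DateTime.to_iso8601()

  message =
    Jason.encode!(%{
      "uid" => uid,
      "file" => key,
      "exp" => expires_at
      # add more details like content size etc which you can verify during upload
    })

  signature = :crypto.mac(:hmac, :sha256, secret, message) |> Base.encode64()

  # Note: standard Base64 output can contain "/" and "+", which are not path-safe.
  # Base.url_encode64/1 avoids that, but the worker would then have to decode
  # the URL-safe alphabet instead of calling atob directly.
  path = "#{signature}|#{message}" |> Base.encode64()

  {:ok, "https://your_cloudflare_workers_location/file/#{path}"}
end
```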
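To make the token layout concrete, here is a minimal TypeScript sketch of the same scheme using Node's built-in `crypto` module: the token is `base64(base64(hmac) + "|" + json)`. The names `makeToken` and `readToken` are hypothetical, and this is a sketch of the format described above rather than the code running in the worker.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Build a token with the same layout as the Elixir function:
// base64( base64(HMAC-SHA256(message)) + "|" + message )
export function makeToken(secret: string, claims: Record<string, unknown>): string {
  const message = JSON.stringify(claims);
  const signature = createHmac("sha256", secret).update(message).digest("base64");
  return Buffer.from(`${signature}|${message}`, "utf8").toString("base64");
}

// Returns the claims when the signature matches, otherwise null.
export function readToken(secret: string, token: string): Record<string, unknown> | null {
  const decoded = Buffer.from(token, "base64").toString("utf8");
  // Base64 never contains "|", so the first "|" always ends the signature.
  const cut = decoded.indexOf("|");
  if (cut < 0) return null;
  const signature = decoded.slice(0, cut);
  const message = decoded.slice(cut + 1);
  const expected = createHmac("sha256", secret).update(message).digest();
  const given = Buffer.from(signature, "base64");
  // timingSafeEqual throws on length mismatch, so check the length first.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) {
    return null;
  }
  return JSON.parse(message);
}
```

Cutting at the first `|` rather than splitting on every `|` keeps file names that contain a pipe character intact.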

- On the Cloudflare Worker side:
  - Receive the request and verify the signature using the same secret key.
  - If the signature is verified, proceed with the upload/download using the B2 native API.
  - Save the B2 native API authorization response in Cloudflare KV for a day to save cost.
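The KV caching step could look roughly like the sketch below. It assumes a KV binding with the usual `get`/`put` methods and takes the actual authorization call as a parameter; `cachedB2Auth`, `B2Auth`, and the key name are hypothetical, and a real authorization response has more fields than shown. Since B2 authorization tokens are valid for at most 24 hours, a TTL slightly under a day may be the safer choice.

```typescript
// Minimal subset of the Workers KV binding used here.
interface KVLike {
  get(key: string): Promise<string | null>;
  put(key: string, value: string, options?: { expirationTtl?: number }): Promise<void>;
}

// Hypothetical, trimmed-down shape of the authorization response.
export type B2Auth = { apiUrl: string; authorizationToken: string };

const B2_AUTH_KV_KEY = "b2:auth"; // hypothetical key name
const ONE_DAY_SECONDS = 60 * 60 * 24;

// Return the cached authorization if present; otherwise authorize once
// and let KV expire the entry after a day.
export async function cachedB2Auth(
  kv: KVLike,
  authorize: () => Promise<B2Auth>
): Promise<B2Auth> {
  const cached = await kv.get(B2_AUTH_KV_KEY);
  if (cached) return JSON.parse(cached) as B2Auth;

  const fresh = await authorize();
  await kv.put(B2_AUTH_KV_KEY, JSON.stringify(fresh), {
    expirationTtl: ONE_DAY_SECONDS,
  });
  return fresh;
}
```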

```ts
// signingKey.ts
export default async function signingKey(signingSecret: string) {
  return await crypto.subtle.importKey(
    "raw",
    new TextEncoder().encode(signingSecret),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["sign", "verify"]
  );
}
```

```ts
// verifySignature.ts
export default async function verifySignature(
  signingKey: CryptoKey,
  signature: string,
  message: string
) {
  // The signature arrives Base64-encoded; turn it back into raw bytes.
  const sigBuf = Uint8Array.from(atob(signature), (c) => c.charCodeAt(0));

  return crypto.subtle.verify(
    "HMAC",
    signingKey,
    sigBuf,
    new TextEncoder().encode(message)
  );
}
```

```ts
// formatPayload.ts
import { mapValues } from "lodash";

export type FormattedData = {
  uid: string | null;
  exp: Date | null;
  file: string;
  cdn: boolean;
};

export type FormattedPayloadResponse = {
  signature: string;
  message: string;
  data: FormattedData;
};

const DELIMITER = "|";

export default function formatPayload(
  payloadBase64: string
): FormattedPayloadResponse {
  const decodedPayload = atob(payloadBase64);

  // Cut at the first delimiter only: split(DELIMITER, 2) would silently drop
  // everything after a second "|" inside the JSON message.
  const cut = decodedPayload.indexOf(DELIMITER);
  if (cut < 0) {
    throw new Error("INVALID_PAYLOAD");
  }
  const signature = decodedPayload.slice(0, cut);
  const message = decodedPayload.slice(cut + 1);
  const data = formatData(JSON.parse(message));

  return { signature, message, data };
}

const DATA_FORMATTERS = {
  uid: (val: string) => val ?? null,
  exp: (val: string) => (val ? new Date(val) : null),
  file: (val: string) => val ?? null,
  cdn: (val: string | number) => (val === "0" || val === 0 ? false : !!val),
};

function formatData(data: Record<string, string>) {
  return mapValues<typeof DATA_FORMATTERS, any>(DATA_FORMATTERS, (fn, key) =>
    fn(data[key])
  );
}
```

```ts
// isValidPayload.ts
import { get } from "lodash";
import { FormattedData, FormattedPayloadResponse } from "./formatPayload";

export default function isValidPayload(
  formattedPayload: FormattedPayloadResponse
) {
  const signature = get(formattedPayload, "signature");
  const message = get(formattedPayload, "message");
  const data = get(formattedPayload, "data");

  return !!signature && !!message && isValidData(data);
}

function isValidData(data: FormattedData) {
  const filePath = get(data, "file");
  const expiresAt = get(data, "exp");
  const isCdn = get(data, "cdn");

  // Guard against a missing file path so split() cannot throw.
  const filePathParts = (filePath ?? "").split("/");
  const filePathValidForCdn = isCdn ? filePathParts[1] === "public" : true;

  return (
    !!filePath &&
    filePathValidForCdn &&
    (!isCdn ? isExpiryDateValid(expiresAt) : true)
  );
}

function isExpiryDateValid(expiresAt: Date | null) {
  return !!expiresAt && !isNaN(+expiresAt) && expiresAt.valueOf() >= Date.now();
}
```

```ts
// use something like Hono https://hono.dev/ to run a lightweight web server on the Cloudflare workers
// middleware/verifySignedRequest.ts
import { MiddlewareHandler } from "hono";
import { HTTPException } from "hono/http-exception";
import { Env } from "../env";
import { formatPayload, isValidPayload, signingKey, verifySignature } from "../utils";

export default function verifySignedRequest(
  pathParamName = "payload"
): MiddlewareHandler<Env> {
  return async (ctx, next) => {
    const payload = ctx.req.param(pathParamName);

    if (!payload) {
      throw new HTTPException(400, { message: "INVALID_REQUEST" });
    }

    try {
      const formattedPayload = formatPayload(payload);

      if (!isValidPayload(formattedPayload)) {
        throw new HTTPException(400, {
          message: "INVALID_REQUEST",
        });
      }

      const { signature, message, data } = formattedPayload;
      const key = await signingKey(ctx.env.SIGNING_SECRET);

      // verifySignature returns a Promise; without `await` the check would
      // always pass because a Promise object is truthy.
      if (!(await verifySignature(key, signature, message))) {
        throw new HTTPException(401, {
          message: "INVALID_SIGNATURE",
        });
      }

      ctx.set("signedRequestData", data); // use this later for downloads or uploads as per the need
    } catch (error) {
      // Keep the deliberate 400/401 responses; anything else is a malformed payload.
      if (error instanceof HTTPException) throw error;
      throw new HTTPException(400, { message: "INVALID_REQUEST" });
    }

    // request is valid, proceed and use for uploads
    await next();
  };
}
```

```ts
// for uploading
// verifySignedRequest() runs first so that "signedRequestData" is set on the context.
app.put("/file/:payload", verifySignedRequest(), async (c) => {
  const formData = await c.req.formData();
  const file = formData.get("file") as unknown as Blob;
  const signedRequestData = c.get("signedRequestData");

  const data = await b2.api.uploadFile({
    KV: c.env.KV,
    baseUrl: c.env.B2_API_URL,
    keyId: c.env.B2_KEY_ID,
    applicationKey: c.env.B2_APPLICATION_KEY,
    bucketId: c.env.B2_BUCKET_ID,
    file: file,
    fileName: signedRequestData.file,
  });

  return c.json(data); // or some formatted subset of data you want to expose
});
```
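The `b2.api.uploadFile` helper is not shown here, so as a rough illustration of what the final upload step involves, the sketch below builds the request for the B2 native `b2_upload_file` call from an upload URL and token obtained beforehand via `b2_get_upload_url`. `buildB2UploadRequest` and `B2UploadTarget` are hypothetical names, and this is not necessarily how the helper above is written.

```typescript
// Upload URL and token as returned by b2_get_upload_url.
export type B2UploadTarget = { uploadUrl: string; authorizationToken: string };

// Hypothetical helper: assembles the URL, method and headers for b2_upload_file.
// The file bytes themselves go in the request body.
export function buildB2UploadRequest(
  target: B2UploadTarget,
  fileName: string,
  contentType?: string,
  sha1Hex?: string
): { url: string; method: "POST"; headers: Record<string, string> } {
  return {
    url: target.uploadUrl,
    method: "POST",
    headers: {
      Authorization: target.authorizationToken,
      // File names are percent-encoded, keeping "/" as the folder separator.
      "X-Bz-File-Name": fileName.split("/").map(encodeURIComponent).join("/"),
      // "b2/x-auto" asks B2 to pick a content type from the file extension.
      "Content-Type": contentType || "b2/x-auto",
      // "do_not_verify" skips the checksum; passing a real SHA-1 is safer.
      "X-Bz-Content-Sha1": sha1Hex ?? "do_not_verify",
    },
  };
}
```

In a worker, the result could be passed to `fetch(req.url, { method: req.method, headers: req.headers, body: file })`.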

I have deployed it on Cloudflare Workers and am testing it for signed upload/download use cases from Phoenix LiveView, and it’s been working perfectly. The best part is that I can use Cloudflare’s built-in caching for downloads via the fetch API, which bypasses Backblaze’s servers entirely for most file access requests. All of this is still WIP on my end, but let me know if anyone wants a peek into my private repo; I can give you access for a while to see the complete Cloudflare Workers setup.