TL;DR

Is there a better, more efficient way to build a massive data structure (like a snapshot) in Elixir, or is this a job for another language?

In depth

On a Redis server, we store objects segmented by parameters. The base object is the institute, which has a base JSON object with parameters such as address, phone number, code, id, etc. Each institute also has nested keys holding data (JSON objects), such as institute:<code>:teachers, institute:<code>:executive and others.

A builder creates this massive structure for many institutes from a list of institute codes. It goes one by one: for each code it generates a base structure, then merges in the nested objects.

A small example:
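To make the description concrete, here is a minimal sketch of what the builder does. It is a simplification, not the real code: the module name, the `fetch` function, the base key `institute:<code>` and the exact list of sections are assumptions for illustration. `fetch` stands in for the Redis read plus JSON decoding, so the sketch runs without a Redis connection.

```elixir
defmodule SnapshotBuilder do
  # Nested sections stored under institute:<code>:<section>.
  # Only teachers and executive are named above; the real list is longer.
  @sections [:teachers, :executive]

  # `fetch` is a hypothetical 1-arity function: it takes a Redis key and
  # returns the decoded map stored there, or nil when the key is missing.
  def build(codes, fetch) do
    Map.new(codes, fn code -> {code, build_institute(code, fetch)} end)
  end

  # Builds one institute: base object first, then each nested object
  # is added under its section name.
  defp build_institute(code, fetch) do
    # Assumption: the base object lives under "institute:<code>".
    base = fetch.("institute:#{code}") || %{}

    Enum.reduce(@sections, base, fn section, acc ->
      Map.put(acc, section, fetch.("institute:#{code}:#{section}") || %{})
    end)
  end
end

# Usage with an in-memory map standing in for Redis:
store = %{
  "institute:institute-a" => %{id: 12, status: "active"},
  "institute:institute-a:teachers" => %{"john-doe" => %{age: 35}}
}

snapshot = SnapshotBuilder.build(["institute-a"], &Map.get(store, &1))
```

In this shape every institute costs one read per key, one after another, so the total time grows with the number of institutes.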

    %{
      "institute-a" => %{
        address: "Street A 453",
        id: 12,
        status: "active",
        online: true,
        location: %{
          country: "United States",
          state: "CA",
          city: "Los Angeles"
        },
        teachers: %{
          "john-doe" => %{
            age: 35,
            asignatures: ["Art", "Music"],
            id: 999
          },
          ...
        },
        courses: %{
          "A1" => %{
            students: %{
              "carol-doe" => %{
                age: 15,
                grades: %{
                  "music" => "A",
                  "arts" => "B+"
                },
                ....
              }
            }
          }
        }
      },
      ....
    }

Building this structure used to take an acceptable time (about 5 seconds), but more institutes have been added to the Redis server, and we now have 500.

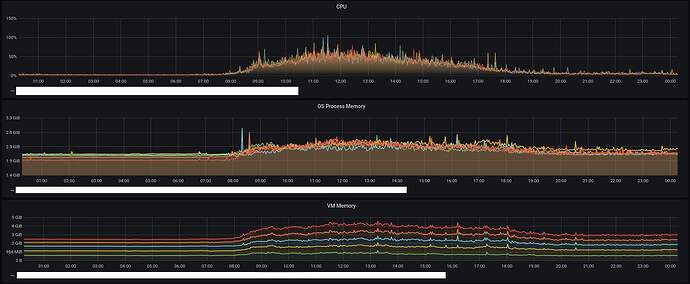

I have tried many approaches, but with this much data it takes around 15~20 seconds to return the data for a request or to send it as a response in a channel event.

I know Elixir is not well suited to this kind of processing. This setup is temporary, but I need ideas to make it more efficient while respecting the Elixir way of coding.

I am trying to keep this process clean, but in situations like this I think the data needs to be stored in memory so that concurrent reads are fast (the Redis data could serve as a backup). The problem is what happens when the data is updated, changed or deleted: the operation would have to be applied to Redis and to the cache in parallel (like syncing data).
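Here is a rough sketch of the in-memory idea, using an ETS table as the cache. It is only one possible reading of the "sync" step: it writes to Redis first and to the cache second, rather than truly in parallel. The module name and the `persist` and `remove` functions are hypothetical; they stand in for the real Redis write and delete calls and are expected to return `:ok` on success.

```elixir
defmodule InstituteCache do
  @table :institute_cache

  # Create the in-memory table once, e.g. when the application starts.
  # The table is owned by the process that calls init/0.
  def init do
    :ets.new(@table, [:named_table, :set, :public, read_concurrency: true])
    :ok
  end

  # Reads are served from memory only.
  def get(code) do
    case :ets.lookup(@table, code) do
      [{^code, institute}] -> {:ok, institute}
      [] -> :error
    end
  end

  # Writes go to Redis first (through the hypothetical `persist` function),
  # then to the cache, so the cache never holds data Redis rejected.
  def put(code, institute, persist) do
    with :ok <- persist.(code, institute) do
      :ets.insert(@table, {code, institute})
      :ok
    end
  end

  # Deletes follow the same order: Redis first, then the cache.
  def delete(code, remove) do
    with :ok <- remove.(code) do
      :ets.delete(@table, code)
      :ok
    end
  end
end
```

With something like this, a request would read a single institute from memory instead of rebuilding the whole snapshot, and only the institute that changed would be rewritten.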
