Hi everyone,

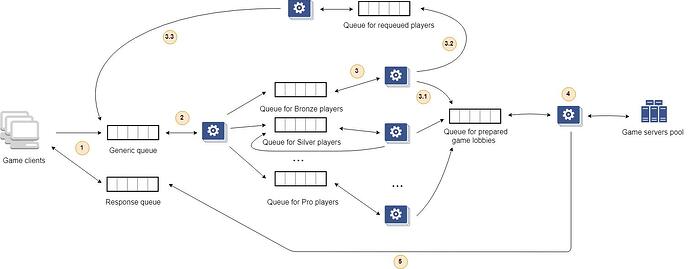

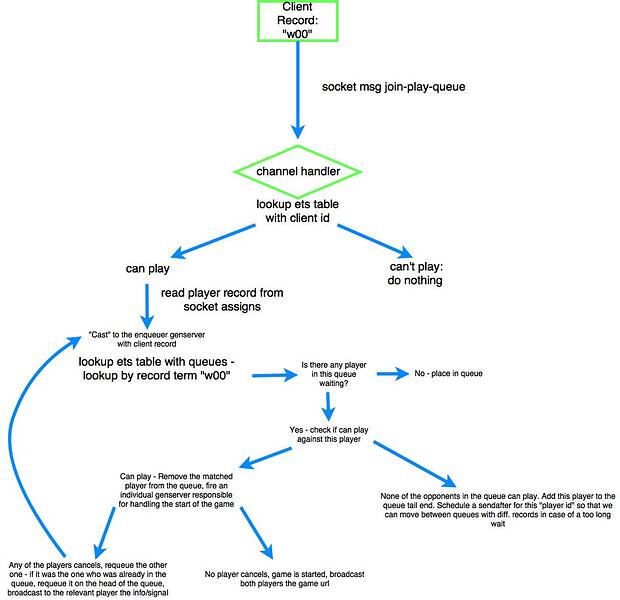

I'm thinking about building a pipeline with GenStage and RabbitMQ queues to process data about players who are searching for a game against opponents of similar skill (or rating). It is for one of my open source projects, but I'm slightly stuck at the design phase. The basic idea is pretty straightforward:

1. The client puts a message into a certain message queue as a request to find a game for them. Each of these messages specifies a `reply_to` field, which is used in the last stage to determine where to leave the final response.

2. And here starts the most interesting part of the whole topic: processing the data about the players. From some points of view it is a non-trivial task, but I've tried to solve it in the following way:

2.1. The client's message from the first step is written into the "generic queue", which stores all requests to find a game with opponents.

2.2. The publisher/consumer workers consume a message, query the database for additional information about the player, and put the message into the next queue, which depends on the player's rating or skill.

2.3. The next group of publishers/consumers consumes messages only from a certain queue and processes them (for example, a group of workers that processes only players with average skill):

2.3.1. Because each worker is linked to a specific queue, it extracts messages in sequence and tries to analyze each one. If the player described in the message body satisfies the matchmaking algorithm, that player is saved in the worker's memory and the extracted message is deleted. This process repeats until the worker has enough players to fill the game lobby. Once that happens, the list of players is transferred as a message to the next queue.

2.3.2. Otherwise, the message is published to a special queue, created for requeuing players into the generic queue.

2.3.3. A special type of worker re-publishes the messages coming from the publishing node in step 2.3.2 to the generic queue.

2.3.4. A worker extracts the list of players that was published to the next queue in step 2.3.1 and creates a new game server (or chooses an already existing one). After that, it broadcasts the server IP address, port, and connection credentials to each player in the list via the response queues that the clients specified in the first step.

2.3.5. Each client gets the response from its response queue and connects to the game.
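To make steps 2.2 through 2.3.2 a bit more concrete, here is a rough sketch in plain Python, with in-memory deques standing in for the RabbitMQ queues. The rating thresholds, the lobby size, the `max_spread` rule, and every name in it are assumptions made up for illustration; the real matchmaking algorithm would replace `matches`.

```python
from collections import deque

# Hypothetical values, chosen only for illustration.
LOBBY_SIZE = 2
BUCKETS = [("low", 0, 1200), ("average", 1200, 1800), ("high", 1800, 10_000)]


def bucket_for(rating):
    """Step 2.2: pick the skill queue for a player's rating."""
    for name, low, high in BUCKETS:
        if low <= rating < high:
            return name
    raise ValueError(f"no bucket for rating {rating}")


class LobbyWorker:
    """Steps 2.3.1 and 2.3.2: collect matching players, requeue the rest."""

    def __init__(self, max_spread):
        self.max_spread = max_spread  # allowed rating gap inside one lobby
        self.pending = []             # players held in the worker's memory

    def matches(self, player):
        # Placeholder rule: close enough to every player collected so far.
        return all(abs(player["rating"] - p["rating"]) <= self.max_spread
                   for p in self.pending)

    def handle(self, player, requeue, next_queue):
        if not self.matches(player):
            requeue.append(player)           # step 2.3.2
            return
        self.pending.append(player)          # step 2.3.1
        if len(self.pending) == LOBBY_SIZE:
            next_queue.append(self.pending)  # full lobby goes to the next queue
            self.pending = []


def run(requests):
    """Route requests by rating, then drain the 'average' queue with one worker."""
    skill_queues = {name: deque() for name, _, _ in BUCKETS}
    for player in requests:
        skill_queues[bucket_for(player["rating"])].append(player)
    requeue, lobbies = deque(), deque()
    worker = LobbyWorker(max_spread=100)
    while skill_queues["average"]:
        worker.handle(skill_queues["average"].popleft(), requeue, lobbies)
    return list(lobbies), list(requeue), worker.pending
```

In this sketch the partially filled lobby lives only in `worker.pending`, so if the worker crashes those players are lost unless their messages are acknowledged only after the lobby is published.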

The same flow is shown in the pictures at the top of this post.

3. Once the processing is done, the worker extracts the response and sends it to the response queue that was specified in the first step.
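The final delivery (step 2.3.4 and the last step) could look roughly like this. It is again plain Python with a dict of lists standing in for the per-client response queues; the field names (`id`, `reply_to`, `ip`, `port`, `token`) and the sample values are hypothetical.

```python
from collections import defaultdict


def broadcast_server(lobby, server, response_queues):
    """Deliver the same connection details to every player's reply_to queue."""
    for player in lobby:
        response_queues[player["reply_to"]].append(
            {"player_id": player["id"], **server}
        )


# Stands in for the per-client RabbitMQ response queues.
response_queues = defaultdict(list)

# A lobby as it would arrive from the previous queue (made-up data).
lobby = [
    {"id": "p1", "reply_to": "resp.p1"},
    {"id": "p2", "reply_to": "resp.p2"},
]
server = {"ip": "203.0.113.10", "port": 7777, "token": "example-token"}

broadcast_server(lobby, server, response_queues)
```

The point is only that `reply_to` has to travel with the player through every stage, otherwise the last worker has no way to know where to publish the result.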

I have a couple of questions that keep coming up while I'm designing it:

- Is it a good idea to build it this way? Or would it be better to go another way and split it up into small applications?

- It would be great to be able to scale it up at runtime when necessary, because we don't actually know how many requests will arrive for processing concurrently. However, would it be a good idea to configure and use GenStage's back-pressure? Or would that be overkill?

- How should I deal with the need to store a list of players in the workers while collecting them into one group, before putting them into one game lobby?
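For context on the back-pressure question, this is the idea as I understand it, reduced to a toy Python model: the consumer asks for a bounded number of events and the producer never sends more than was asked for. It is not the GenStage API; the class names, `ask`, and `step` are invented for this sketch, and `max_demand` is borrowed only as a name.

```python
from collections import deque


class Producer:
    def __init__(self, events):
        self.buffer = deque(events)

    def ask(self, demand):
        """Hand out at most `demand` events, never more."""
        count = min(demand, len(self.buffer))
        return [self.buffer.popleft() for _ in range(count)]


class Consumer:
    def __init__(self, producer, max_demand):
        self.producer = producer
        self.max_demand = max_demand  # upper bound on events in flight
        self.processed = []

    def step(self):
        """Request one batch, process it, and report how many events arrived."""
        batch = self.producer.ask(self.max_demand)
        self.processed.extend(batch)
        return len(batch)


producer = Producer(range(10))
consumer = Consumer(producer, max_demand=4)

batch_sizes = []
while (size := consumer.step()) > 0:
    batch_sizes.append(size)
```

With ten events and a demand of four, the consumer receives batches of 4, 4, and 2, so a slow matchmaking worker would never hold more than four unprocessed requests at a time.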