Hey folks!

Given an Excel column name (A, B, C, D…AA, AB, AC,… AAA…, etc.). Return a corresponding integer value (A=0, B=1,… AA=26…), etc.

Can you propose a walk-through, not a complete solution, to determine the correct way to implement it?

Thank you

dli

December 11, 2024, 2:52pm

2

A little pointer if you want to pattern-match from left to right:

def column_to_int(<<char, rest::binary>>, acc)

char is an ASCII integer (A = 65, Z = 90).

dli

December 11, 2024, 3:43pm

4

Yeah, this is the accumulator. You’re not wrong about the power of 26

You’d kick off the recursion like this: column_to_int("ABZ", 0)def column_to_int(str), do: column_to_int(str, 0)

Do I need to have a Map of mappings: %{“column_name” => coulumn_number} for all the 26 letters then?

dli

December 12, 2024, 8:23am

6

No, the accumulator should be the rolling sum as integer. Once added to the sum, you “forget” about the current character and move on to the next one.

Let me know if you want the full solution!

1 Like

I’d prefer to understand the algorithm itself. So if possible, put the steps to follow so that I can figure out which functions to use. Thank you

I seem to start understanding now why and how the power of 26 should be used.

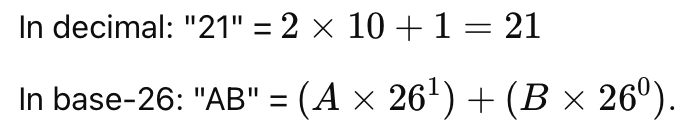

It should be presented like this, I think if we compare it to a decimal system:

Now we need to translate it to Elixir code

I have this initial solution that works for some columns but fails for others

def run(column \\ "ABD") do

letters =

column_name_length(column)

column

|> String.to_charlist()

|> Enum.with_index()

|> Enum.reduce(0, fn {char_code, index}, acc ->

acc +

(char_code - 65) * Integer.pow(26, letters - index - 1)

end)

end

defp column_name_length(column) do

column

|> String.to_charlist()

|> length()

end

It works for “ABD” and returns the correct result of 29. But it fails for “AB” and returns 1 instead of 27.

I didn’t use the recursion yet, just trying to find an ugly solution.

dli

December 12, 2024, 11:09pm

10

|> Enum.reduce(0, fn {char_code, index}, acc ->

acc +

(char_code - 65) * Integer.pow(26, letters - index - 1)

end)

Each iteration adds Integer.pow to the previous result. This alone makes the result grow way too fast.

Instead, in Enum.reduce, multiply acc by 26, then add the letter’s index in the alphabet + 1. You don’t need Enum.with_index.

From the result, you need to subtract 1 to make it zero-based.

Example: Column AGH

A → acc = 0 → 0 * 26 + 1 → return 1

G → acc = 1 → 1 * 26 + 7 → return 33

H → acc = 33 → 33 * 26 + 8 → return 866

Result = 866 - 1 → 865

This works because

((0 * 26 + 1) * 26 + 7) * 26

= 1 * 26^2 + 7 * 26^1 + 8 * 26^0

= 33 * 26 + 8

The recursive version does exactly the same but with pattern matching instead of String.to_charlist.

Hmm, I tried to implement a recursion and follow the the formulas you suggested as follows, but still miserably failed:

def column_to_int(<<char, rest::binary>>, acc) when rest == "" do

IO.puts("Last char: #{char}, acc: #{acc}")

acc

end

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = (char - 65) * 26 + 1

column_to_int(rest, acc)

end

Here is the output:

char: 65, rest: GH, acc: 0

char: 71, rest: H, acc: 1

Last char: 72, acc: 157

157

Still stuck with it…

dli

December 14, 2024, 9:37pm

12

Instead, in Enum.reduce , multiply acc by 26, then add the letter’s index in the alphabet + 1. You don’t need Enum.with_index .

Did the Enum.reduce version work?

In the recursive version, you are multiplying the current character int by 26, which never changes the power of the number.

Multiply the accumulator instead!

belgoros:

def column_to_int(<<char, rest::binary>>, acc) when rest == "" do

IO.puts("Last char: #{char}, acc: #{acc}")

acc

end

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = (char - 65) * 26 + 1

column_to_int(rest, acc)

end

OK, I got the first implementation using reduce working now:

def run(column \\ "A") do

result =

column

|> String.to_charlist()

|> Enum.reduce(0, fn char_code, acc ->

acc * 26 + (char_code - 65) + 1

end)

result - 1

end

Yay

def column_to_int(<<char, rest::binary>>, acc) when rest == "" do

IO.puts("Last char: #{char}, acc: #{acc}")

acc

end

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = acc * 26 + 1

column_to_int(rest, acc)

end

Output:

iex(2)> ExcelColumn.column_to_int("A", 0)

Last char: 65, acc: 0

0

iex(3)> ExcelColumn.column_to_int("B", 0)

Last char: 66, acc: 0

0

dli

December 15, 2024, 12:19pm

14

Look closely, there is an important difference:

Enum.reduce(0, fn char_code, acc ->

acc * 26 + (char_code - 65) + 1

end)

vs.

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = acc * 26 + 1

column_to_int(rest, acc)

end

You literally need to copy acc * 26 + (char_code - 65) + 1 over from the Enum version

Still fails with this change:

def column_to_int(<<char, rest::binary>>, acc) when rest == "" do

IO.puts("Last char: #{char}, acc: #{acc}")

acc

end

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = acc * 26 + (char - 65) + 1

column_to_int(rest, acc)

end

Output

iex(1)> alias ElixirDraft.ExcelColumn

ElixirDraft.ExcelColumn

iex(2)> ExcelColumn.column_to_int("A", 0)

Last char: 65, acc: 0

0

iex(3)> ExcelColumn.column_to_int("C", 0)

Last char: 67, acc: 0

0

iex(4)> iex(4)> ExcelColumn.column_to_int("AGH", 0)

char: 65, rest: GH, acc: 0

char: 71, rest: H, acc: 1

Last char: 72, acc: 33

33

Ah, it seems I got it with recursion too:

# A → acc = 0 → 0 * 26 + 1 → return 1

# G → acc = 1 → 1 * 26 + 7 → return 33

# H → acc = 33 → 33 * 26 + 8 → return 866

# Result = 866 - 1 → 865

def column_to_int(<<char, rest::binary>>, acc) when rest == "" do

IO.puts("Last char: #{char}, acc: #{acc}")

acc * 26 + (char - 65)

end

def column_to_int(<<char, rest::binary>>, acc) do

IO.puts("char: #{char}, rest: #{rest}, acc: #{acc}")

acc = acc * 26 + (char - 65) + 1

column_to_int(rest, acc)

end

Correct, @dli ?

dli

December 15, 2024, 9:31pm

17

Or you do it like this:

def column_to_int(str), do: column_to_int(str, 0)

defp column_to_int(<<>>, acc), do: acc - 1

defp column_to_int(<<char, rest::binary>>, acc) do

column_to_int(rest, acc * 26 + (char - ?A) + 1)

end

A few notes:

<<char, rest::binary>> = "A" creates these bindings: char = "A", rest = "" (try this in iex / command line)<<char, rest::binary>> = "" does not match, but <<>> = "" matches (<<>> is an empty bitstring, you could substitute it with "")?A returns the codepoint of “A” which is 65 (docs ), avoiding the “magic number” of 65The solution above also handles empty strings, returning 0

Only column_to_int/1 is public, the recursive implementation is defined as private function. The user does not need to care about the accumulator or its initial value.

Hope this helped! Enjoy Elixir, there are lots of quirks that are initially weird but make a lot of sense in the long term

Yay