I think I've found the problem, and I strongly believe it's related to Phoenix hot reloads.

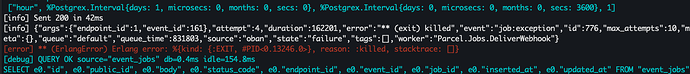

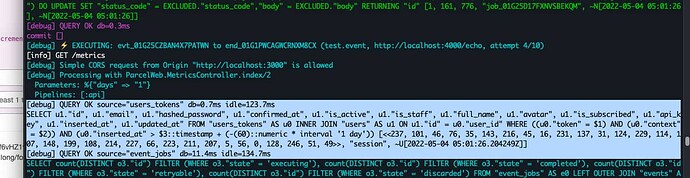

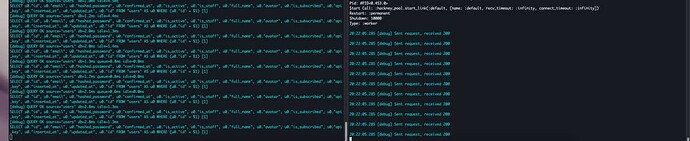

I came back after sleep to a long-running dev session and triggered two consecutive hot reloads in the dev server. Jobs started getting killed, and thanks to @cmo’s logging snippet, this error started showing up:

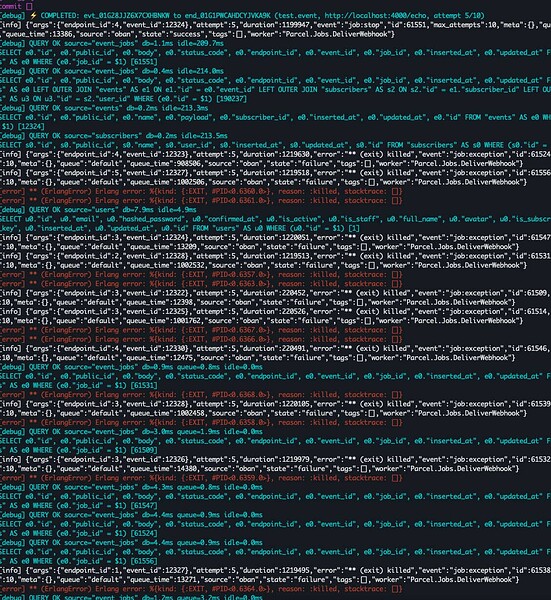

```
[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19581.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19582.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19584.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19583.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19586.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19592.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19587.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19574.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19585.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19575.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19577.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19591.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19588.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19578.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19589.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[error] Child :undefined of Supervisor {Oban.Registry, {Oban, {:foreman, "default"}}} terminated
** (exit) killed
Pid: #PID<0.19590.7>
Start Call: Task.Supervised.start_link/?
Restart: :temporary
Shutdown: 5000
Type: :worker

[info] {"args":{"endpoint_id":2,"event_id":25439},"attempt":5,"duration":243090,"error":"** (exit) killed","event":"job:exception","id":127098,"max_attempts":10,"meta":{},"queue":"default","queue_time":2513829,"source":"oban","state":"failure","tags":[],"worker":"Parcel.Jobs.Deliver"}
```

I’m still working on a reliable way to reproduce it, but this is a good step: there’s finally a trace.

I’ll file an issue in @sorentwo’s Oban repo once I can reproduce it reliably.