Ok great I’ll experiment with this and with your vm.args reply right now.

Hey just a few clarifications.

For all discussion below I’m using n1-highcpu-64 which is defined as:

- “High-CPU machine type with 64 vCPUs and 57.6 GB of memory.”

- “For the n1 series of machine types, a vCPU is implemented as a single hardware hyper-thread”

- I notice you did

+S 128with6400mbut above you used+S 24with12000m. Is it supposed to be1000 * # of cores / 2or100 * # of cores / 2 - I’m a little confused on the teriminology of “cores”. My server has 64 vCPU from hyperthreading. I assumed that means I have 64 cores, 64 vCPUs, and 32 “actual” hardware CPUs. Is that correct?

- You mention leaving a little extra space for KubeDNS. Do I set those limits for KubeDNS by limiting the resources for my app? Or is there a way to set it ‘directly’? Can I have you confirm these numbers for sanity on a single node specified above?

number of nodes

1 n1-highcpu-64

number of pods

1

imaginary number of CPUs allocated to KubeDNS

2

vm.args

+S 124

pod spec (not sure on multiplier)

limits:

cpu: 62000m

requests:

cpu: 62000m

Edit cpu: 62000m just defaults to cpu: "62" in GKE so I’m sure that’s right. Now my only question… do I allow more CPU’s for KubeDNS by doing it this way or do I specify explicitly for KubeDNS elsewhere and leave this at "64" instead of “62”`

Those are the settings I used and it didn’t seem to do anything. I’ve confirmed -S 126 is being passed to the command line, I made sure the YAML is set with "62" for both restrictions for the pod.

It’s really struggling with 40K/min (90% failure rate). So this appears to have 2x the performance, but in my above example with multiple apps I had almost 8-10x the performance, so I’m assuming something is still blocking

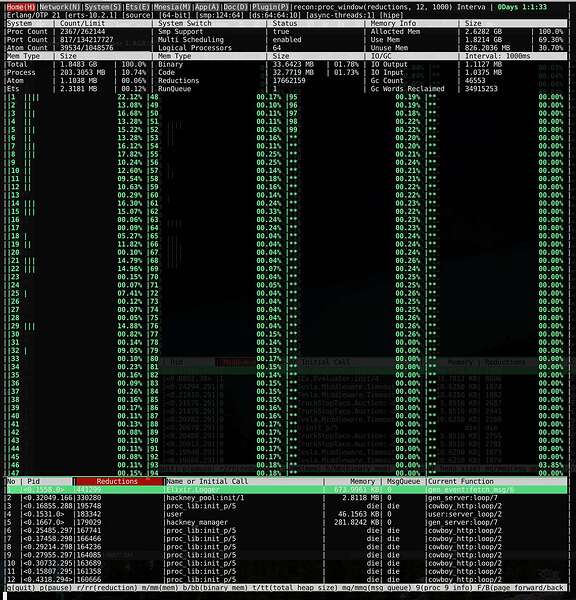

I do notice that :observer_cli now has a million more green bars (I’m assuming its showing schedulers). It does stop at 99 though, and in the system section it says 126 schedulers set, 64 online. not sure what that means… whether its a limitation of the library or if some of these actually arent running

Here’s what it looks like when theres a 98% timeout/failure rate. Sorted by reductions as well as MsgQ

bash-4.4# netstat -atn | grep TIME_WAIT | wc -l

10101

Ran it again: lol

28568

It hovers around ~1700 when all things are working properly, give or take +/-200

I’ll watch it to see when something goes wrong

TIMEOUT count seems to hover at about ~4% of incoming requests. when things go wrong its like 40-50% of incomign requests.

Being naive as possible, also because I do not have experience in this kind of load. I would suggest to take a look at the cloud provider, when you had more instances it behaved better, when switched to Google Cloud it also got better, the VM has enough resources to work I would say since nothing is maxed out, so it might be under the outgoing sockets and connections. One thing that I looked is the quotas page for Google Cloud AppEngine and seems that they cap at 19.200 outgoing requests/min. The K8s Engine quota page does not have enough information, only about containers, pods limits.

Very cool I will check this out. Just to be clear though, in my original post I mentioned that I tested 1 app instance on 1 very large server, and it performed poorly, and I tested 12 app instances on the very same server (I didn’t scale instances).

With that said, maybe somehow sockets get allocated to individual apps or something? I’m not sure.

How can I see current socket wait time or the currently used sockets? Is there a linux command or tool I can run

I just noticed thats for Google App ENgine which i’m not using

netstat -tpan (writing from head no access to man pages so verify the flags please) will show established connections. There will be a SendQ and RecvQ column there.

you can also run dmesg maybe the conntrack table is overflowing. There should be a message in there if this is happening

No idea if those numbers are fine or not fine. There’s an incredibly detailed TIME_WAIT write up here:

The tldr you could try is checking if net.ipv4.tcp_tw_reuse is set and if it’s not enabling it and re-running your tests.

I’m still not sure this is the actual problem but it’s worth trying to see if it makes any difference imho

It looks like the netststat you have installed is from BusyBox. Perhaps it would help to install the full version?

Right I mean the main thing here is that your app is barely doing any work.

Tell us more about your app. You’re using buoy right, are you adjusting the pool size to deal with the fact that you’ve got a ton of CPUs to work with or are you just sticking with the default? Are you using any other pools of workers?

When you say you have a 98% timeout, what do you mean exactly. Your HTTP requests to clients timeout? incoming requests timeout? a pool check out times out?

The way to go from here would be to trace a particular process that is making an HTTP call and figure out what takes so long.

Here was the end of the output. Does this look like what you’re saying? Someone else mentioned conntrack actually but I wasnt sure how to ‘check’ that

(keep scrolling in this window)

[ 2662.620374] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 2662.626974] cbr0: port 7(veth0964a801) entered blocking state

[ 2662.632982] cbr0: port 7(veth0964a801) entered disabled state

[ 2662.639339] device veth0964a801 entered promiscuous mode

[ 2662.644872] cbr0: port 7(veth0964a801) entered blocking state

[ 2662.650805] cbr0: port 7(veth0964a801) entered forwarding state

[ 2662.729439] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 2662.751043] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 2662.757881] cbr0: port 8(vethaa37aac0) entered blocking state

[ 2662.763892] cbr0: port 8(vethaa37aac0) entered disabled state

[ 2662.770016] device vethaa37aac0 entered promiscuous mode

[ 2662.775686] cbr0: port 8(vethaa37aac0) entered blocking state

[ 2662.781601] cbr0: port 8(vethaa37aac0) entered forwarding state

[ 2663.802062] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 2663.825977] IPv6: ADDRCONF(NETDEV_UP): vethb8127507: link is not ready

[ 2663.832700] IPv6: ADDRCONF(NETDEV_CHANGE): vethb8127507: link becomes ready

[ 2663.839946] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 2663.846701] cbr0: port 9(vethb8127507) entered blocking state

[ 2663.852696] cbr0: port 9(vethb8127507) entered disabled state

[ 2663.858733] device vethb8127507 entered promiscuous mode

[ 2663.864271] cbr0: port 9(vethb8127507) entered blocking state

[ 2663.870211] cbr0: port 9(vethb8127507) entered forwarding state

[ 2670.307052] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 2670.346600] IPv6: ADDRCONF(NETDEV_UP): veth150f8503: link is not ready

[ 2670.353364] IPv6: ADDRCONF(NETDEV_CHANGE): veth150f8503: link becomes ready

[ 2670.360749] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 2670.367476] cbr0: port 10(veth150f8503) entered blocking state

[ 2670.373498] cbr0: port 10(veth150f8503) entered disabled state

[ 2670.379748] device veth150f8503 entered promiscuous mode

[ 2670.385304] cbr0: port 10(veth150f8503) entered blocking state

[ 2670.391448] cbr0: port 10(veth150f8503) entered forwarding state

[ 2714.633728] cbr0: port 7(veth0964a801) entered disabled state

[ 2714.654119] device veth0964a801 left promiscuous mode

[ 2714.659384] cbr0: port 7(veth0964a801) entered disabled state

[ 7432.196782] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 7432.229220] IPv6: ADDRCONF(NETDEV_UP): veth7b2524ef: link is not ready

[ 7432.235945] IPv6: ADDRCONF(NETDEV_CHANGE): veth7b2524ef: link becomes ready

[ 7432.243211] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 7432.249759] cbr0: port 7(veth7b2524ef) entered blocking state

[ 7432.255711] cbr0: port 7(veth7b2524ef) entered disabled state

[ 7432.261792] device veth7b2524ef entered promiscuous mode

[ 7432.267511] cbr0: port 7(veth7b2524ef) entered blocking state

[ 7432.273417] cbr0: port 7(veth7b2524ef) entered forwarding state

[ 7432.475401] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 7432.493870] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 7432.500441] cbr0: port 11(veth547ebf10) entered blocking state

[ 7432.506422] cbr0: port 11(veth547ebf10) entered disabled state

[ 7432.512487] device veth547ebf10 entered promiscuous mode

[ 7432.518162] cbr0: port 11(veth547ebf10) entered blocking state

[ 7432.524198] cbr0: port 11(veth547ebf10) entered forwarding state

[ 7432.621174] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 7432.639948] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 7432.646630] cbr0: port 12(veth6f18b83a) entered blocking state

[ 7432.652612] cbr0: port 12(veth6f18b83a) entered disabled state

[ 7432.658692] device veth6f18b83a entered promiscuous mode

[ 7432.664255] cbr0: port 12(veth6f18b83a) entered blocking state

[ 7432.670257] cbr0: port 12(veth6f18b83a) entered forwarding state

[ 7432.785612] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 7432.811095] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 7432.817671] cbr0: port 13(vethf3366897) entered blocking state

[ 7432.823648] cbr0: port 13(vethf3366897) entered disabled state

[ 7432.829896] device vethf3366897 entered promiscuous mode

[ 7432.835501] cbr0: port 13(vethf3366897) entered blocking state

[ 7432.841506] cbr0: port 13(vethf3366897) entered forwarding state

[ 7433.201972] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 7433.223922] IPv6: ADDRCONF(NETDEV_UP): veth7d9e56a6: link is not ready

[ 7433.230633] IPv6: ADDRCONF(NETDEV_CHANGE): veth7d9e56a6: link becomes ready

[ 7433.237878] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 7433.245270] cbr0: port 14(veth7d9e56a6) entered blocking state

[ 7433.251244] cbr0: port 14(veth7d9e56a6) entered disabled state

[ 7433.257363] device veth7d9e56a6 entered promiscuous mode

[ 7433.262982] cbr0: port 14(veth7d9e56a6) entered blocking state

[ 7433.268982] cbr0: port 14(veth7d9e56a6) entered forwarding state

[ 9897.983980] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 9898.008215] IPv6: ADDRCONF(NETDEV_UP): veth23ac2f9a: link is not ready

[ 9898.015187] IPv6: ADDRCONF(NETDEV_CHANGE): veth23ac2f9a: link becomes ready

[ 9898.022421] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 9898.029298] cbr0: port 15(veth23ac2f9a) entered blocking state

[ 9898.035290] cbr0: port 15(veth23ac2f9a) entered disabled state

[ 9898.041630] device veth23ac2f9a entered promiscuous mode

[ 9898.047208] cbr0: port 15(veth23ac2f9a) entered blocking state

[ 9898.053220] cbr0: port 15(veth23ac2f9a) entered forwarding state

[ 9898.159161] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 9898.186243] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 9898.193014] cbr0: port 16(vethc3fe8217) entered blocking state

[ 9898.199048] cbr0: port 16(vethc3fe8217) entered disabled state

[ 9898.205195] device vethc3fe8217 entered promiscuous mode

[ 9898.210711] cbr0: port 16(vethc3fe8217) entered blocking state

[ 9898.216708] cbr0: port 16(vethc3fe8217) entered forwarding state

[ 9898.344650] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 9898.369426] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 9898.375655] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 9898.405867] cbr0: port 17(veth70edf783) entered blocking state

[ 9898.411925] cbr0: port 17(veth70edf783) entered disabled state

[ 9898.418122] device veth70edf783 entered promiscuous mode

[ 9898.423641] cbr0: port 17(veth70edf783) entered blocking state

[ 9898.429667] cbr0: port 17(veth70edf783) entered forwarding state

[ 9898.436266] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[ 9898.442918] cbr0: port 18(veth87cf4512) entered blocking state

[ 9898.448945] cbr0: port 18(veth87cf4512) entered disabled state

[ 9898.455086] device veth87cf4512 entered promiscuous mode

[ 9898.460597] cbr0: port 18(veth87cf4512) entered blocking state

[ 9898.466595] cbr0: port 18(veth87cf4512) entered forwarding state

[11681.493470] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[11681.517451] IPv6: ADDRCONF(NETDEV_UP): vethe2bf268a: link is not ready

[11681.524305] IPv6: ADDRCONF(NETDEV_CHANGE): vethe2bf268a: link becomes ready

[11681.531563] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[11681.538223] cbr0: port 19(vethe2bf268a) entered blocking state

[11681.544247] cbr0: port 19(vethe2bf268a) entered disabled state

[11681.550479] device vethe2bf268a entered promiscuous mode

[11681.556141] cbr0: port 19(vethe2bf268a) entered blocking state

[11681.562172] cbr0: port 19(vethe2bf268a) entered forwarding state

[11681.689345] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[11681.720716] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[11681.727387] cbr0: port 20(veth449c2c98) entered blocking state

[11681.733577] cbr0: port 20(veth449c2c98) entered disabled state

[11681.739896] device veth449c2c98 entered promiscuous mode

[11681.745516] cbr0: port 20(veth449c2c98) entered blocking state

[11681.751533] cbr0: port 20(veth449c2c98) entered forwarding state

[62173.041954] Unsafe core_pattern used with fs.suid_dumpable=2.

[62173.041954] Pipe handler or fully qualified core dump path required.

[62173.041954] Set kernel.core_pattern before fs.suid_dumpable.

[62173.060073] Unsafe core_pattern used with fs.suid_dumpable=2.

[62173.060073] Pipe handler or fully qualified core dump path required.

[62173.060073] Set kernel.core_pattern before fs.suid_dumpable.

[62173.078426] Unsafe core_pattern used with fs.suid_dumpable=2.

[62173.078426] Pipe handler or fully qualified core dump path required.

[62173.078426] Set kernel.core_pattern before fs.suid_dumpable.

[62173.096337] Unsafe core_pattern used with fs.suid_dumpable=2.

[62173.096337] Pipe handler or fully qualified core dump path required.

[62173.096337] Set kernel.core_pattern before fs.suid_dumpable.

[62173.124340] ICMPv6: process `sysctl' is using deprecated sysctl (syscall) net.ipv6.neigh.eth0.base_reachable_time - use net.ipv6.neigh.eth0.base_reachable_time_ms instead

[62173.142378] nr_pdflush_threads exported in /proc is scheduled for removal

[77715.196260] cbr0: port 20(veth449c2c98) entered disabled state

[77715.230494] device veth449c2c98 left promiscuous mode

[77715.235830] cbr0: port 20(veth449c2c98) entered disabled state

[77715.288118] cbr0: port 12(veth6f18b83a) entered disabled state

[77715.313487] device veth6f18b83a left promiscuous mode

[77715.318697] cbr0: port 12(veth6f18b83a) entered disabled state

[77715.353644] cbr0: port 17(veth70edf783) entered disabled state

[77715.378371] device veth70edf783 left promiscuous mode

[77715.383581] cbr0: port 17(veth70edf783) entered disabled state

[77715.420776] cbr0: port 11(veth547ebf10) entered disabled state

[77715.445517] device veth547ebf10 left promiscuous mode

[77715.450864] cbr0: port 11(veth547ebf10) entered disabled state

[77715.492518] cbr0: port 15(veth23ac2f9a) entered disabled state

[77715.517305] device veth23ac2f9a left promiscuous mode

[77715.522547] cbr0: port 15(veth23ac2f9a) entered disabled state

[77715.562019] cbr0: port 16(vethc3fe8217) entered disabled state

[77715.587356] device vethc3fe8217 left promiscuous mode

[77715.592609] cbr0: port 16(vethc3fe8217) entered disabled state

[77715.633735] cbr0: port 18(veth87cf4512) entered disabled state

[77715.658554] device veth87cf4512 left promiscuous mode

[77715.663793] cbr0: port 18(veth87cf4512) entered disabled state

[77715.708461] cbr0: port 19(vethe2bf268a) entered disabled state

[77715.733713] device vethe2bf268a left promiscuous mode

[77715.738958] cbr0: port 19(vethe2bf268a) entered disabled state

[77715.773416] cbr0: port 14(veth7d9e56a6) entered disabled state

[77715.800367] device veth7d9e56a6 left promiscuous mode

[77715.805780] cbr0: port 14(veth7d9e56a6) entered disabled state

[77715.842600] cbr0: port 13(vethf3366897) entered disabled state

[77715.867793] device vethf3366897 left promiscuous mode

[77715.873018] cbr0: port 13(vethf3366897) entered disabled state

[77976.948345] cbr0: port 7(veth7b2524ef) entered disabled state

[77976.972153] device veth7b2524ef left promiscuous mode

[77976.977364] cbr0: port 7(veth7b2524ef) entered disabled state

[77977.009746] cbr0: port 3(veth8702f8e4) entered disabled state

[77977.033864] device veth8702f8e4 left promiscuous mode

[77977.039089] cbr0: port 3(veth8702f8e4) entered disabled state

[77977.935736] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[77977.954056] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[77977.960650] cbr0: port 3(veth5e616dfb) entered blocking state

[77977.966546] cbr0: port 3(veth5e616dfb) entered disabled state

[77977.972585] device veth5e616dfb entered promiscuous mode

[77977.978099] cbr0: port 3(veth5e616dfb) entered blocking state

[77977.984056] cbr0: port 3(veth5e616dfb) entered forwarding state

[78098.484391] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[78098.502406] IPv6: ADDRCONF(NETDEV_UP): veth1bead827: link is not ready

[78098.509304] IPv6: ADDRCONF(NETDEV_CHANGE): veth1bead827: link becomes ready

[78098.516567] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[78098.523113] cbr0: port 7(veth1bead827) entered blocking state

[78098.529029] cbr0: port 7(veth1bead827) entered disabled state

[78098.535027] device veth1bead827 entered promiscuous mode

[78098.540549] cbr0: port 7(veth1bead827) entered blocking state

[78098.546446] cbr0: port 7(veth1bead827) entered forwarding state

[78103.133064] cbr0: port 3(veth5e616dfb) entered disabled state

[78103.210045] device veth5e616dfb left promiscuous mode

[78103.215308] cbr0: port 3(veth5e616dfb) entered disabled state

[78243.844407] cbr0: port 7(veth1bead827) entered disabled state

[78243.934977] device veth1bead827 left promiscuous mode

[78243.940195] cbr0: port 7(veth1bead827) entered disabled state

[78254.501447] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[78254.515293] IPv6: ADDRCONF(NETDEV_UP): vethaf9ca1fe: link is not ready

[78254.521987] IPv6: ADDRCONF(NETDEV_CHANGE): vethaf9ca1fe: link becomes ready

[78254.529188] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[78254.535778] cbr0: port 3(vethaf9ca1fe) entered blocking state

[78254.541687] cbr0: port 3(vethaf9ca1fe) entered disabled state

[78254.547776] device vethaf9ca1fe entered promiscuous mode

[78254.553303] cbr0: port 3(vethaf9ca1fe) entered blocking state

[78254.559208] cbr0: port 3(vethaf9ca1fe) entered forwarding state

[82646.307066] cbr0: port 3(vethaf9ca1fe) entered disabled state

[82646.394249] device vethaf9ca1fe left promiscuous mode

[82646.399473] cbr0: port 3(vethaf9ca1fe) entered disabled state

[82648.550342] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[82648.569029] IPv6: ADDRCONF(NETDEV_UP): veth1a3a646f: link is not ready

[82648.575856] IPv6: ADDRCONF(NETDEV_CHANGE): veth1a3a646f: link becomes ready

[82648.583116] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[82648.589677] cbr0: port 3(veth1a3a646f) entered blocking state

[82648.595580] cbr0: port 3(veth1a3a646f) entered disabled state

[82648.601776] device veth1a3a646f entered promiscuous mode

[82648.607308] cbr0: port 3(veth1a3a646f) entered blocking state

[82648.613213] cbr0: port 3(veth1a3a646f) entered forwarding state

[85185.628038] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85185.660197] IPv6: ADDRCONF(NETDEV_UP): veth83a3a535: link is not ready

[85185.666903] IPv6: ADDRCONF(NETDEV_CHANGE): veth83a3a535: link becomes ready

[85185.674134] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85185.680739] cbr0: port 7(veth83a3a535) entered blocking state

[85185.686641] cbr0: port 7(veth83a3a535) entered disabled state

[85185.692673] device veth83a3a535 entered promiscuous mode

[85185.698213] cbr0: port 7(veth83a3a535) entered blocking state

[85185.704125] cbr0: port 7(veth83a3a535) entered forwarding state

[85420.676756] cbr0: port 3(veth1a3a646f) entered disabled state

[85420.750799] device veth1a3a646f left promiscuous mode

[85420.756151] cbr0: port 3(veth1a3a646f) entered disabled state

[85429.685663] cbr0: port 7(veth83a3a535) entered disabled state

[85429.758753] device veth83a3a535 left promiscuous mode

[85429.763987] cbr0: port 7(veth83a3a535) entered disabled state

[85455.051699] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85455.069365] IPv6: ADDRCONF(NETDEV_UP): vethd1c80a38: link is not ready

[85455.076074] IPv6: ADDRCONF(NETDEV_CHANGE): vethd1c80a38: link becomes ready

[85455.083300] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85455.089893] cbr0: port 3(vethd1c80a38) entered blocking state

[85455.095796] cbr0: port 3(vethd1c80a38) entered disabled state

[85455.101828] device vethd1c80a38 entered promiscuous mode

[85455.107464] cbr0: port 3(vethd1c80a38) entered blocking state

[85455.113485] cbr0: port 3(vethd1c80a38) entered forwarding state

[85455.124682] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85455.141302] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85455.147923] cbr0: port 7(vethea41f89d) entered blocking state

[85455.153833] cbr0: port 7(vethea41f89d) entered disabled state

[85455.159847] device vethea41f89d entered promiscuous mode

[85455.165355] cbr0: port 7(vethea41f89d) entered blocking state

[85455.171252] cbr0: port 7(vethea41f89d) entered forwarding state

[85455.379654] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85455.405937] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85455.412539] cbr0: port 11(veth63742689) entered blocking state

[85455.418532] cbr0: port 11(veth63742689) entered disabled state

[85455.424618] device veth63742689 entered promiscuous mode

[85455.430120] cbr0: port 11(veth63742689) entered blocking state

[85455.436103] cbr0: port 11(veth63742689) entered forwarding state

[85455.443096] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85455.460577] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85455.467110] cbr0: port 12(vethb6a26dde) entered blocking state

[85455.473092] cbr0: port 12(vethb6a26dde) entered disabled state

[85455.479157] device vethb6a26dde entered promiscuous mode

[85455.484647] cbr0: port 12(vethb6a26dde) entered blocking state

[85455.490639] cbr0: port 12(vethb6a26dde) entered forwarding state

[85515.855614] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85515.874094] IPv6: ADDRCONF(NETDEV_UP): vethba54f913: link is not ready

[85515.880924] IPv6: ADDRCONF(NETDEV_CHANGE): vethba54f913: link becomes ready

[85515.888150] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85515.894858] cbr0: port 13(vethba54f913) entered blocking state

[85515.901038] cbr0: port 13(vethba54f913) entered disabled state

[85515.907126] device vethba54f913 entered promiscuous mode

[85515.912647] cbr0: port 13(vethba54f913) entered blocking state

[85515.918658] cbr0: port 13(vethba54f913) entered forwarding state

[85515.982054] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85516.002248] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85516.008989] cbr0: port 14(veth70755caf) entered blocking state

[85516.015010] cbr0: port 14(veth70755caf) entered disabled state

[85516.021096] device veth70755caf entered promiscuous mode

[85516.026767] cbr0: port 14(veth70755caf) entered blocking state

[85516.032746] cbr0: port 14(veth70755caf) entered forwarding state

[85527.854682] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85527.876109] IPv6: ADDRCONF(NETDEV_UP): vethd9c95384: link is not ready

[85527.882839] IPv6: ADDRCONF(NETDEV_CHANGE): vethd9c95384: link becomes ready

[85527.890078] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85527.896773] cbr0: port 15(vethd9c95384) entered blocking state

[85527.902781] cbr0: port 15(vethd9c95384) entered disabled state

[85527.908943] device vethd9c95384 entered promiscuous mode

[85527.914571] cbr0: port 15(vethd9c95384) entered blocking state

[85527.920569] cbr0: port 15(vethd9c95384) entered forwarding state

[85527.989141] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85528.009121] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85528.015733] cbr0: port 16(veth409dedff) entered blocking state

[85528.021702] cbr0: port 16(veth409dedff) entered disabled state

[85528.027927] device veth409dedff entered promiscuous mode

[85528.033439] cbr0: port 16(veth409dedff) entered blocking state

[85528.039441] cbr0: port 16(veth409dedff) entered forwarding state

[85567.287370] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85567.309754] IPv6: ADDRCONF(NETDEV_UP): veth81d34932: link is not ready

[85567.316463] IPv6: ADDRCONF(NETDEV_CHANGE): veth81d34932: link becomes ready

[85567.323700] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85567.330308] cbr0: port 17(veth81d34932) entered blocking state

[85567.336304] cbr0: port 17(veth81d34932) entered disabled state

[85567.342399] device veth81d34932 entered promiscuous mode

[85567.347945] cbr0: port 17(veth81d34932) entered blocking state

[85567.353918] cbr0: port 17(veth81d34932) entered forwarding state

[85567.458168] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85567.476615] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85567.483422] cbr0: port 18(veth1416d4e3) entered blocking state

[85567.489460] cbr0: port 18(veth1416d4e3) entered disabled state

[85567.495591] device veth1416d4e3 entered promiscuous mode

[85567.501140] cbr0: port 18(veth1416d4e3) entered blocking state

[85567.507207] cbr0: port 18(veth1416d4e3) entered forwarding state

[85572.042900] cbr0: port 16(veth409dedff) entered disabled state

[85572.085916] device veth409dedff left promiscuous mode

[85572.091276] cbr0: port 16(veth409dedff) entered disabled state

[85579.340089] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85579.353476] IPv6: ADDRCONF(NETDEV_UP): veth4a563bb4: link is not ready

[85579.360171] IPv6: ADDRCONF(NETDEV_CHANGE): veth4a563bb4: link becomes ready

[85579.367448] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85579.374159] cbr0: port 16(veth4a563bb4) entered blocking state

[85579.380279] cbr0: port 16(veth4a563bb4) entered disabled state

[85579.386442] device veth4a563bb4 entered promiscuous mode

[85579.392018] cbr0: port 16(veth4a563bb4) entered blocking state

[85579.398015] cbr0: port 16(veth4a563bb4) entered forwarding state

[85579.483031] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85579.509794] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85579.516557] cbr0: port 19(veth9fad3455) entered blocking state

[85579.522551] cbr0: port 19(veth9fad3455) entered disabled state

[85579.528733] device veth9fad3455 entered promiscuous mode

[85579.534684] cbr0: port 19(veth9fad3455) entered blocking state

[85579.540717] cbr0: port 19(veth9fad3455) entered forwarding state

[85580.785189] cbr0: port 11(veth63742689) entered disabled state

[85580.819634] device veth63742689 left promiscuous mode

[85580.825014] cbr0: port 11(veth63742689) entered disabled state

[85584.113269] cbr0: port 14(veth70755caf) entered disabled state

[85584.145244] device veth70755caf left promiscuous mode

[85584.150518] cbr0: port 14(veth70755caf) entered disabled state

[85585.005013] cbr0: port 15(vethd9c95384) entered disabled state

[85585.038427] device vethd9c95384 left promiscuous mode

[85585.043655] cbr0: port 15(vethd9c95384) entered disabled state

[85592.476558] cbr0: port 7(vethea41f89d) entered disabled state

[85592.510953] device vethea41f89d left promiscuous mode

[85592.516252] cbr0: port 7(vethea41f89d) entered disabled state

[85593.257997] cbr0: port 12(vethb6a26dde) entered disabled state

[85593.291184] device vethb6a26dde left promiscuous mode

[85593.296568] cbr0: port 12(vethb6a26dde) entered disabled state

[85596.089384] cbr0: port 13(vethba54f913) entered disabled state

[85596.120660] device vethba54f913 left promiscuous mode

[85596.125875] cbr0: port 13(vethba54f913) entered disabled state

[85605.376063] cbr0: port 3(vethd1c80a38) entered disabled state

[85605.406789] device vethd1c80a38 left promiscuous mode

[85605.412012] cbr0: port 3(vethd1c80a38) entered disabled state

[85625.948416] cbr0: port 16(veth4a563bb4) entered disabled state

[85625.978481] device veth4a563bb4 left promiscuous mode

[85625.983694] cbr0: port 16(veth4a563bb4) entered disabled state

[85651.211850] cbr0: port 18(veth1416d4e3) entered disabled state

[85651.240260] device veth1416d4e3 left promiscuous mode

[85651.245535] cbr0: port 18(veth1416d4e3) entered disabled state

[85651.279956] cbr0: port 17(veth81d34932) entered disabled state

[85651.307969] device veth81d34932 left promiscuous mode

[85651.313175] cbr0: port 17(veth81d34932) entered disabled state

[85651.346612] cbr0: port 19(veth9fad3455) entered disabled state

[85651.374755] device veth9fad3455 left promiscuous mode

[85651.379995] cbr0: port 19(veth9fad3455) entered disabled state

[85719.720836] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85719.741119] IPv6: ADDRCONF(NETDEV_UP): veth311df964: link is not ready

[85719.747844] IPv6: ADDRCONF(NETDEV_CHANGE): veth311df964: link becomes ready

[85719.755071] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85719.761671] cbr0: port 3(veth311df964) entered blocking state

[85719.767563] cbr0: port 3(veth311df964) entered disabled state

[85719.773614] device veth311df964 entered promiscuous mode

[85719.779187] cbr0: port 3(veth311df964) entered blocking state

[85719.785083] cbr0: port 3(veth311df964) entered forwarding state

[85730.030240] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85730.053372] IPv6: ADDRCONF(NETDEV_UP): veth0e2dfc91: link is not ready

[85730.060100] IPv6: ADDRCONF(NETDEV_CHANGE): veth0e2dfc91: link becomes ready

[85730.067378] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85730.074058] cbr0: port 7(veth0e2dfc91) entered blocking state

[85730.079954] cbr0: port 7(veth0e2dfc91) entered disabled state

[85730.085941] device veth0e2dfc91 entered promiscuous mode

[85730.091436] cbr0: port 7(veth0e2dfc91) entered blocking state

[85730.097373] cbr0: port 7(veth0e2dfc91) entered forwarding state

[85730.310015] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85730.336443] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85730.343030] cbr0: port 11(vethd908b9de) entered blocking state

[85730.349021] cbr0: port 11(vethd908b9de) entered disabled state

[85730.355133] device vethd908b9de entered promiscuous mode

[85730.360698] cbr0: port 11(vethd908b9de) entered blocking state

[85730.366710] cbr0: port 11(vethd908b9de) entered forwarding state

[85730.940419] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85730.971317] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85730.977895] cbr0: port 12(vetha7203f1f) entered blocking state

[85730.983876] cbr0: port 12(vetha7203f1f) entered disabled state

[85730.989948] device vetha7203f1f entered promiscuous mode

[85730.995451] cbr0: port 12(vetha7203f1f) entered blocking state

[85731.001439] cbr0: port 12(vetha7203f1f) entered forwarding state

[85731.409134] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85731.433346] IPv6: ADDRCONF(NETDEV_UP): vetha8803811: link is not ready

[85731.440214] IPv6: ADDRCONF(NETDEV_CHANGE): vetha8803811: link becomes ready

[85731.447512] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85731.454120] cbr0: port 13(vetha8803811) entered blocking state

[85731.460099] cbr0: port 13(vetha8803811) entered disabled state

[85731.466329] device vetha8803811 entered promiscuous mode

[85731.471896] cbr0: port 13(vetha8803811) entered blocking state

[85731.477892] cbr0: port 13(vetha8803811) entered forwarding state

[85731.581605] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85731.604371] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85731.610987] cbr0: port 14(vethdac2429b) entered blocking state

[85731.616968] cbr0: port 14(vethdac2429b) entered disabled state

[85731.623056] device vethdac2429b entered promiscuous mode

[85731.628684] cbr0: port 14(vethdac2429b) entered blocking state

[85731.634686] cbr0: port 14(vethdac2429b) entered forwarding state

[85732.028233] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85732.058826] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85732.065420] cbr0: port 15(vethdb06219c) entered blocking state

[85732.071418] cbr0: port 15(vethdb06219c) entered disabled state

[85732.077557] device vethdb06219c entered promiscuous mode

[85732.083087] cbr0: port 15(vethdb06219c) entered blocking state

[85732.089087] cbr0: port 15(vethdb06219c) entered forwarding state

[85732.878470] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85732.920751] IPv6: ADDRCONF(NETDEV_UP): veth1b4a356d: link is not ready

[85732.927544] IPv6: ADDRCONF(NETDEV_CHANGE): veth1b4a356d: link becomes ready

[85732.934793] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85732.941498] cbr0: port 16(veth1b4a356d) entered blocking state

[85732.947493] cbr0: port 16(veth1b4a356d) entered disabled state

[85732.953662] device veth1b4a356d entered promiscuous mode

[85732.959231] cbr0: port 16(veth1b4a356d) entered blocking state

[85732.965279] cbr0: port 16(veth1b4a356d) entered forwarding state

[85733.475754] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85733.521927] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85733.528766] cbr0: port 17(vethc49b95be) entered blocking state

[85733.534764] cbr0: port 17(vethc49b95be) entered disabled state

[85733.540908] device vethc49b95be entered promiscuous mode

[85733.546504] cbr0: port 17(vethc49b95be) entered blocking state

[85733.552502] cbr0: port 17(vethc49b95be) entered forwarding state

[85734.134726] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[85734.178505] IPv6: ADDRCONF(NETDEV_UP): vethc9bbaa29: link is not ready

[85734.185458] IPv6: ADDRCONF(NETDEV_CHANGE): vethc9bbaa29: link becomes ready

[85734.192845] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

[85734.199768] cbr0: port 18(vethc9bbaa29) entered blocking state

[85734.205759] cbr0: port 18(vethc9bbaa29) entered disabled state

[85734.211927] device vethc9bbaa29 entered promiscuous mode

[85734.217479] cbr0: port 18(vethc9bbaa29) entered blocking state

[85734.223467] cbr0: port 18(vethc9bbaa29) entered forwarding statebash-4.4# dmesg | grep conn

[ 1.451351] nf_conntrack version 0.5.0 (65536 buckets, 262144 max)

The tricky part is… I assume I need to run these commands IN the container. If I run them out of the container I get very neutered results, probably mostly stuff for kubernetes.

The problem is, I can only run /usr/bin/toolbox in the normal SSH environment, and not in the container. So I’m kind of curious how I can install custom libs inside the container… maybe I just need to put it in my docker image or something?

Right now I’m using Tesla with a hackney adapter, and pooling/keep-alive disabled (someone else suggested that).

I have tried Buoy, Tesla, Machine gun (gun + poolboy) and have spent a lot of time adjusting the pool settings. Before I realized there was a bigger problem that’s basically what I spent all my time doing without much success.

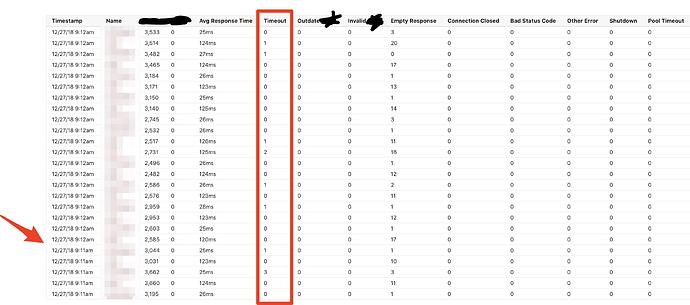

The way my timeouts work is this… for every outbound request I pattern match against the error codes the library can potentially send back. Some error codes I don’t care about but all the common ones I log. I then send that error code to a StatsLogger which aggregates and sums every 1 minute, and writes those 1 minute increments to the database. So basically I see a by-host, 1 minute breakdown, of all failure codes.

So “timeout” is different for all the different libraries (as far as the error code they choose), but in my current configuration it’s this:

{stats, body} = case response do

{:error, :econnrefused} ->

{%{stats | timeout: 1}, nil}

{:error, :timeout} ->

{%{stats | timeout: 1}, nil}

{:error, :closed} ->

{%{stats | connection_closed: 1, response_time: nil}, nil}

{:error, :socket_closed_remotely} ->

{%{stats | connection_closed: 1, response_time: nil}, nil}

#{:error, %{reason: :shutdown}} ->

# {%{stats | shutdown: 1, response_time: nil}, nil}

#{:error, %{reason: :pool_timeout}} ->

# {%{stats | pool_timeout: 1, response_time: nil}, nil}

{:error, reason} ->

RedisLogger.error("[UNKNOWN HTTP ERROR] #{inspect(reason)} #{url}")

{%{stats | other_error: 1, response_time: nil}, nil}

{:ok, %{status: status}} when status >= 400 ->

{%{stats | bad_status_code: 1}, nil}

{:ok, %{body: ""}} ->

{%{stats | empty_response: 1}, nil}

{:ok, %{body: nil}} ->

{%{stats | empty_response: 1}, nil}

{:ok, resp} ->

{stats, resp.body}

end

Here’s what my app interface looks like. I blocked out some column headers that are mislabled which would make things confusing. The column with a black header could just be called “responses with data”. What’s important is to see the arrow where you can see it change to the next minute. you will notice theres 20 entries for 9:12am. Thats because I’m currently querying 2 outgoing APIs and I have 10 app instances running. Each app logs each ‘name’ (or API) separately. In the timeout column you can see that the timeout is ~.07% of the incoming request amount for that API. If this number hovers around .07-.7% it’s acceptable, as I’m sure some of those may be legitimate timeouts. Even if they’re not, it’s still an acceptable amount.

When everything starts to have a problem, timeouts will start to rapidly increase and “successful responses with data” will decrease such that when you add those 2 columns together, timeouts will be 80-90% of it.

If I lowered my # of apps so that its getting ~10K per API per app, timeouts would aggressively increase.

Currently I don’t log anything on the incoming request. I made another thread about how to look the response time but in general im not too worried about the performance of that or if it fails, becuase this is way more important to fix. Once i fix it, i can always log the overall performance and tune it

![]()

Sorry for off-topic, but do you make the requests to the same domains frequently? If so, I’d still use pooling and definitely keep-alive, it would help you avoid setting up new tcp connections needlessly.

So I’m kind of curious how I can install custom libs inside the container… maybe I just need to put it in my docker image or something?

I suppose you are running the elixir app in a container, possible with alpine linux inside? If so, you can connect to the container and install the libraries you want with apk. Check out Configure Networking - Alpine Linux. To get ss, you can, for example, apk add iproute2.

Not seeing signs of the conntrack table overflowing on the host kernel in the dmesg output.

Those interfaces flapping and entering blocking state would be strange on a bare metal server but maybe it’s normal on a kubernees node (pods being stopped and started?) Not sure because I’ve zero experience with kubernees.

Great info. Can you try running this outside of K8s? I would want to make sure that this isn’t related to K8s DNS lookups.

EDIT: I only bring this up because I ran into some really strange DNS issues on AWS when I first used K8s. I had to add:

dnsConfig:

options:

- name: use-vc

to my pod spec in order to avoid issues. You’re doing a LOT more out bound requests than I am though, so it may be worth just testing this outside the KubeDNS world to isolate the problem.

ANOTHER EDIT: On latest k8s you may actually have CoreDNS instead of KubeDNS. This blog post here may be useful https://github.com/coredns/deployment/blob/master/kubernetes/Scaling_CoreDNS.md

Instead of adding debugging tools to your container, it should be possible to run them on the host os after entering container’s namespace.