I don’t know how useful this is, since I’m just running iex -S mix with my NIF and it’s not running in production or anything, but I hope it helps.

Is it possible to run erlang:system_info to check schedulers_state, scheduling_statistics, dirty_cpu_schedulers_online, dirty_cpu_schedulers?

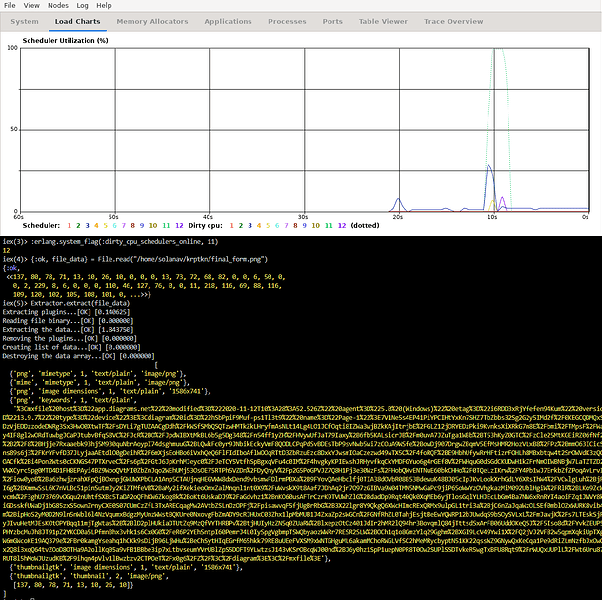

```
iex(1)> {:ok, file_data} = File.read("/home/solanav/krptkn/final_form.png")
{:ok,
 <<137, 80, 78, 71, 13, 10, 26, 10, 0, 0, 0, 13, 73, 72, 68, 82, 0, 0, 6, 50, 0,
   0, 2, 229, 8, 6, 0, 0, 0, 110, 46, 127, 76, 0, 0, 11, 218, 116, 69, 88, 116,
   109, 120, 102, 105, 108, 101, 0, ...>>}
```

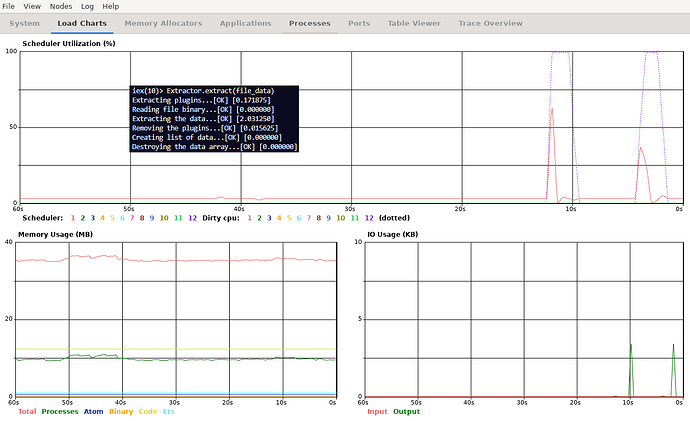

```
iex(2)> Extractor.extract(file_data)
Extracting plugins...[OK] [0.046875]
Reading file binary...[OK] [0.000000]
Extracting the data...[OK] [0.218750]
Removing the plugins...[OK] [0.000000]
Creating list of data...[OK] [0.000000]
Destroying the data array...[OK] [0.000000]
[
  {'png', 'mimetype', 1, 'text/plain', 'image/png'},
  {'png', 'image dimensions', 1, 'text/plain', '1586x741'},
  {'mime', 'mimetype', 1, 'text/plain', 'image/png'},
  {'png', 'keywords', 1, 'text/plain', 'url encoded data'},
  {'thumbnailgtk', 'image dimensions', 1, 'text/plain', '1586x741'},
  {'thumbnailgtk', 'thumbnail', 2, 'image/png', [137, 80, 78, 71, 13, 10, 26, 10]},
  {'gstreamer', 'mimetype', 1, 'text/plain', 'image/png'},
  {'gstreamer', 'video dimensions', 1, 'text/plain', '1586x741'},
  {'gstreamer', 'video depth', 1, 'text/plain', '32'},
  {'gstreamer', 'pixel aspect ratio', 1, 'text/plain', '1/1'}
]
iex(3)> :erlang.system_info(:schedulers_state)
{12, 12, 12}
iex(4)> :erlang.system_info(:scheduling_statistics)
[
  {:process_max, 0, 0},
  {:process_high, 0, 0},
  {:process_normal, 0, 0},
  {:process_low, 0, 0},
  {:port, 0, 0}
]
iex(5)> :erlang.system_info(:dirty_cpu_schedulers_online)
12
iex(6)> :erlang.system_info(:dirty_cpu_schedulers)
12
```
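To avoid typing each call by hand, the scheduler counts can be collected in one go. This is only a minimal sketch: the module name SchedulerInfo and the function snapshot/0 are made up for illustration, and the list of keys is my own selection of documented :erlang.system_info/1 items, not something taken from the session above.

```elixir
defmodule SchedulerInfo do
  # Hypothetical helper (not part of the original session).
  # Each key is a documented argument to :erlang.system_info/1.
  @keys [
    :schedulers,
    :schedulers_online,
    :dirty_cpu_schedulers,
    :dirty_cpu_schedulers_online,
    :dirty_io_schedulers
  ]

  # Returns a map of key => value for the running VM.
  def snapshot do
    Map.new(@keys, fn key -> {key, :erlang.system_info(key)} end)
  end
end

IO.inspect(SchedulerInfo.snapshot(), label: "schedulers")
```

On a machine like the one above, dirty_cpu_schedulers and dirty_cpu_schedulers_online would both be expected to come back as 12, matching iex(5) and iex(6).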

And the most useful would be to check schedulers using microstate accounting? msacc:start(1000), msacc:print(), msacc:stop(). Might not be wise to use it in a production env…

Here I did :msacc.start(1000), ran the NIF, printed and then stopped.

```
iex(8)> :msacc.print()
Average thread real-time    : 1001601 us
Accumulated system run-time :    7208 us
Average scheduler run-time  :     593 us

        Thread      aux check_io emulator       gc    other     port    sleep

Stats per thread:
     async( 0)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
       aux( 1)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%   99.99%
dirty_cpu_( 1)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 2)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 3)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 4)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 5)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 6)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 7)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 8)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_( 9)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_(10)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_(11)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_cpu_(12)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 1)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 2)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 3)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 4)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 5)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 6)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 7)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 8)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s( 9)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_s(10)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
      poll( 0)    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
 scheduler( 1)    0.00%    0.05%    0.00%    0.00%    0.00%    0.00%   99.94%
 scheduler( 2)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler( 3)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler( 4)    0.00%    0.00%    0.00%    0.00%    0.07%    0.00%   99.93%
 scheduler( 5)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler( 6)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler( 7)    0.00%    0.00%    0.00%    0.00%    0.01%    0.00%   99.99%
 scheduler( 8)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler( 9)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler(10)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler(11)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%
 scheduler(12)    0.00%    0.00%    0.00%    0.00%    0.06%    0.00%   99.94%

Stats per type:
         async    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
           aux    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%   99.99%
dirty_cpu_sche    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
dirty_io_sched    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
          poll    0.00%    0.00%    0.00%    0.00%    0.00%    0.00%  100.00%
     scheduler    0.00%    0.00%    0.00%    0.00%    0.05%    0.00%   99.94%
:ok
```
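One caveat about this run: as far as I understand the msacc documentation, :msacc.start(1000) collects for 1000 ms, blocks the shell while doing so, and then stops on its own. A NIF called after it returns would fall outside the measured window, which would be consistent with every thread showing close to 100% sleep. A minimal sketch of measuring around the call instead, using the non-blocking :msacc.start/0; the module MsaccSketch and the function measure/1 are hypothetical names, and this assumes runtime_tools (which ships msacc) is available, as it normally is under iex -S mix:

```elixir
defmodule MsaccSketch do
  # Hypothetical helper (not from the original post): runs `fun`
  # while microstate accounting is collecting, then stops collection.
  def measure(fun) when is_function(fun, 0) do
    :msacc.reset()   # clear counters from earlier runs
    :msacc.start()   # start collecting; returns immediately

    try do
      fun.()
    after
      :msacc.stop()  # stop even if fun raises
    end
  end
end

# Stand-in workload; with the NIF from this thread it would be
#   MsaccSketch.measure(fn -> Extractor.extract(file_data) end)
result = MsaccSketch.measure(fn -> Enum.sum(1..1_000_000) end)
IO.inspect(result, label: "result")

# Print the counters gathered while the function was running.
:msacc.print()
```

With this shape, the time spent in the call should show up as non-sleep time on whichever thread ran it: a scheduler(N) row for a regular NIF, or a dirty_cpu_(N) row if the NIF is flagged as dirty CPU.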