I came across something intriguing that aligns closely with one of Elixir’s strategic focuses: local AI. Exo, a company working on distributed AI, has built an AI cluster out of consumer hardware, connecting Mac Minis over Thunderbolt 5. They’re running models like Nemotron 70B at 8 tokens per second, with plans to scale to Llama 405B. The kicker? They’re doing this entirely with local resources, sidestepping the cloud altogether. You can check out their project here: Exo on GitHub.

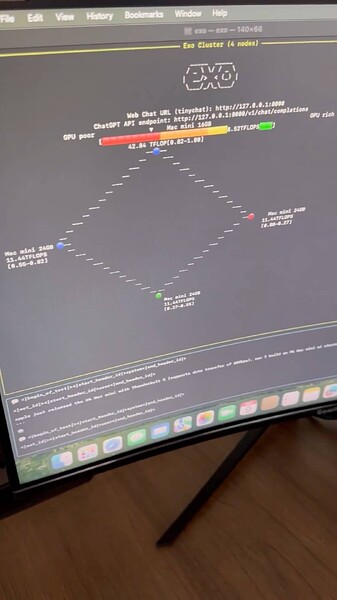

For example, Alex Cheema’s setup (based on Exo’s tools) runs models on a cluster of four M4 Pro Mac Minis linked by Thunderbolt 5, at impressive speeds and without relying on cloud infrastructure. See the setup in action here: Alex Cheema on x.com.

This is where Elixir’s potential becomes exciting. With its strong foundation in distributed systems, concurrency, and fault tolerance, Elixir is well positioned to handle not only local AI but also Cloud AI and High-Performance Scientific Computing (HPSC). Recently, an Elixir project won a Mozilla Builders Accelerator grant to create a distributed ML framework focused on local AI, highlighting Elixir’s suitability for this emerging area. Mozilla’s accelerator champions AI models that run on personal devices as an alternative to centralized models. Read about the grant and other projects here: Mozilla Builders Accelerator.

Incidentally, Exo is hiring for distributed AI and hardware cluster roles, a chance for hands-on work in democratized AI. The pay is big: $250K-$500K plus equity for "cracked" software engineers and $300K-$600K plus equity for ML researchers.

Why this matters for Elixir:

- Elixir’s strengths in distributed systems: Its concurrency and fault-tolerant design make it ideal for managing AI workloads across diverse local devices, whether iPhones, gaming consoles, or desktops.

- Growing trend toward local AI: With privacy concerns and edge-computing power both on the rise, decentralized AI running on personal hardware is more feasible than ever. Exo is proving what’s possible, and Elixir has everything it needs to not only match it but lead the field.

- Building Elixir’s reputation as a local AI powerhouse: This is an opportunity to solidify Elixir as the go-to for scalable, distributed AI frameworks across cloud, local, and HPSC applications.

Some questions for the community:

- What would it take for Elixir to truly lead in local AI? How can we capitalize on our strengths in concurrency and fault-tolerant systems?

- What tools or frameworks exist or could exist to boost Elixir’s capabilities for distributed AI workloads? Where does the ecosystem need to grow?

- Can Elixir’s decentralized AI capabilities be a strong alternative to cloud-based models? What’s needed to make this vision real?

Looking forward to everyone’s thoughts.