Hello everyone ![]() ,

,

I am required to load test products before deployment, and unfortunately, I am facing a big issue with Presence.track (and possibly Presence.list() in the future, as for links at the end).

Here is the simple implementation of the channel I used for load testing:

@impl true

def join("room:lobby", payload, socket) do

send(self(), :after_join)

{:ok, socket}

end

def handle_info(:after_join, socket) do

{:ok, _} = ChattingWeb.Channels.Presence.track(socket, socket.id, %{})

{:noreply, socket}

end

@spec terminate(any(), any()) :: :ok

def terminate(reason, _socket) do

Logger.debug"[RoomChannel] terminate #{inspect reason}"

:ok

end

What I have tried (changing pool_size) with no noticeable results:

(1)

# application.ex

def start(_type, _args) do

children = [

# ...

{Phoenix.PubSub, [name: Chatting.PubSub, pool_size: 100]},

{ChattingWeb.Channels.Presence, [pool_size: 10_000 ]},

ChattingWeb.Endpoint

]

(2) Wrap Presence.track inside a task? This will make Presence Tracker very very inconsistent as it is already eventually consistent by design, so that is not the solution

14:54:25.523 [debug] [RoomChannel] terminate {:timeout, {GenServer, :call, [ChattingWeb.Channels.Presence_shard1484, {:track, #PID<0.544937.0>, "room:lobby", "91990be2-cf40-45e4-b2b2-d225bb45f5eb", %{}}, 5000]}}

14:54:25.522 [error] GenServer #PID<0.542937.0> terminating

** (stop) exited in: GenServer.call(ChattingWeb.Channels.Presence_shard1484, {:track, #PID<0.542937.0>, "room:lobby", "49b459ef-e13a-4ed7-8c7b-5c15cbd0d781", %{}}, 5000)

** (EXIT) time out

(elixir 1.16.2) lib/gen_server.ex:1114: GenServer.call/3

(chatting 0.1.0) lib/chatting_web/channels/room_channel.ex:20: ChattingWeb.RoomChannel.handle_info/2

(phoenix 1.7.11) lib/phoenix/channel/server.ex:358: Phoenix.Channel.Server.handle_info/2

(stdlib 5.2.1) gen_server.erl:1095: :gen_server.try_handle_info/3

(stdlib 5.2.1) gen_server.erl:1183: :gen_server.handle_msg/6

(stdlib 5.2.1) proc_lib.erl:241: :proc_lib.init_p_do_apply/3

Last message: :after_join

State: %Phoenix.Socket{assigns: %{}, channel: ChattingWeb.RoomChannel, channel_pid: #PID<0.542937.0>, endpoint: ChattingWeb.Endpoint, handler: ChattingWeb.UserSocket, id: "49b459ef-e13a-4ed7-8c7b-5c15cbd0d781", joined: true, join_ref: "3", private: %{log_handle_in: :debug, log_join: :info}, pubsub_server: Chatting.PubSub, ref: nil, serializer: Phoenix.Socket.V2.JSONSerializer, topic: "room:lobby", transport: :websocket, transport_pid: #PID<0.543895.0>}

14:54:25.522 [error] GenServer #PID<0.544271.0> terminating

** (stop) exited in: GenServer.call(ChattingWeb.Channels.Presence_shard1484, {:track, #PID<0.544271.0>, "room:lobby", "94ebe1e7-3594-4a02-a8eb-e72113b5353e", %{}}, 5000)

** (EXIT) time out

(elixir 1.16.2) lib/gen_server.ex:1114: GenServer.call/3

(chatting 0.1.0) lib/chatting_web/channels/room_channel.ex:20: ChattingWeb.RoomChannel.handle_info/2

(phoenix 1.7.11) lib/phoenix/channel/server.ex:358: Phoenix.Channel.Server.handle_info/2

(stdlib 5.2.1) gen_server.erl:1095: :gen_server.try_handle_info/3

(stdlib 5.2.1) gen_server.erl:1183: :gen_server.handle_msg/6

(stdlib 5.2.1) proc_lib.erl:241: :proc_lib.init_p_do_apply/3

Last message: :after_join

14:54:30.445 [error] GenServer ChattingWeb.Channels.Presence_shard1484 terminating

** (CaseClauseError) no case clause matching: {:error, {:system_limit, [{:erlang, :spawn_link, [Task.Supervised, :reply, [{:nonode@nohost, ChattingWeb.Channels.Presence_shard1484, #PID<0.2052.0>}, [#PID<0.566.0>, ChattingWeb.Channels.Presence.Supervisor, Chatting.Supervisor, #PID<0.342.0>], :monitor]], [error_info: %{module: :erl_erts_errors}]}, {Task.Supervised, :start_link, 2, [file: ~c"lib/task/supervised.ex", line: 14]}, {DynamicSupervisor, :start_child, 3, [file: ~c"lib/dynamic_supervisor.ex", line: 795]}, {DynamicSupervisor, :handle_start_child, 2, [file: ~c"lib/dynamic_supervisor.ex", line: 781]}, {:gen_server, :try_handle_call, 4, [file: ~c"gen_server.erl", line: 1131]}, {:gen_server, :handle_msg, 6, [file: ~c"gen_server.erl", line: 1160]}, {:proc_lib, :init_p_do_apply, 3, [file: ~c"proc_lib.erl", line: 241]}]}}

(elixir 1.16.2) lib/task/supervisor.ex:546: Task.Supervisor.async/6

(phoenix 1.7.11) lib/phoenix/presence.ex:606: Phoenix.Presence.async_merge/2

(phoenix 1.7.11) lib/phoenix/presence.ex:500: Phoenix.Presence.handle_diff/2

(phoenix_pubsub 2.1.3) lib/phoenix/tracker/shard.ex:508: Phoenix.Tracker.Shard.report_diff/3

(phoenix_pubsub 2.1.3) lib/phoenix/tracker/shard.ex:344: Phoenix.Tracker.Shard.drop_presence/2

(phoenix_pubsub 2.1.3) lib/phoenix/tracker/shard.ex:218: Phoenix.Tracker.Shard.handle_info/2

(stdlib 5.2.1) gen_server.erl:1095: :gen_server.try_handle_info/3

(stdlib 5.2.1) gen_server.erl:1183: :gen_server.handle_msg/6

(stdlib 5.2.1) proc_lib.erl:241: :proc_lib.init_p_do_apply/3

Last message: {:EXIT, #PID<0.554202.0>, :noproc}

State: %{pubsub_server: Chatting.PubSub, log_level: false, presences: %Phoenix.Tracker.State{replica: {:nonode@nohost, 1714308076082337}, context: %{{:nonode@nohost, 1714308076082337} => 334608}, clouds: %{{:nonode@nohost, 1714308076082337} => MapSet.new([{{:nonode@nohost, 1714308076082337}, 253267}, {{:nonode@nohost, 1714308076082337}, 306433}, {{:nonode@nohost, 1714308076082337}, 235263}, {{:nonode@nohost, 1714308076082337}, 289567}, {{:nonode@nohost, 1714308076082337}, 280347}, {{:nonode@nohost, 1714308076082337}, 262143}, {{:nonode@nohost, 1714308076082337}, 237141}, {{:nonode@nohost, 1714308076082337}, 278634}, {{:nonode@nohost, 1714308076082337}, 274722}, {{:nonode@nohost, 1714308076082337}, 287044}, {{:nonode@nohost, 1714308076082337}, 279115}, {{:nonode@nohost, 1714308076082337}, 317479}, {{:nonode@nohost, 1714308076082337}, 305956}, {{:nonode@nohost, 1714308076082337}, 329034}, {{:nonode@nohost, 1714308076082337}, 321040}, {{:nonode@nohost, 1714308076082337}, 254547}, {{:nonode@nohost, 1714308076082337}, 315778}, {{:nonode@nohost, 1714308076082337}, 275319}, {{:nonode@nohost, 1714308076082337}, 245256}, {{:nonode@nohost, 1714308076082337}, 252318}, {{:nonode@nohost, 1714308076082337}, 247804}, {{:nonode@nohost, 1714308076082337}, 254101}, {{:nonode@nohost, 1714308076082337}, 235555}, {{:nonode@nohost, 1714308076082337}, 248297}, {{:nonode@nohost, 1714308076082337}, 293332}, {{:nonode@nohost, 1714308076082337}, 261099}, {{:nonode@nohost, 1714308076082337}, 319460}, {{:nonode@nohost, 1714308076082337}, 253883}, {{:nonode@nohost, 1714308076082337}, 322082}, {{:nonode@nohost, 1714308076082337}, 319785}, {{:nonode@nohost, 1714308076082337}, 306916}, {{:nonode@nohost, 1714308076082337}, 304791}, {{:nonode@nohost, 1714308076082337}, 299706}, {{:nonode@nohost, 1714308076082337}, 257108}, {{:nonode@nohost, 1714308076082337}, 307406}, {{:nonode@nohost, 1714308076082337}, 305716}, {{:nonode@nohost, 1714308076082337}, 300977}, {{:nonode@nohost, 1714308076082337}, 297285}, {{:nonode@nohost, 1714308076082337}, 323886}, {{:nonode@nohost, 1714308076082337}, 282769}, {{:nonode@nohost, ...}, 288775}, {{...}, ...}, {...}, ...])}, values: ChattingWeb.Channels.Presence_shard1484, pids: #Reference<0.3223613206.2631270406.173034>, mode: :normal, delta: %Phoenix.Tracker.State{replica: {:nonode@nohost, 1714308076082337}, context: %{}, clouds: %{{:nonode@nohost, 1714308076082337} => MapSet.new([{{:nonode@nohost, 1714308076082337}, 253267}, {{:nonode@nohost, 1714308076082337}, 306433}, {{:nonode@nohost, 1714308076082337}, 243256}, {{:nonode@nohost, 1714308076082337}, 141152}, {{:nonode@nohost, 1714308076082337}, 235263}, {{:nonode@nohost, 1714308076082337}, 289567}, {{:nonode@nohost, 1714308076082337}, 280347}, {{:nonode@nohost, 1714308076082337}, 148722}, {{:nonode@nohost, 1714308076082337}, 262143}, {{:nonode@nohost, 1714308076082337}, 240776}, {{:nonode@nohost, 1714308076082337}, 233737}, {{:nonode@nohost, 1714308076082337}, 237141}, {{:nonode@nohost, 1714308076082337}, 240841}, {{:nonode@nohost, 1714308076082337}, 181530}, {{:nonode@nohost, 1714308076082337}, 278634}, {{:nonode@nohost, 1714308076082337}, 158785}, {{:nonode@nohost, 1714308076082337}, 186440}, {{:nonode@nohost, 1714308076082337}, 274722}, {{:nonode@nohost, 1714308076082337}, 287044}, {{:nonode@nohost, 1714308076082337}, 279115}, {{:nonode@nohost, 1714308076082337}, 317479}, {{:nonode@nohost, 1714308076082337}, 305956}, {{:nonode@nohost, 1714308076082337}, 329034}, {{:nonode@nohost, 1714308076082337}, 179127}, {{:nonode@nohost, 1714308076082337}, 321040}, {{:nonode@nohost, 1714308076082337}, 254547}, {{:nonode@nohost, 1714308076082337}, 233121}, {{:nonode@nohost, 1714308076082337}, 185188}, {{:nonode@nohost, 1714308076082337}, 137959}, {{:nonode@nohost, 1714308076082337}, 315778}, {{:nonode@nohost, 1714308076082337}, 275319}, {{:nonode@nohost, 1714308076082337}, 245256}, {{:nonode@nohost, 1714308076082337}, 252318}, {{:nonode@nohost, ...}, 247804}, {{...}, ...}, {...}, ...])}, values: %{{{:nonode@nohost, 1714308076082337}, 245256} => {#PID<0.556979.0>, "room:lobby", "9ff6e317-fccf-4934-8f31-8983d31f94c7", %{phx_ref: "F8py1N5qBap8Q0QF"}}, {{:nonode@nohost, 1714308076082337}, 245605} => {#PID<0.558387.0>, "room:lobby", "993be463-7012-4db1-9c08-f3ae287c8cbf", %{phx_ref: "F8py1N7oicl8Q7ME"}}, {{:nonode@nohost, 1714308076082337}, 244570} => {#PID<0.554235.0>, "room:lobby", "efb244ac-9aec-4968-8c9a-f22e4f593571", %{phx_ref: "F8py1N1kKbF8Q1ZC"}}, {{:nonode@nohost, 1714308076082337}, 245697} => {#PID<0.558594.0>, "room:lobby", "8327bc69-fc55-4b0e-8134-99bfeb846ae7", %{phx_ref: "F8py1N8MQPl8QyjD"}}, {{:nonode@nohost, 1714308076082337}, 245338} => {#PID<0.557424.0>, "room:lobby", "02f39af0-426b-418d-a3e3-b6d6eb2e0b79", %{phx_ref: "F8py1N6JPAR8Q1VF"}}, {{:nonode@nohost, 1714308076082337}, 245578} => {#PID<0.558860.0>, "room:lobby", "823b865d-7fc4-405c-a6da-e7e9d6c21943", %{phx_ref: "F8py1N7eD558Q67E"}}, {{:nonode@nohost, 1714308076082337}, 245814} => {#PID<0.558927.0>, "room:lobby", "4933d3b9-8a59-42af-8a9e-9a9faa062936", %{phx_ref: "F8py1N81ugF8Q3hC"}}, {{:nonode@nohost, 1714308076082337}, 245037} => {#PID<0.546531.0>, "room:lobby", "2446a94f-2bb5-4b4e-90b7-83edcb5c7f7e", %{phx_ref: "F8py1N4X3Sd8Q6dI"}}, {{:nonode@nohost, 1714308076082337}, 245407} => {#PID<0.547176.0>, "room:lobby", "a382cc0a-039e-4337-8166-720519b0bca1", %{phx_ref: "F8py1N6hOV18Q6FE"}}, {{:nonode@nohost, 1714308076082337}, 244556} => {#PID<0.554272.0>, "room:lobby", "5c1caa8a-28b2-4344-bc18-eef73ca02427", %{phx_ref: "F8py1N1e8gl8Q1LC"}}, {{:nonode@nohost, 1714308076082337}, 244751} => {#PID<0.544335.0>, "room:lobby", "d3bc2049-6cda-4427-95de-40ac61972599", %{phx_ref: "F8py1N2f_9l8Q9KK"}}, {{:nonode@nohost, 1714308076082337}, 245509} => {#PID<0.557177.0>, "room:lobby", "77db1d76-c499-4e00-8398-2b950b886e2b", %{phx_ref: "F8py1N7HO2N8Q2uF"}}, {{:nonode@nohost, 1714308076082337}, 245566} => {#PID<0.558106.0>, "room:lobby", "3f00d128-84b6-49e9-ba43-3f4788bb2d35", %{phx_ref: "F8py1N7aaKR8Q6wE"}}, {{:nonode@nohost, 1714308076082337}, 245441} => {#PID<0.557106.0>, "room:lobby", "5289fd9e-5a63-44f9-8ea3-115e6659c3f5", %{phx_ref: "F8py1N6sSKx8Q2MF"}}, {{:nonode@nohost, 1714308076082337}, 244693} => {#PID<0.546227.0>, "room:lobby", "8011d44c-39d7-4d65-bf53-ca9cba5a4cd6", %{phx_ref: "F8py1N2LnLZ8Q3UC"}}, {{:nonode@nohost, 1714308076082337}, 245062} => {#PID<0.556411.0>, "room:lobby", "d9314ded-8456-4c95-9c95 (truncated)

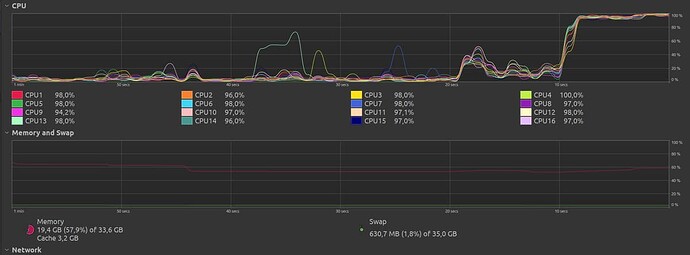

Possibly ChattingWeb.Channels.Presence_shard1484 is getting too many messages in its inbox?

The following problem has been addressed here with implementation changes of the past: