Hi all, I’ve hit a performance problem with caching. I wonder if there’s an easy way to batch concurrent calls (with the same key) for a cacheable result.

To illustrate the problem: I’m using Cachex for caching, and to cache some actions/external network calls I use this helper, which wraps an action/function so that its result is cached:

    @doc """
    Wraps an action in the cache under `cache_key`.

    Only successful results are cached; error results are not.

    Input:
      cache_key: the cache key, can be any term (tuple, map, etc.)
      result_fn: anonymous zero-argument function that returns {:ok, any()} or {:error, any()}
      opt:
        ttl: time to live, defaults to :timer.hours(1)

    Output:
      {:ok, any()} | {:error, any()}, based on result_fn
    """
    def wrap_caching(cache_key, result_fn, opt \\ []) do
      case Cachex.get(:my_cache, cache_key) do
        {:ok, nil} ->
          # Cache miss: run the action and store only successful results.
          result = result_fn.()

          case result do
            {:ok, data} ->
              Cachex.put(:my_cache, cache_key, data, ttl: opt[:ttl] || :timer.hours(1))

            {:error, _} ->
              :do_nothing
          end

          result

        {:ok, data} ->
          # Cache hit.
          {:ok, data}
      end
    end

So if I make the same external network call several times in a function, it caches nicely and calls the external network only once, like:

    def call_osrm_routing(origin, destination) do
      wrap_caching({:call_osrm_routing, origin, destination}, fn ->
        # ... do network call to OSRM routing server
      end)
    end

    def do_some_logic() do
      # In this function, the OSRM routing server is actually called only once
      call_osrm_routing(origin, destination)
      # ... do some work
      call_osrm_routing(origin, destination)
      # ... do some work
      call_osrm_routing(origin, destination)
    end

However, I hit a problem when the calls are concurrent (for example, in an Absinthe async resolver): each concurrent call makes the external network call itself, because when the function is called the cached result isn’t ready yet. For example:

    # These make the external OSRM call three times, because the cached result
    # isn't ready yet when the second and third invocations run
    [
      Task.async(fn -> call_osrm_routing(origin, destination) end),
      Task.async(fn -> call_osrm_routing(origin, destination) end),
      Task.async(fn -> call_osrm_routing(origin, destination) end)
    ]

How should I solve this problem? Is there a library/tool to help?

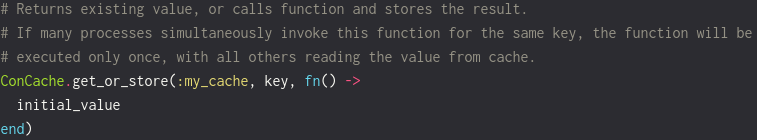

I was thinking of building a batching GenServer that would intercept the function call and do the work if the same work isn’t already in progress; if the same work is currently executing, it would wait until that finishes. But I’d like to know if there’s a better way.
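To make the batching GenServer idea concrete, here is a rough sketch of what I have in mind. The module name `SingleFlight`, its `run/4` function, and the choice to turn a raised exception into an `{:error, ...}` tuple are all my own assumptions for illustration, not something taken from Cachex or any other library. The first caller for a key starts the work in a separate task; callers that arrive while it is running are queued and all receive the same result.

```elixir
defmodule SingleFlight do
  @moduledoc """
  Hypothetical sketch: coalesces concurrent calls that share a key, so the
  work function runs once and every waiting caller gets the same result.
  """
  use GenServer

  def start_link(opts \\ []) do
    GenServer.start_link(__MODULE__, %{}, opts)
  end

  @doc "Runs `fun` for `key`, or waits for an identical call already in flight."
  def run(server, key, fun, timeout \\ 5_000) when is_function(fun, 0) do
    GenServer.call(server, {:run, key, fun}, timeout)
  end

  # State is a map of key => list of callers waiting for that key.
  @impl true
  def init(state), do: {:ok, state}

  @impl true
  def handle_call({:run, key, fun}, from, in_flight) do
    case in_flight do
      %{^key => waiters} ->
        # The same work is already running: queue this caller, reply later.
        {:noreply, Map.put(in_flight, key, [from | waiters])}

      _ ->
        # First caller for this key: run the work in a separate process so
        # the GenServer stays free to accept calls for other keys.
        server = self()

        Task.start(fn ->
          result =
            try do
              fun.()
            rescue
              e -> {:error, e}
            catch
              kind, reason -> {:error, {kind, reason}}
            end

          send(server, {:done, key, result})
        end)

        {:noreply, Map.put(in_flight, key, [from])}
    end
  end

  @impl true
  def handle_info({:done, key, result}, in_flight) do
    # Work finished: reply to every queued caller and forget the key.
    {waiters, rest} = Map.pop(in_flight, key, [])
    Enum.each(waiters, &GenServer.reply(&1, result))
    {:noreply, rest}
  end
end
```

With this started under a name (e.g. `SingleFlight.start_link(name: SingleFlight)`), `call_osrm_routing/2` could wrap its body as `SingleFlight.run(SingleFlight, key, fn -> wrap_caching(key, result_fn) end)`, so concurrent callers share one in-flight call and later callers hit the cache. One caveat of this sketch: every call for every key passes through a single process's mailbox, although the work itself runs in separate tasks.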