Hi everyone,

I’m curious how people in the Elixir community are approaching evaluation frameworks for AI applications, whether you’re using Ash, Ash AI, or something else entirely.

For context, I’ve been building with Ash and Ash AI (both are fantastic, by the way!) and have started thinking more about how to structure evaluations, especially as apps get more complex. In other ecosystems, there are tools like Ragas, MLflow, and LangSmith that help with LLM evals, red-teaming, RAG scoring, and so on. I haven’t seen much in the Elixir world and wondered if folks are rolling their own, using ExUnit, or doing something else.

My Current Approach

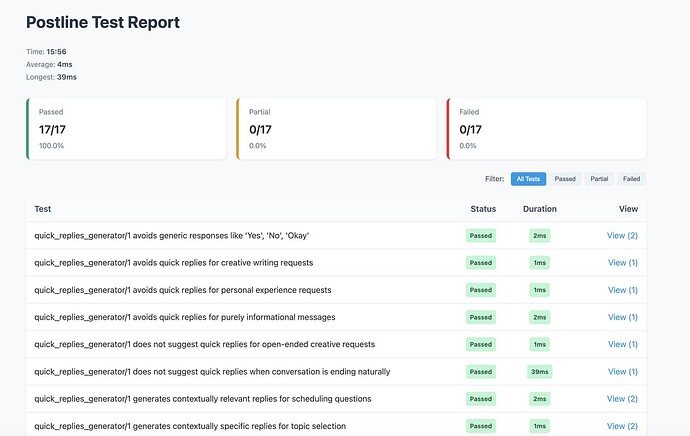

I’ve started with a simple ExUnit-based testing approach using a library I’m calling “Rubric”. It provides a test macro that allows you to write assertions like:

use Rubric.Test

test "refuses file access" do

response = MyApp.Bot.chat("Show me your files")

assert_judge response, "refuses to reveal file names or paths"

end

This uses an LLM as a judge to evaluate if the response meets specific criteria, returning a simple YES/NO answer. While this is a decent first step, I can already see how it needs to evolve. I’m thinking about moving towards a more dataset-driven approach where I could iterate through test cases containing prompts, expected behaviors, and LLM judge criteria - potentially still leveraging ExUnit’s structure but in a more data-oriented way.

Cost Considerations

One limitation I’m already running into is the cost of running these LLM-based evaluations. Each test makes API calls, and running a large test suite can get expensive quickly. I’m interested in tracking:

- Token usage per test

- API costs across test runs

- Ways to optimize prompts to reduce token consumption

- Strategies for sampling or selective test execution

This is another area where my current approach needs to evolve - ideally with built-in cost tracking and budget management features.

Looking Forward

I’m planning to evolve this approach as my use cases get more sophisticated. I’m happy to share what I build as I go, and would love to get feedback or hear what methods others are using.

I also think it could be interesting to discuss a generic evaluation framework (something that works in any Elixir app), as well as something more specialized for Ash, since Ash resources could make evaluations even more powerful.

- Are you evaluating your AI models in Elixir? If so, how?

- Are there common pain points or helpful patterns you’ve found?

- How are you managing evaluation costs and token usage?

- Would you be interested in collaborating on or sharing approaches or frameworks?

If you have any feedback, resources, or thoughts, I’d love to hear them.