Had some fun with the compiler.

I had a map of maps that was 100K lines long (pretty-printed), so I wanted to “optimize” it.

Instead of this:

```elixir
%{
  "A_KEY" => %{
    group1_key1: "blurb",
    ...
  },
  "ANOTHER_KEY" => ...
}
```

I decided to do this:

```elixir
%{
  "A_KEY" => AnotherModuleInAnotherFile.A_KEY.group(),
  "ANOTHER_KEY" => AnotherModuleInAnotherFile.ANOTHER_KEY.group(),
  ...
}

# and in a separate file

defmodule AnotherModuleInAnotherFile.A_KEY do
  def group do
    %{
      group1_key1: "blurb",
      ...
    }
  end
end
```

The map basically consisted of 155 sub-maps, which I put into separate files.

My expectation was that this would be a sensible way to split up this huge map. The sub-maps were completely constant, so I expected each `group/0` function to simply return a pointer or reference to term storage, and the whole thing to be super-cheap to compile (and to compile in parallel).
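The runtime half of this expectation does hold on the BEAM: a fully constant term in a function body is stored in the module's literal area, and each call hands back a reference to it instead of building a new map. Here is a minimal sketch to check this, with hypothetical module names, using the undocumented debugging helper `:erts_debug.same/2`, which returns `true` only when both arguments are the very same term in memory:

```elixir
defmodule LiteralDemo.A_KEY do
  # Hypothetical stand-in for one group module.
  # Every key and value is a constant, so the map is compiled as a literal.
  def group, do: %{group1_key1: "blurb", group1_key2: "more blurb"}
end

defmodule LiteralDemo.Built do
  # Same shape, but one value only arrives at runtime,
  # so a fresh map is allocated on every call.
  def group(value), do: %{group1_key1: value, group1_key2: "more blurb"}
end

# Two calls to the constant version return the same term in memory.
IO.inspect(:erts_debug.same(LiteralDemo.A_KEY.group(), LiteralDemo.A_KEY.group()),
  label: "literal shared"
)

# Two calls to the runtime-built version return equal but distinct maps.
IO.inspect(:erts_debug.same(LiteralDemo.Built.group("blurb"), LiteralDemo.Built.group("blurb")),
  label: "built shared"
)
```

This only covers what happens once the code is loaded; it says nothing about how much work the compiler does to get there.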

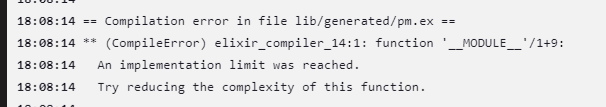

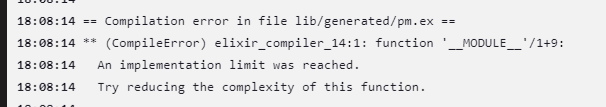

Instead, I learned about a compiler error I had not seen before:

The supposedly complex instruction was described as `{call_ext,0,{extfunc,'Elixir.MyModule.MY_KEY',group,0}}`.

On top of that, compilation time went from roughly 90s (for 300K lines in total) to more than ten minutes, at which point a supervising process killed it.

So much for my assumptions about how to make code compile in parallel.

So I kept the huge maps but split the file into three parts, which, I guess, is what I can sensibly do here.

Alternatively, I could put all 155 entries into `:persistent_term` with 155 generated `put/2` calls and use that. The map literal is just a module attribute used by a function for lookup, so that function could simply call `:persistent_term.get/1` without changing the interface.
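A minimal sketch of that alternative, assuming one module per group as in the split layout above. The module names `MyModule.A_KEY`, `MyModule.ANOTHER_KEY` and `MyModule.Lookup`, and the functions `load/0` and `lookup/1`, are hypothetical. A loader calls `:persistent_term.put/2` once per group and the lookup function reads with `:persistent_term.get/2`, so no single module has to contain the whole map as one literal:

```elixir
defmodule MyModule.A_KEY do
  # Hypothetical group module; in practice there would be 155 of these.
  def group, do: %{group1_key1: "blurb"}
end

defmodule MyModule.ANOTHER_KEY do
  def group, do: %{group2_key1: "another blurb"}
end

defmodule MyModule.Lookup do
  # Hypothetical key-to-module table; this is the part that could be generated.
  @groups [
    {"A_KEY", MyModule.A_KEY},
    {"ANOTHER_KEY", MyModule.ANOTHER_KEY}
  ]

  # Store each group once, e.g. from the application's start/2 callback.
  # Keys are namespaced with this module's name to avoid clashes.
  def load do
    Enum.each(@groups, fn {key, mod} ->
      :persistent_term.put({__MODULE__, key}, mod.group())
    end)
  end

  # Same interface as a map-based lookup: returns the group or nil.
  def lookup(key), do: :persistent_term.get({__MODULE__, key}, nil)
end

MyModule.Lookup.load()
IO.inspect(MyModule.Lookup.lookup("A_KEY"))
```

Reads from `:persistent_term` are cheap, but writes are not (each `put/2` copies the key table, and replacing or erasing an existing key triggers a global scan of all processes), so this only fits data that is loaded once at startup and then left alone.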

I guess in that case the compiler would not be forced to resolve it the same way. (My assumption is that the compiler tried to resolve the map literal just as before, but the external function calls made its work more complex…)
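The `call_ext` in the error message suggests that the outer map stopped being a constant. With the sub-maps inlined, every key and value is a literal; once the values are remote calls, the map has to be assembled by code. A small sketch of that difference at the AST level, using `Macro.quoted_literal?/1` (the key names are hypothetical, and this check only approximates what the Erlang compiler decides later):

```elixir
defmodule LiteralCheck do
  # Original layout: sub-maps written inline, so every key and value is a constant.
  def inline_version do
    quote do
      %{
        "A_KEY" => %{group1_key1: "blurb"},
        "ANOTHER_KEY" => %{group2_key1: "another blurb"}
      }
    end
  end

  # Split layout: the values are remote calls into other modules.
  # The calls are only quoted here, never executed, so those modules need not exist.
  def split_version do
    quote do
      %{
        "A_KEY" => AnotherModuleInAnotherFile.A_KEY.group(),
        "ANOTHER_KEY" => AnotherModuleInAnotherFile.ANOTHER_KEY.group()
      }
    end
  end
end

IO.inspect(Macro.quoted_literal?(LiteralCheck.inline_version()), label: "inline map is a literal")
IO.inspect(Macro.quoted_literal?(LiteralCheck.split_version()), label: "split map is a literal")
```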