I traced what Mint is doing, dropped down one level to `:ssl`, and consistently get the same results:

```elixir
defmodule SslTest do
  def run() do
    host = "localhost"
    port = 4040
    method = "POST"
    path = "/test/text"
    # 10 MiB request body
    content_length = 10 * 1024 * 1024
    ssl_opts = [verify: :verify_none]

    body = String.duplicate("-", content_length)

    # Send the whole body with a single :ssl.send/2 call...
    chunks = [body]
    # ...or uncomment this line to send it as 10 KiB chunks instead.
    # chunks = chunk_binary(body, 1024 * 10)

    IO.puts("waiting 3 seconds...")
    Process.sleep(3000)

    headers = [
      {"host", "#{host}:#{port}"},
      {"content-type", "text/plain"},
      {"content-length", content_length}
    ]

    {:ok, socket} =
      :ssl.connect(
        String.to_charlist(host),
        port,
        ssl_opts(host, ssl_opts)
      )

    # Request line and headers, sent as iodata
    request = [
      "#{method} #{path} HTTP/1.1\r\n",
      Enum.map(headers, fn {name, value} -> "#{name}: #{value}\r\n" end),
      "\r\n"
    ]

    :ok = :ssl.send(socket, request)

    for chunk <- chunks do
      :ok = :ssl.send(socket, chunk)
    end

    Process.sleep(500)

    # A recv timeout of 0 returns {:error, :timeout} if nothing has arrived yet,
    # which would crash this match; wait up to 5 s for the response instead.
    {:ok, response} = :ssl.recv(socket, 0, 5_000)
    :ok = :ssl.close(socket)
    IO.puts(response)
  end

  # TLS options as traced from Mint; caller-supplied opts override them
  # via Keyword.merge/2.
  def ssl_opts(hostname, opts) do
    [
      server_name_indication: String.to_charlist(hostname),
      versions: [:"tlsv1.3", :"tlsv1.2"],
      depth: 4,
      secure_renegotiate: true,
      reuse_sessions: true,
      packet: :raw,
      mode: :binary,
      active: false,
      ciphers: [
        %{cipher: :aes_256_gcm, key_exchange: :any, mac: :aead, prf: :sha384},
        %{cipher: :aes_128_gcm, key_exchange: :any, mac: :aead, prf: :sha256},
        %{cipher: :chacha20_poly1305, key_exchange: :any, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_ccm, key_exchange: :any, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_ccm_8, key_exchange: :any, mac: :aead, prf: :sha256},
        %{cipher: :aes_256_gcm, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :sha384},
        %{cipher: :aes_256_gcm, key_exchange: :ecdhe_rsa, mac: :aead, prf: :sha384},
        %{cipher: :aes_256_ccm, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :default_prf},
        %{cipher: :aes_256_ccm_8, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :default_prf},
        %{cipher: :chacha20_poly1305, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :sha256},
        %{cipher: :chacha20_poly1305, key_exchange: :ecdhe_rsa, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_gcm, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_gcm, key_exchange: :ecdhe_rsa, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_ccm, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :default_prf},
        %{cipher: :aes_128_ccm_8, key_exchange: :ecdhe_ecdsa, mac: :aead, prf: :default_prf},
        %{cipher: :aes_256_gcm, key_exchange: :dhe_rsa, mac: :aead, prf: :sha384},
        %{cipher: :aes_256_gcm, key_exchange: :dhe_dss, mac: :aead, prf: :sha384},
        %{cipher: :aes_256_cbc, key_exchange: :dhe_rsa, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :dhe_dss, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_128_gcm, key_exchange: :dhe_rsa, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_gcm, key_exchange: :dhe_dss, mac: :aead, prf: :sha256},
        %{cipher: :chacha20_poly1305, key_exchange: :dhe_rsa, mac: :aead, prf: :sha256},
        %{cipher: :aes_128_cbc, key_exchange: :dhe_rsa, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :dhe_dss, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :ecdhe_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :ecdhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :ecdh_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :ecdh_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :ecdhe_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :ecdhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :ecdh_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :ecdh_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :dhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :dhe_dss, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :dhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :dhe_dss, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :rsa_psk, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :rsa_psk, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :rsa_psk, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :rsa_psk, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :srp_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :srp_dss, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :srp_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :srp_dss, mac: :sha, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :rsa, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :rsa, mac: :sha256, prf: :default_prf},
        %{cipher: :aes_256_cbc, key_exchange: :rsa, mac: :sha, prf: :default_prf},
        %{cipher: :aes_128_cbc, key_exchange: :rsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :rsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :ecdhe_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :ecdhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :dhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :dhe_dss, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :ecdh_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :"3des_ede_cbc", key_exchange: :ecdh_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :des_cbc, key_exchange: :dhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :des_cbc, key_exchange: :rsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :ecdhe_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :ecdhe_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :ecdh_ecdsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :ecdh_rsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :rsa, mac: :sha, prf: :default_prf},
        %{cipher: :rc4_128, key_exchange: :rsa, mac: :md5, prf: :default_prf}
      ]
    ]
    |> Keyword.merge(opts)
  end

  # Splits `str` into slices of `size` graphemes (the same as bytes for the
  # ASCII body used here) and returns them in order.
  def chunk_binary(str, size \\ 102_400, acc \\ []) do
    case String.split_at(str, size) do
      {slice, ""} -> Enum.reverse([slice | acc])
      {slice, rest} -> chunk_binary(rest, size, [slice | acc])
    end
  end
end
```

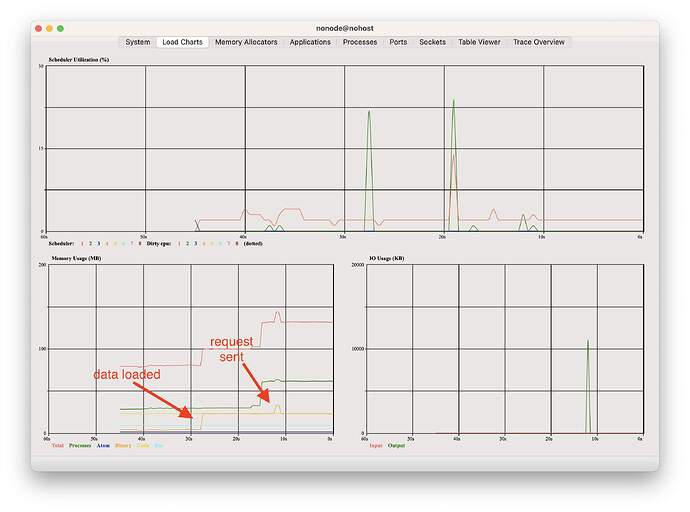

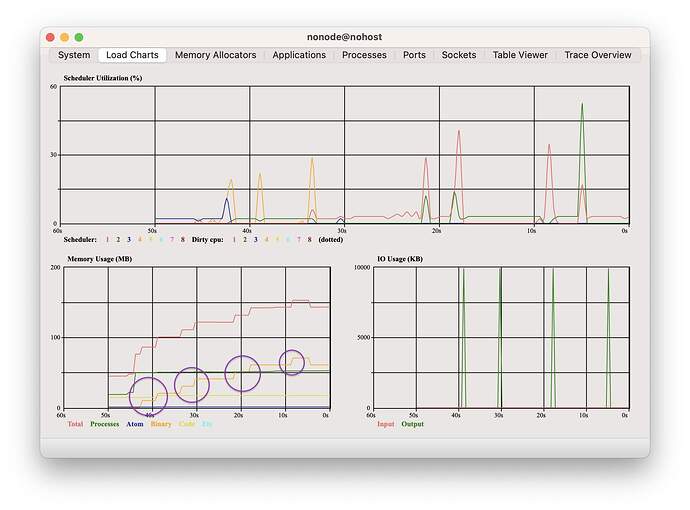
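To compare the two variants with a number rather than a screenshot, one option is to poll the VM's allocated memory while `run/0` executes. The sketch below is a hypothetical helper (`MemPeak` is not part of Mint or OTP) that only relies on `:erlang.memory/1`; because it samples at a fixed interval, it can miss peaks shorter than that interval.

```elixir
defmodule MemPeak do
  # Hypothetical helper: runs `fun` while a linked process polls
  # :erlang.memory(type) every `interval_ms` ms, then returns
  # {fun_result, highest_value_seen_in_bytes}.
  def measure(fun, type \\ :total, interval_ms \\ 10) do
    parent = self()
    start = :erlang.memory(type)

    sampler = spawn_link(fn -> loop(parent, type, interval_ms, start) end)

    result = fun.()

    # Stop the sampler and collect the peak it observed.
    send(sampler, {:stop, parent})

    receive do
      {:peak, ^sampler, peak} -> {result, peak}
    end
  end

  defp loop(parent, type, interval_ms, peak) do
    receive do
      {:stop, ^parent} ->
        send(parent, {:peak, self(), peak})
    after
      interval_ms ->
        loop(parent, type, interval_ms, max(peak, :erlang.memory(type)))
    end
  end
end
```

Running `MemPeak.measure(&SslTest.run/0, :binary)` once with `chunks = [body]` and once with the `chunk_binary` line uncommented would give two comparable peak values for binary memory.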

If you uncomment the line that calls `chunk_binary`, so the 10 MiB body is sent in 10 KiB chunks rather than with a single `:ssl.send/2` call, the memory spike disappears.
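For reference, a byte-oriented variant of `chunk_binary` can use binary pattern matching instead of `String.split_at/2`, which counts graphemes rather than bytes. `ByteChunker` and `send_in_chunks/3` are hypothetical names; this is a sketch of the chunked-send workaround described above, not an explanation of or fix for what `:ssl` does with a single large write.

```elixir
defmodule ByteChunker do
  # Hypothetical helper: splits a binary into slices of at most `size` bytes.
  def chunk(bin, size) when is_binary(bin) and is_integer(size) and size > 0 do
    do_chunk(bin, size, [])
  end

  # Last slice: whatever is left fits in one chunk.
  defp do_chunk(bin, size, acc) when byte_size(bin) <= size do
    Enum.reverse([bin | acc])
  end

  # Peel off exactly `size` bytes; `slice` and `rest` are sub-binaries
  # pointing into the original body, so nothing is copied here.
  defp do_chunk(bin, size, acc) do
    <<slice::binary-size(size), rest::binary>> = bin
    do_chunk(rest, size, [slice | acc])
  end

  # Issues one :ssl.send/2 per slice, like the chunked variant of SslTest.
  def send_in_chunks(socket, bin, size) do
    bin
    |> chunk(size)
    |> Enum.each(fn slice -> :ok = :ssl.send(socket, slice) end)
  end
end
```

In `SslTest.run/0` this would replace the `for` loop with `ByteChunker.send_in_chunks(socket, body, 1024 * 10)`. Since the slices are sub-binaries, the chunking step itself should not duplicate the 10 MiB payload.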