Thanks for the suggestion. I tried it but don’t see any difference.

I upgraded to Cowboy 2.7.0, but it’s still the same. I will give benchmarking the individual parts a try. Thanks for the response.

Doing some naive napkin math, 54266 / 260 * 919 / 1000 gives an extrapolated running time of 191.81 ms.

Looking at the code of the Slack controller, I also see that:

- Measurements are performed after the input is parsed.

- The output is roughly the same size as the input.

This would explain the remaining gap of roughly 2x between 192 ms and 349 ms. Based on this quick analysis, my suspicion is that you spend a lot of time returning the same output that you received as input. Consider dropping that part, since I see no reason why the client should get back the same thing they sent to the server. That should roughly halve the execution time.
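
Written out, the estimate is a linear extrapolation (assuming, as the napkin math does, that running time scales linearly with payload size), followed by the ratio between the measured and extrapolated times:

$$
t_{\text{est}} = \frac{54266}{260} \times \frac{919}{1000} \approx 191.81\ \text{ms},
\qquad
\frac{349\ \text{ms}}{191.81\ \text{ms}} \approx 1.82
$$

A ratio of about 1.82 is the "roughly 2x" gap that encoding an output of similar size would plausibly account for.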

If you could also relay that same JSON untouched to Slack, you could bring the running time down significantly, because you wouldn’t need to parse the JSON at all.

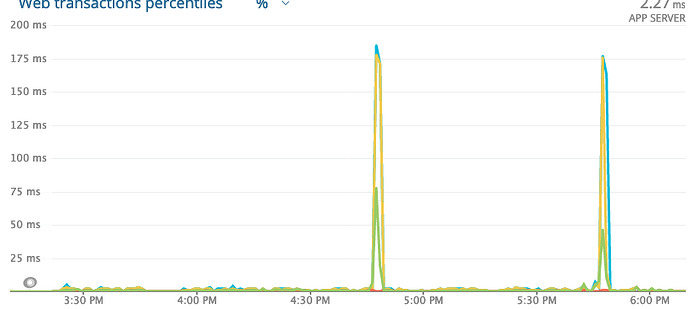

Are you running your Elixir app in Docker alongside all the other software in docker-compose.yml? I don’t know much about Docker, but all of those are running on the same host, right? If they are, the lag you are seeing could come from another app running side by side that causes a CPU spike, which in turn makes Elixir/Phoenix lag. For example, there is an open issue with Go about co-tenancy (https://github.com/golang/go/issues/17969), but it could just as well be another runtime whose GC is kicking in.

Thanks for the perspective, but the host is quite stable, and even when there is a spike in response time there is no spike in resource usage.

Thanks for the response. Yes, you are right: the response time spike is most likely due to the size of the body that is returned. Coming from the Ruby world, my initial suspicion was a P99 GC pause (hence the name of this thread), but I now agree it has to do with the response body. As this is my first experience with Elixir, returning a response with the same body as the request was meant to evaluate response rendering speed. If Jason’s performance really is like this, it looks like an Achilles heel, and performance seems much poorer than Ruby’s as well.

Ruby’s JSON is pure C, AFAIK, so of course C is faster than pure Elixir. Things like serializing from the DB/ActiveRecord are an entirely different story, though.

Perhaps try jiffy, which implements JSON encoding and decoding as C NIFs: https://github.com/davisp/jiffy

Even though individual requests might be slower than in Ruby, Elixir will usually sustain load much longer than Rails. A Rails application usually has only a couple of threads running, and if they are all busy serving requests, all other requests have to wait until a “worker” becomes free again.

Elixir applications usually perform better in this scenario, as each request is handled by a separate process on the BEAM. Under load you can usually use your CPUs quite fully while keeping response times stable up to that point.
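
To make the worker-pool argument concrete, here is a toy Python model (not Rails or BEAM code): six requests arrive at once, each needing 100 ms that is mostly spent waiting on I/O. With a fixed pool of two workers, later requests queue; with one lightweight process per request, each finishes after its own 100 ms. The I/O-bound assumption matters: for CPU-bound work the cores themselves become the bottleneck.

```python
import heapq
from typing import List


def pool_latencies(service_ms: List[float], workers: int) -> List[float]:
    """Latency of each request when all arrive at t=0 and a fixed-size
    worker pool serves them in FIFO order (a Rails-style thread pool)."""
    free_at = [0.0] * workers  # time at which each worker becomes free
    heapq.heapify(free_at)
    latencies = []
    for s in service_ms:
        start = heapq.heappop(free_at)  # wait for the earliest free worker
        finish = start + s
        latencies.append(finish)        # arrival was t=0, so latency == finish time
        heapq.heappush(free_at, finish)
    return latencies


def per_process_latencies(service_ms: List[float]) -> List[float]:
    """One process per request; assumes requests mostly wait on I/O, so they
    do not contend for CPU and nobody queues behind a busy worker."""
    return list(service_ms)


if __name__ == "__main__":
    reqs = [100.0] * 6
    print(pool_latencies(reqs, workers=2))  # [100.0, 100.0, 200.0, 200.0, 300.0, 300.0]
    print(per_process_latencies(reqs))      # [100.0, 100.0, 100.0, 100.0, 100.0, 100.0]
```

The pool's tail latency grows with queue length even though no single request got slower, which is the effect described above.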

In your case you could skip JSON entirely by letting the API endpoint accept a normal form POST and keeping the JSON you send to Slack as a string. Also, why send the content back to the client? It already knows what it sent. Right now you JSON-decode and re-encode the same data; you could skip both by passing the Slack JSON as a string in the form and returning only a status, without a body, because the client already has that body.
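
A minimal Python sketch of that idea, with hypothetical names (`relay_to_slack`, the `payload` form field, and the `forward` callback are all assumptions, not part of the original app): the handler parses only the form encoding, treats the Slack JSON as opaque text, and answers with a status code only. In a real app, `forward` would be whatever HTTP client call posts to Slack.

```python
from typing import Callable, Tuple
from urllib.parse import parse_qs, urlencode


def relay_to_slack(form_body: str, forward: Callable[[str], None]) -> Tuple[int, str]:
    """Hypothetical handler: the client sends a regular form POST whose
    `payload` field (assumed name) holds the Slack JSON as a plain string."""
    fields = parse_qs(form_body)  # form decoding only; the JSON inside is never parsed
    payload = fields.get("payload", [None])[0]
    if payload is None:
        return 400, ""            # nothing to relay
    forward(payload)              # pass the JSON text through untouched: no decode/encode
    return 204, ""                # status only; the client already has the body it sent


if __name__ == "__main__":
    sent = []
    body = urlencode({"payload": '{"text": "deploy finished"}'})
    print(relay_to_slack(body, sent.append))  # (204, '')
    print(sent)                               # ['{"text": "deploy finished"}']
```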

As I explained above, this isn’t a real response design; it was used for evaluation, and it has proven to be a good test.

As @NobbZ said, there is a concurrent dimension to this story. Even if processing a single JSON document takes a long time, it won’t significantly affect the duration of other activities in the system (e.g. other requests), not even on a single core. I demonstrated this behaviour in this talk.
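
A toy Python model of why one long JSON job need not hurt other requests on a single core: a preemptive round-robin scheduler compared with run-to-completion. The BEAM preempts processes after a budget of reductions; the fixed `quantum` used here is a simplification, and work is measured in abstract units.

```python
from collections import deque
from typing import Dict


def run_to_completion(jobs: Dict[str, int]) -> Dict[str, int]:
    """Single core, no preemption: each job runs until done, in order."""
    t, finish = 0, {}
    for name, work in jobs.items():
        t += work
        finish[name] = t
    return finish


def round_robin(jobs: Dict[str, int], quantum: int) -> Dict[str, int]:
    """Single core, preemptive: each job runs at most `quantum` units,
    then goes to the back of the run queue."""
    queue = deque(jobs.items())
    t, finish = 0, {}
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        t += ran
        remaining -= ran
        if remaining:
            queue.append((name, remaining))  # preempted: requeue the rest of the work
        else:
            finish[name] = t
    return finish


if __name__ == "__main__":
    jobs = {"big_json": 1000, "req_a": 10, "req_b": 10}
    print(run_to_completion(jobs))        # {'big_json': 1000, 'req_a': 1010, 'req_b': 1020}
    print(round_robin(jobs, quantum=10))  # {'req_a': 20, 'req_b': 30, 'big_json': 1020}
```

With preemption the short requests finish at 20 and 30 instead of 1010 and 1020, while the long job still finishes at the same total time.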

Jiffy, mentioned by @outlog, can indeed help if you want to handle larger JSON documents quickly. I reached for it a few years ago for this precise reason, and it did the job. In some cases, when you control all communicating parts, switching from JSON to `:erlang.term_to_binary/1` and its counterpart `:erlang.binary_to_term/1` can boost performance even further. So there are definitely options, but it’s worth noting that the maximum speed of a sequential program is not the main focus of the BEAM. It will sometimes even sacrifice the speed of a single process to get other properties that are usually more important in a software/backend system, such as fault tolerance and stable latencies.
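
Python’s closest analogue to `:erlang.term_to_binary/1` is `pickle` (a swapped-in illustration, not the Erlang API): a runtime-native binary format that only makes sense when you control both ends, since `pickle.loads` is unsafe on untrusted input. The sketch round-trips the same made-up payload through `json` and `pickle` and times both with `timeit`. Which format wins depends on the data and the runtime, so measure rather than assume.

```python
import json
import pickle
import timeit
from typing import Any, Dict


def sample_payload(n: int = 1000) -> Dict[str, Any]:
    """Made-up payload, roughly shaped like a batch of chat messages."""
    return {
        "messages": [
            {"id": i, "user": f"user{i}", "text": "hello " * 10, "ts": 1_600_000_000 + i}
            for i in range(n)
        ]
    }


def roundtrip_times(data: Any, number: int = 50) -> Dict[str, float]:
    """Fastest of 5 runs, in total seconds for `number` encode+decode round trips per format."""
    cases = {
        "json": lambda: json.loads(json.dumps(data)),
        "pickle": lambda: pickle.loads(pickle.dumps(data)),  # only for trusted peers
    }
    return {
        name: min(timeit.repeat(fn, number=number, repeat=5))
        for name, fn in cases.items()
    }


if __name__ == "__main__":
    data = sample_payload()
    assert json.loads(json.dumps(data)) == data
    assert pickle.loads(pickle.dumps(data)) == data
    print(roundtrip_times(data))
```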

Finally, instead of using HTTP requests in production to benchmark JSON library performance, consider writing a repeatable local script using Benchee.

Parsing/decoding is usually the slowest part, and I bet that’s where you are spending most of the time, so you are not really testing Jason’s rendering speed there. Instead, create an API endpoint that takes no input and uses Jason to encode the same kind of data (as an Elixir map) that you found to be slow.
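
The same advice as a repeatable local script, sketched in Python with the standard library’s `json` and `timeit` standing in for Jason and Benchee (a language swap for illustration, not the Elixir APIs). Decoding and encoding are timed separately, so you can see which one dominates instead of folding both plus HTTP overhead into one number. The payload is synthetic; in practice you would load a captured request body from a file.

```python
import json
import timeit
from typing import Dict


def make_body(n: int = 2000) -> str:
    """Synthetic JSON request body (made up); swap in a captured real payload."""
    items = [{"id": i, "user": f"user{i}", "text": "lorem ipsum " * 5} for i in range(n)]
    return json.dumps({"items": items})


def bench_decode_encode(body: str, number: int = 20, repeat: int = 5) -> Dict[str, float]:
    """Per-call seconds for decode and encode, each taken from its fastest of `repeat` runs."""
    decoded = json.loads(body)  # decode once up front so encode is timed on its own
    results = {}
    for name, fn in (("decode", lambda: json.loads(body)), ("encode", lambda: json.dumps(decoded))):
        best = min(timeit.repeat(fn, number=number, repeat=repeat))
        results[name] = best / number
    return results


if __name__ == "__main__":
    body = make_body()
    for name, secs in bench_decode_encode(body).items():
        print(f"{name}: {secs * 1000:.3f} ms per call")
```

Taking the minimum of several repeats reduces noise from other activity on the machine, which is the same reason a dedicated tool like Benchee reports statistics over many runs.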

Thank you all for the insights; this has been a good learning topic for me as a newbie to Elixir. I really appreciate the community support and will really enjoy working with Elixir.

cheers