Hi Folks,

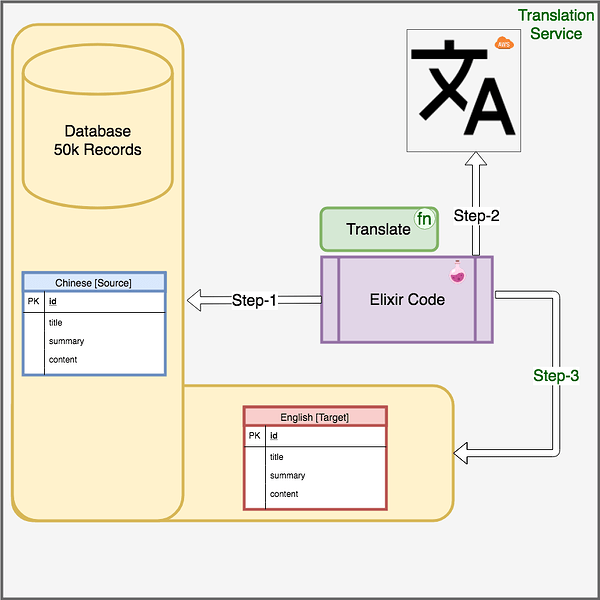

We have a translate function that converts Chinese text into English.

We need to convert about 50k to 60k records from Chinese to English with it.

For a single record, translating all of its columns to English takes between 5s and 20s.

We then store the result in another DB, which takes less than a second.
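To get a feel for the scale, here is a back-of-the-envelope sketch. It assumes every record takes the full 5s or 20s, that parallel workers add no overhead, and that AWS never throttles us, none of which is guaranteed. `estimate_hours` is a hypothetical helper, not part of any library.

```python
import math


def estimate_hours(records: int, seconds_per_record: float, workers: int = 1) -> float:
    """Wall-clock hours to process `records` items, assuming each one takes
    `seconds_per_record` and `workers` run fully in parallel with no overhead."""
    if records < 0 or seconds_per_record < 0 or workers < 1:
        raise ValueError("records/seconds must be >= 0 and workers >= 1")
    # Each worker handles ceil(records / workers) items one after another.
    per_worker = math.ceil(records / workers)
    return per_worker * seconds_per_record / 3600


if __name__ == "__main__":
    for workers in (1, 10, 50):
        best = estimate_hours(50_000, 5, workers)    # 50k records, 5s each
        worst = estimate_hours(60_000, 20, workers)  # 60k records, 20s each
        print(f"{workers:>3} workers: {best:.1f} h to {worst:.1f} h")
```

Run one record at a time, that works out to roughly 69 to 333 hours; with 10 records in flight it drops to roughly 7 to 33 hours, which is why concurrency seems necessary here.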

Step-1: Fetch record(s) from the DB.

Step-2: Run the translate function on the fetched record(s).

Step-3: Store the translation in another table.
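The three steps map onto a small batch loop. A minimal sketch, assuming Python with `sqlite3` standing in for the real databases; the tables `source_records`/`target_records`, their `title`/`body` columns, `translate_text` and `BATCH_SIZE` are all hypothetical names. Rows are fetched by `id` range (keyset pagination) rather than `OFFSET`, which generally keeps each query cheap as the job progresses.

```python
import sqlite3
from typing import Iterator

BATCH_SIZE = 100  # hypothetical; how many source rows to pull per query


def translate_text(text: str) -> str:
    """Hypothetical placeholder for the real AWS-backed translation."""
    return f"EN({text})"


def fetch_batches(conn: sqlite3.Connection, batch_size: int = BATCH_SIZE) -> Iterator[list]:
    """Step 1: yield source rows in id order, one batch at a time (keyset pagination)."""
    last_id = 0
    while True:
        rows = conn.execute(
            "SELECT id, title, body FROM source_records WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return
        yield rows
        last_id = rows[-1][0]


def translate_record(row: tuple) -> tuple:
    """Step 2: translate every text column of one record, keeping its id."""
    record_id, *columns = row
    return (record_id, *(translate_text(c) for c in columns))


def store(conn: sqlite3.Connection, rows: list) -> None:
    """Step 3: write one batch of translated rows and commit it."""
    with conn:
        conn.executemany("INSERT INTO target_records (id, title, body) VALUES (?, ?, ?)", rows)


def run(conn: sqlite3.Connection) -> None:
    """Fetch -> translate -> store, batch by batch."""
    for batch in fetch_batches(conn):
        store(conn, [translate_record(row) for row in batch])


def make_demo_db(n: int) -> sqlite3.Connection:
    """Build an in-memory DB with n fake Chinese records (demo only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE source_records (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
    conn.execute("CREATE TABLE target_records (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
    with conn:
        conn.executemany(
            "INSERT INTO source_records VALUES (?, ?, ?)",
            [(i, f"标题{i}", f"内容{i}") for i in range(1, n + 1)],
        )
    return conn


if __name__ == "__main__":
    conn = make_demo_db(250)
    run(conn)
    print(conn.execute("SELECT COUNT(*) FROM target_records").fetchone()[0])
```

On its own this is still fully sequential, so it would take as long as translating one record at a time.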

Translate function: it takes a record from the source table (Chinese), makes about 10-15 calls to AWS to generate English text for each column, and stores that data in the target table (English).

It takes about 5s to 20s per record.
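If those 10-15 AWS calls are independent of each other and currently run one after another, issuing them concurrently should bring a record's time closer to its slowest single call than to the sum of all calls. A minimal sketch with Python's `asyncio`; `call_translate_api` is a hypothetical stand-in that just sleeps, not a real AWS SDK call, and the column layout is made up.

```python
import asyncio
import time


async def call_translate_api(text: str) -> str:
    """Hypothetical stand-in for one AWS translation call; sleeps to mimic latency."""
    await asyncio.sleep(0.1)
    return f"EN({text})"


async def translate_record(record: dict[str, str]) -> dict[str, str]:
    """Translate all columns of one record concurrently rather than one by one."""
    columns = list(record)
    # gather returns results in the same order as the awaitables passed in
    results = await asyncio.gather(*(call_translate_api(record[c]) for c in columns))
    return dict(zip(columns, results))


if __name__ == "__main__":
    record = {f"col{i}": f"中文{i}" for i in range(12)}  # 12 made-up columns
    start = time.perf_counter()
    translated = asyncio.run(translate_record(record))
    print(translated["col0"], f"{time.perf_counter() - start:.2f}s")
```

With the 0.1s stub, 12 columns take about 0.1s instead of about 1.2s. If AWS starts throttling, the number of simultaneous calls will need a cap.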

How can we perform this translate operation on 50k DB records efficiently?
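Across records, one option is a fixed pool of workers, so that only N records are being translated at any moment. A minimal sketch, assuming the per-record work can be made `async`; `translate_record`, `process_all` and `MAX_CONCURRENCY` are hypothetical names. In Elixir, `Task.async_stream/3` with its `:max_concurrency` option plays a similar role.

```python
import asyncio

MAX_CONCURRENCY = 20  # hypothetical; tune to what AWS throttling allows


async def translate_record(record_id: int) -> str:
    """Hypothetical stand-in for the whole per-record translate function."""
    await asyncio.sleep(0.01)
    return f"translated-{record_id}"


async def process_all(
    record_ids: list[int], max_concurrency: int = MAX_CONCURRENCY
) -> tuple[dict[int, str], dict[int, Exception]]:
    """Translate every record with at most `max_concurrency` running at once.

    A fixed pool of workers pulls ids from a queue; a failing record is
    recorded instead of stopping the whole run.
    """
    queue = asyncio.Queue()
    for record_id in record_ids:
        queue.put_nowait(record_id)

    done: dict[int, str] = {}
    failed: dict[int, Exception] = {}

    async def worker() -> None:
        while True:
            try:
                record_id = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # queue drained, this worker is finished
            try:
                done[record_id] = await translate_record(record_id)
            except Exception as exc:  # keep going; these can be retried later
                failed[record_id] = exc

    await asyncio.gather(*(worker() for _ in range(max_concurrency)))
    return done, failed


if __name__ == "__main__":
    done, failed = asyncio.run(process_all(list(range(1, 1001))))
    print(len(done), len(failed))
```

With the 0.01s stub, 1,000 records at 20 at a time should finish in roughly half a second rather than about 10s. All ids are queued up front, so a worker seeing an empty queue can safely stop.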

We have a few concerns:

- What if the program fails at, say, record 10k? We don’t want to re-translate those 10k records again. Where should we do the bookkeeping: in the DB itself (on the target table)?
- How can we perform this task efficiently, i.e. concurrently?
- What’s a good way to approach this task? Do we need to use a GenServer?
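On the bookkeeping question, one common approach is to let the target table itself act as the checkpoint: a record counts as done once its translated row is committed, and a restart only picks up source rows that have no target row yet. A minimal sketch, assuming Python's `sqlite3` as a stand-in and hypothetical `source_records`/`target_records` tables keyed by the same `id`; `ON CONFLICT(id) DO NOTHING` is SQLite/PostgreSQL upsert syntax and keeps a retried insert from failing.

```python
import sqlite3
from typing import Callable


def pending_batch(conn: sqlite3.Connection, batch_size: int) -> list:
    """Source rows with no translation yet; the target table doubles as the checkpoint."""
    return conn.execute(
        """
        SELECT s.id, s.body
        FROM source_records AS s
        LEFT JOIN target_records AS t ON t.id = s.id
        WHERE t.id IS NULL
        ORDER BY s.id
        LIMIT ?
        """,
        (batch_size,),
    ).fetchall()


def save(conn: sqlite3.Connection, record_id: int, english: str) -> None:
    """Commit each record on its own; saving the same id twice is a no-op."""
    with conn:
        conn.execute(
            "INSERT INTO target_records (id, body) VALUES (?, ?) ON CONFLICT(id) DO NOTHING",
            (record_id, english),
        )


def run(conn: sqlite3.Connection, translate: Callable[[str], str], batch_size: int = 100) -> None:
    """Translate pending rows until none remain; safe to restart after a crash."""
    while True:
        batch = pending_batch(conn, batch_size)
        if not batch:
            return
        for record_id, chinese in batch:
            save(conn, record_id, translate(chinese))


def make_demo_db(n: int) -> sqlite3.Connection:
    """In-memory source/target tables with n fake records (demo only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE source_records (id INTEGER PRIMARY KEY, body TEXT)")
    conn.execute("CREATE TABLE target_records (id INTEGER PRIMARY KEY, body TEXT)")
    with conn:
        conn.executemany(
            "INSERT INTO source_records VALUES (?, ?)",
            [(i, f"内容{i}") for i in range(1, n + 1)],
        )
    return conn


def count_translated(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM target_records").fetchone()[0]


if __name__ == "__main__":
    conn = make_demo_db(30)

    def crashing(text: str) -> str:
        if text == "内容10":
            raise RuntimeError("simulated crash at record 10")
        return f"EN({text})"

    try:
        run(conn, crashing)
    except RuntimeError:
        pass
    print(count_translated(conn))  # 9 records saved before the crash

    retried = []

    def working(text: str) -> str:
        retried.append(text)
        return f"EN({text})"

    run(conn, working)
    print(count_translated(conn), len(retried))  # 30 21: records 1-9 were not redone
```

If failures are caught per record instead of raised, a separate status or attempts column would be needed so the loop does not keep picking the same failing row. To combine this with concurrency, one option is to read the pending ids once at startup and hand them to a worker pool, so two workers never pick up the same row.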
