Hey, years late to the party, but I’ve been seeing a strange lag between the mix task and tests starting and can’t determine what is causing it! It is especially prominent in our Github Action CI.

The test suite takes 7 minutes(420s) , 190s running tests, 120 seconds unaccounted for! (The remainder is running test seeds & migrations)

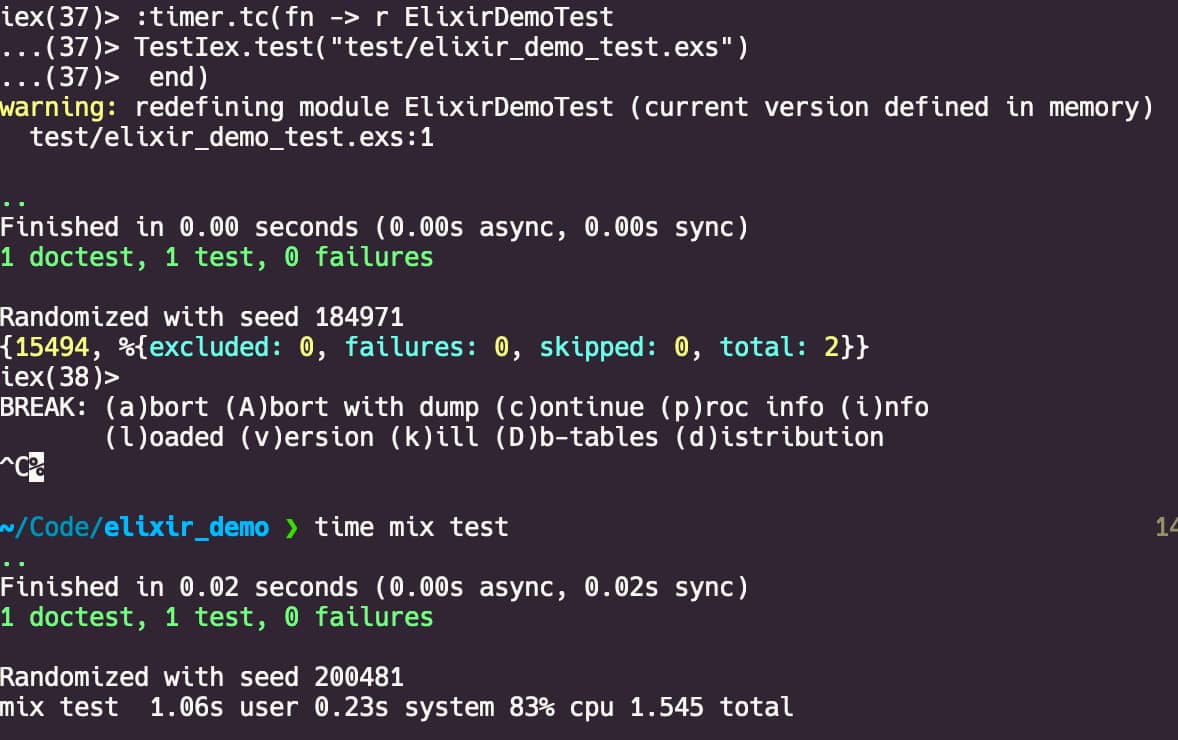

Running MIX_DEBUG=1 and after wrapping many things in :timer.tc I’ve narrowed it down, but can’t tell what the cause is.

The big problem is, once the mix test task is run, we don’t see any logging for 1:40s! Then we get 4 DB connection errors, then 20s more, and the tests start returning.

Context, all of our tests are concurrent ( ), there are 6K, we have 3 Repos running

), there are 6K, we have 3 Repos running SQLSandbox. I tried setting unique timeouts on all of our explicate checkout/2 statements, but it still returns the 120000ms default.

I have tons more data, if anything jumps out, please point and I will research!

Fri, 29 Mar 2024 14:28:56 GMT <- Ran mix ecto.migrate in 33ms

Fri, 29 Mar 2024 14:28:56 GMT -> Running mix run priv/repo/test_seeds.exs (inside Crowbar.Mixfile)

Fri, 29 Mar 2024 14:28:56 GMT -> Running mix app.start priv/repo/test_seeds.exs (inside Crowbar.Mixfile)

Fri, 29 Mar 2024 14:28:57 GMT warning: CrowbarWeb.Endpoint.init/2 is deprecated, …

Fri, 29 Mar 2024 14:28:57 GMT Warning: (phoenix 1.7.11) … a couple more of these

Fri, 29 Mar 2024 14:28:57 GMT <- Ran mix app.start in 1436ms

Fri, 29 Mar 2024 14:28:58 GMT <- Ran mix run in 1784ms

Fri, 29 Mar 2024 14:28:58 GMT -> Running mix run priv/repo2/seeds.exs (inside Crowbar.Mixfile)

Fri, 29 Mar 2024 14:29:17 GMT <- Ran mix run in 19371ms # This is bad, but not our biggest problem :face-palm:

Fri, 29 Mar 2024 14:29:17 GMT -> Running mix test --cover --partitions 2 --max-cases 4 (inside Crowbar.Mixfile)

Fri, 29 Mar 2024 14:30:57 GMT Error: -29T14:30:57.712 [error] Postgrex.Protocol (#PID<0.628.0>) disconnected: ** (DBConnection.ConnectionError) owner #PID<0.726.0> timed out because it owned the connection for longer than 120000ms (set via the :ownership_timeout option)

Fri, 29 Mar 2024 14:30:57 GMT … Exactly 3 more of this error ^ - Very suspicious, I think our code is causing this

Fri, 29 Mar 2024 14:31:19 GMT Excluding tags: [:skip]

Fri, 29 Mar 2024 14:31:32 GMT ................................ more tests complete

…

Finished in 195.1 seconds (190.2s async, 4.9s sync)

66 doctests, 12 properties, 2684 tests, 0 failures, 13 excluded

Randomized with seed 710739

----------------

Coverage data exported.

<- Ran mix test in 340632ms

<- Ran mix coveralls.lcov in 361175ms