Hi,

I have experience with both Python and Elixir. Other languages have greatly influenced me with their ideas. The BEAM VM and OTP inspire my architecture decisions. Their documentation is very proactive and helps me see problems from another perspective.

I have never worked with Pykka directly; I only ran into it while debugging code in a Python music player. That experience is not enough, so I will not speculate about it.

As I understand it, you have a server that collects telemetry data. This server also needs to display an interface for the user. It would be helpful if you could give more context about the task. For example:

- Do you need to aggregate data before showing it? If so, you can discard the raw data after aggregation.

- Do you need to keep the raw data? If so, you can run other aggregations on it later.

- How fast does the data come in?

- How do you plan to store this data?

- What should happen if the server crashes? Is it OK if it stops responding?

- Do you really have to implement both a desktop and a browser UI?

- Which web framework do you plan to use?

- Does the server fetch data from the devices, or do the devices send telemetry to the server?

- How much history do you plan to store? Do you have enough disk space for it (if everything will be on a single server)?

- Do you need separate permissions for different people? In other words, do you need to hide some data from specific users?

- Do you need to show the interface remotely, for example, will it be deployed to a public server? If so, it is better to protect it.

- How do you plan to scale the application if you end up with two servers instead of one?

I would split the task into three main parts: receiving the data, processing it, and presenting it.

Receive (devices send data to a server)

If I expected large amounts of data, I would probably store it raw before processing.

There are several ways to do this. For example, the data can be saved directly to a database (there are many options here), pushed to a queue (RabbitMQ, Redis, Kafka, etc.), or handled by some other solution.
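A minimal sketch of saving the raw data directly to a database, using Python's built-in `sqlite3` module so it runs anywhere. The `raw_telemetry` table, its columns, and the helper names are hypothetical; for high write volumes you would more likely pick one of the databases or queues mentioned above.

```python
import json
import sqlite3
import time


def open_raw_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a database with a single table for raw readings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_telemetry ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " device_id TEXT NOT NULL,"
        " received_at REAL NOT NULL,"
        " payload TEXT NOT NULL)"
    )
    return conn


def store_raw(conn: sqlite3.Connection, device_id: str, payload: dict) -> None:
    """Save the payload untouched; parsing and aggregation happen later."""
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT INTO raw_telemetry (device_id, received_at, payload) VALUES (?, ?, ?)",
            (device_id, time.time(), json.dumps(payload)),
        )


def load_raw(conn: sqlite3.Connection, device_id: str) -> list[dict]:
    """Read back the raw payloads of one device in arrival order."""
    rows = conn.execute(
        "SELECT payload FROM raw_telemetry WHERE device_id = ? ORDER BY id",
        (device_id,),
    )
    return [json.loads(payload) for (payload,) in rows]


conn = open_raw_store()
store_raw(conn, "sensor-1", {"temperature": 21.5})
store_raw(conn, "sensor-1", {"temperature": 21.7})
print(load_raw(conn, "sensor-1"))
```

Keeping the payload as an opaque JSON string means the receiving side never has to understand the data, which keeps it fast and lets you re-run different aggregations later.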

These endpoints should be as fast as possible so they can handle a lot of data. I would also load-test different solutions here. A web server with async support will possibly work fine.

We can compare this to a process mailbox in Elixir. A process receives many messages but handles them only when it gets processor time. So we are trying to emulate a mailbox here.
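A minimal sketch of that mailbox idea with `asyncio.Queue` from the standard library: the hypothetical `receive` handler only enqueues and returns, while a separate worker drains the queue at its own pace. The bounded `maxsize` is an assumption; it makes producers wait when the worker falls behind instead of letting memory grow without limit.

```python
import asyncio


async def receive(mailbox: asyncio.Queue, message: dict) -> None:
    # The "endpoint" does almost no work: it just drops the message in the mailbox.
    # With a bounded queue this waits if the mailbox is full (backpressure).
    await mailbox.put(message)


async def worker(mailbox: asyncio.Queue, processed: list) -> None:
    # Handles messages one at a time whenever it gets scheduled, like a BEAM process.
    while True:
        message = await mailbox.get()
        processed.append(message["value"] * 2)  # stand-in for real processing
        mailbox.task_done()


async def main() -> list:
    mailbox: asyncio.Queue = asyncio.Queue(maxsize=1000)
    processed: list = []
    consumer = asyncio.create_task(worker(mailbox, processed))

    # Simulate a burst of incoming messages from devices.
    await asyncio.gather(*(receive(mailbox, {"value": i}) for i in range(10)))

    await mailbox.join()  # wait until every message has been handled
    consumer.cancel()
    return processed


result = asyncio.run(main())
print(result)
```

In a real service `receive` would be called from an async web handler, and the queue could be replaced by an external broker once a single process is no longer enough.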

Receive (server polls devices for data)

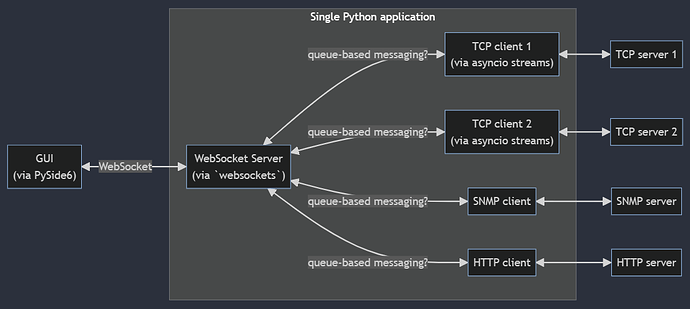

If the server fetches data from many devices over the network, this part should be as concurrent as possible. Running multiple Python processes introduces overhead here, so you will probably want to use threads or asyncio-based libraries. The idea is the same: fetch as much data as possible with as little overhead as possible.

You can compare this to spawning multiple processes in Elixir to fetch the data; here we emulate the same behaviour with Python's own features.
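A minimal asyncio sketch of polling many devices concurrently. `fetch_device` is a hypothetical stand-in that only sleeps to simulate network latency (and fails for some IDs); in a real system it would be a call through an async HTTP or device-protocol client. The semaphore caps how many requests are in flight, and failures are collected instead of aborting the whole poll.

```python
import asyncio
import random


async def fetch_device(device_id: str) -> dict:
    # Hypothetical device call: simulate network latency and an occasional failure.
    await asyncio.sleep(random.uniform(0.01, 0.05))
    if device_id.endswith("7"):
        raise ConnectionError(f"{device_id} did not respond")
    return {"device_id": device_id, "value": random.random()}


async def poll_all(device_ids: list[str], max_concurrency: int = 100) -> tuple[list, list]:
    limit = asyncio.Semaphore(max_concurrency)

    async def poll_one(device_id: str) -> dict:
        async with limit:  # at most max_concurrency fetches run at the same time
            return await asyncio.wait_for(fetch_device(device_id), timeout=1.0)

    # return_exceptions=True keeps one bad device from cancelling the others.
    results = await asyncio.gather(*(poll_one(d) for d in device_ids), return_exceptions=True)
    readings = [r for r in results if not isinstance(r, Exception)]
    errors = [r for r in results if isinstance(r, Exception)]
    return readings, errors


devices = [f"device-{i}" for i in range(20)]
readings, errors = asyncio.run(poll_all(devices, max_concurrency=5))
print(len(readings), "readings,", len(errors), "errors")  # 18 readings, 2 errors
```

All twenty requests run on one thread; waiting on the network costs almost nothing, which is why this tends to be cheaper than one OS process per device.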

Process the data

If you only need to show raw data, you can skip this step entirely.

If you need to process it somehow, you can look at something like Celery. I have also worked with Apache Airflow, but it may be overkill here. The basic idea is to take a chunk (batch) of data, process it, and store the results somewhere. The details depend greatly on the use case.
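To illustrate the "take a batch, process it, store the results" loop without tying it to Celery or Airflow, here is a plain-Python sketch. The reading format and the min/max/mean aggregates are assumptions, and `results_store` is a hypothetical stand-in for a results table; a function like `process_batch` could later be wrapped in whatever task runner you choose.

```python
from itertools import islice
from statistics import mean
from typing import Iterable, Iterator


def batches(items: Iterable, size: int) -> Iterator[list]:
    """Yield consecutive chunks of at most `size` items."""
    iterator = iter(items)
    while chunk := list(islice(iterator, size)):
        yield chunk


def process_batch(readings: list[dict]) -> dict[str, dict]:
    """Aggregate one batch of raw readings per device."""
    by_device: dict[str, list[float]] = {}
    for reading in readings:
        by_device.setdefault(reading["device_id"], []).append(reading["value"])
    return {
        device: {"min": min(values), "max": max(values), "mean": mean(values), "count": len(values)}
        for device, values in by_device.items()
    }


raw = [{"device_id": f"d{i % 2}", "value": float(i)} for i in range(10)]
results_store: list[dict] = []  # stand-in for a results table
for chunk in batches(raw, size=4):
    results_store.append(process_batch(chunk))

print(len(results_store))  # 3
print(results_store[0]["d0"])  # {'min': 0.0, 'max': 2.0, 'mean': 1.0, 'count': 2}
```

Because each batch is processed independently, the same function can be run by several workers in parallel once the volume requires it.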

Present data

It depends on the type and amount of data. You can possibly just generate pages with charts via Flask or Django templates. You can also simply refresh a page every N seconds instead of using websockets: refresh the whole page, only part of it, or fetch data from an API. There are examples of this approach with Stimulus, unpoly.js, and HTMX. I would prefer to have only one UI. If you really need a desktop application, there is possibly a way to show the same UI in a web view.
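As a sketch of the simplest option, reloading the whole page every N seconds, here is a function that renders an HTML page with the standard `<meta http-equiv="refresh">` tag. It uses only the standard library so it runs as-is; in practice the same markup would live in a Flask or Django template, and the `latest` readings dictionary is a hypothetical input.

```python
from html import escape


def render_dashboard(latest: dict[str, float], refresh_seconds: int = 10) -> str:
    """Render a full HTML page that the browser reloads every refresh_seconds."""
    rows = "\n".join(
        f"    <tr><td>{escape(device)}</td><td>{value:.2f}</td></tr>"
        for device, value in sorted(latest.items())
    )
    return f"""<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <!-- The browser re-requests this page periodically; no websockets needed. -->
  <meta http-equiv="refresh" content="{int(refresh_seconds)}">
  <title>Telemetry</title>
</head>
<body>
  <table>
    <tr><th>Device</th><th>Value</th></tr>
{rows}
  </table>
</body>
</html>"""


page = render_dashboard({"sensor-2": 3.14159, "sensor-1": 21.5}, refresh_seconds=5)
print(page)
```

Device names are escaped because they come from the devices, not from you.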

There is also Plotly Dash; I have used it to render dashboards. It has an open-source version as well.

General advice

I would recommend the book Designing Data-Intensive Applications. It gives a nice overview of trade-offs, architecture details, and approaches to working with data. It does not dive too deep into details, but it gives many ideas (at least it did for me). I enjoyed reading it (and looking at the nice pictures).

Another book with useful tips for overloaded systems is Erlang in Anger. It describes typical issues with overloaded systems on the BEAM and how to fight them, but its ideas can be applied in other languages too. In fact, you can use the actor model to design the system; it will just consist of operating system processes instead of BEAM VM ones.
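A minimal sketch of an actor built from an OS process with the standard `multiprocessing` module: the process owns its state, receives messages through a queue that acts as its mailbox, and handles them one at a time. The `counter_actor` name and the `("add", n)` / `("get", None)` message format are hypothetical. Unlike BEAM processes, OS processes are heavyweight, so you would run a handful of them rather than thousands.

```python
import multiprocessing as mp


def counter_actor(inbox, outbox) -> None:
    """An actor: private state, a mailbox, and one message handled at a time."""
    total = 0  # state lives only inside this process; nothing is shared
    while True:
        message = inbox.get()
        if message is None:  # sentinel: stop the actor
            break
        kind, payload = message
        if kind == "add":
            total += payload
        elif kind == "get":
            outbox.put(total)


def run_in_process() -> int:
    # The actor runs in a separate OS process; the queues are its only interface.
    inbox, outbox = mp.Queue(), mp.Queue()
    actor = mp.Process(target=counter_actor, args=(inbox, outbox))
    actor.start()
    for value in (1, 2, 3):
        inbox.put(("add", value))
    inbox.put(("get", None))
    result = outbox.get(timeout=5)
    inbox.put(None)  # ask the actor to stop
    actor.join()
    return result


if __name__ == "__main__":
    print(run_in_process())  # 6
```

The `__main__` guard matters here: on platforms that start child processes by re-importing the module, it keeps the child from launching actors of its own.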

I would also recommend not making the system too complicated; you will be grateful for it later. The simplest solution may well work fine for you. The more moving parts you have, the more of them you need to maintain.

I hope this helps, or at least gives you some ideas.