Currently, I would recommend https://fly.io/ . Their product is so good I honestly feel like I’m stealing from them. Their entire gig is deploying app servers close to the users and routing them to the closest one.

I was previously running on a dedicated cpu + 4GB ram vps and just recently migrated a small site to fly.io with 1x-shared-cpu + 1GB ram in multiple regions. And to ensure they were actually real, I put up a small site there over a year ago on the free tier…and it is still running.

So it’s more reliable, it’s faster for the users…and it’s roughly the same price.

Quite frankly, it’s really too good to be true, so hopefully, it stays that way.

So now that I’m at it, let me give a quick breakdown of what’s amazing and what’s sort-of meh.

The Amazing

Deployment

I dislike Docker. But the slickest thing Fly did was making sure no one actually has to run Docker locally. Each organization has its own very beefy builder machine. Running a deploy just means you have to upload the Docker context and they take care of the rest with the remote builder.

So 600 lines of Ansible have been replaced by a <100 line Dockerfile. Deploying from a slow connection is much easier because the context that needs to be uploaded to Fly is roughly 5MB, while a finished binary package was roughly 40MB.

Running a liveview app in multiple regions means it does a rolling deploy, which takes several minutes. Previously the deploys would incur about 30 seconds of downtime, but now there are zero-downtime deploys.

Proxy

The secret-sauce to fly’s awesomeness is their custom proxy.

During a rolling deploy, it pretty much ensures that there is no downtime as it re-balances any connections. If a region is down, it will re-direct any traffic to a different region.

For a Liveview application, this means when the websocket breaks, it will seamlessly re-connect to another region and the user will not notice.

Having built lots of crap, I can say it’s quite a feat of engineering to do this so seamlessly. It’s really quite impressive (and that’s coming from an old, grizzled, and grumpy person).

Performance

The shared-1x-cpu runs faster than dedicated cpu on other providers. And the VPS I was using was faster than DigitalOcean / AWS / etc.

For the most cpu intensive operation in the application:

| Machine                  | Time per operation (lower is better) |
|--------------------------|--------------------------------------|
| Laptop 8-core 16GB       | 131 µs                               |
| Fly 1x-shared-cpu 256MB  | 156 µs                               |
| VPS 1x-dedicated cpu 4GB | 165 µs                               |
| VPS shared cpu 1GB       | 174 µs                               |
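To put those numbers in relative terms, here is a small sketch that expresses each timing as a multiple of the laptop's. The timings are copied straight from the table; the function name `slowdown_vs` is just something made up for illustration.

```python
# Per-operation timings copied from the table above (microseconds).
timings_us = {
    "Laptop 8-core 16GB": 131,
    "Fly 1x-shared-cpu 256MB": 156,
    "VPS 1x-dedicated cpu 4GB": 165,
    "VPS shared cpu 1GB": 174,
}


def slowdown_vs(baseline: str, timings: dict[str, float]) -> dict[str, float]:
    """Return each machine's time as a multiple of the baseline machine's time."""
    base = timings[baseline]
    return {name: round(t / base, 2) for name, t in timings.items()}


relative = slowdown_vs("Laptop 8-core 16GB", timings_us)
for name, ratio in relative.items():
    print(f"{name}: {ratio:.2f}x")
```

By this measure the Fly shared CPU comes out about 19% slower than the laptop, the dedicated VPS about 26% slower, and the shared VPS about 33% slower.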

I do expect this to slow down over time as more people migrate to the platform and the VMs get more heavily used.

User perceived performance

For my application and user-base, the latency for liveview updates changed from 150ms to 50ms by running out of SJC, MIA, and AMS. I had already optimized all the client-side calls, so quite frankly, this is a ludicrous improvement in user performance. It almost gets to the level of, hey, this is as fast as running on localhost.

It’s absurd.

Free tier

There is a very generous free tier (see Fly.io Resource Pricing in the Fly Docs) and a pretty straightforward developer experience with their CLI tool.

Elixir support

@chrismccord (creator of LiveView) works there. They also have entire sections of docs dedicated to Elixir.

The meh

Autoscale tuning

It’s pretty tough to tune autoscale for Liveview applications as the connections are mostly idle websockets. There is a soft-limit and hard-limit for the number of connections per app server and the proxy starts re-routing to slower regions when an app server hits the soft-limit. This certainly makes sense from an uptime and a pricing perspective, but for Liveview, these users who were supposed to connect in Seattle might be going across the country to NY for the same page.
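The soft-limit behaviour described above can be modelled roughly like this. It is a simplified sketch and not Fly's actual routing algorithm: the `AppServer` type, the latency figures, the hard limit of 200, and the rule "closest server under its soft limit, otherwise closest under its hard limit" are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AppServer:
    region: str
    latency_ms: float   # hypothetical round-trip time from this user
    connections: int    # current concurrent connections (mostly idle websockets)
    soft_limit: int
    hard_limit: int


def pick_server(servers: list[AppServer]) -> Optional[AppServer]:
    """Pick the closest server under its soft limit, else the closest under its hard limit."""
    by_distance = sorted(servers, key=lambda s: s.latency_ms)
    for s in by_distance:
        if s.connections < s.soft_limit:
            return s
    for s in by_distance:
        if s.connections < s.hard_limit:
            return s
    return None  # every server is at its hard limit


# Same traffic, two different soft limits (all numbers are made up).
low = [AppServer("sea", 10, 20, soft_limit=20, hard_limit=200),
       AppServer("ewr", 70, 5, soft_limit=20, hard_limit=200)]
high = [AppServer("sea", 10, 20, soft_limit=100, hard_limit=200),
        AppServer("ewr", 70, 5, soft_limit=100, hard_limit=200)]

print(pick_server(low).region)   # ewr: the nearby server already hit its soft limit
print(pick_server(high).region)  # sea: plenty of headroom, so the user stays local
```

With idle websockets counting toward the limit, a low soft limit fills up fast and the nearby user gets sent across the country even though the local server is barely doing any work.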

Initially, I experimented with setting a lower soft-limit to allow the autoscaling to kick in faster with 5 regions, 20 connections softlimit, and 512MB ram app server. However, requests were often re-routed to other regions as the app scaled up.

Finally decided to go with 3 regions, 100 connection softlimit, and 1GB ram which gives quite a bit of headroom, but also guarantees that the user will hit a fast server most of the time.

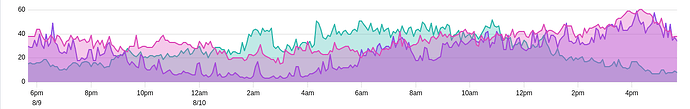

This is a graph of the concurrent connections per vm over the past 24 hours. Pretty neat to see how the traffic moves from region to region.

It seems to work and I’m sure fly will have more options in the future.

DNS staleness

Relying on the absolute accuracy of DNS is not recommended. They have an entire internal network based on wireguard and ipv6 which is super-duper nifty. But do not rely on dns records being immediately accurate. For instance, libcluster will query old hosts for a few minutes. This doesn’t cause me any issues, but not sure if others would have problems.
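If stale records would be a problem for you, one generic way to cope is to treat DNS answers as candidates and only keep the hosts that actually respond. The sketch below is not how libcluster works; `live_peers` and `tcp_probe` are hypothetical helpers, and the addresses in the example are placeholders.

```python
import socket
from typing import Callable, Iterable


def tcp_probe(host: str, port: int, timeout_s: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False


def live_peers(resolve: Callable[[], Iterable[str]],
               is_reachable: Callable[[str], bool]) -> list[str]:
    """Treat DNS answers as candidates only; keep the hosts that answer a probe."""
    return [host for host in resolve() if is_reachable(host)]


# Example with stand-ins for the resolver and the probe, so it runs offline.
dns_answers = ["fdaa::1", "fdaa::2", "fdaa::3"]   # placeholder addresses
alive = {"fdaa::1", "fdaa::3"}                    # pretend fdaa::2 is a stale record
print(live_peers(lambda: dns_answers, lambda h: h in alive))
```

In real use you would pass a resolver that queries the internal DNS and something like `lambda h: tcp_probe(h, port)` as the probe, with whatever port your nodes listen on.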

Proxy reliability and random footguns.

I did run into timeouts once, which could never be reproduced. Still, it feels more reliable than:

- Apigee - occasional timeouts and dealing with enterprise support that is…meh.

- AWS ELB - occasional downtime on deploys as failover happens

- Google Loadbalancer - generally frightening as they take down your entire business every two years.

Fly is really new, so we don’t have the years of data that we have on other vendors.

Also, make sure your restart_limit is not 0 in your fly.toml.

Database backups

Fly.io does not provide a managed database, though they are funding development on LiteFS, which is SQLite replication made by the author of Litestream. If you need point-in-time recovery, you may want to connect to a managed database somewhere else if you don’t want to set something up yourself.

I’ve had to set up a separate running host to take backups and forward logs, which will probably end up being part of the platform at some point in the distant future (scheduled jobs, etc.).

Metrics + logging

The dashboard also provides short-term logging and metrics in a reasonable fashion. Nothing spectacular, but passable.

Beta testing

It’s early and the product isn’t polished. You’re a beta tester. The product is not close to finished. However, it is no different than using any AWS product that is less than 2 years old…except that the product is actually usable. They are working on a new machines api that will replace their current one and allow them to scale to zero.

Their current product works fine though, just a little unpolished. For instance, they introduced multi-process apps, which don’t autoscale the same way as normal apps, and that’s only documented on the forums.

If you stick to the basics, everything should work fine.

Surprise billing

You can fix the number of servers and not use autoscale. At that point, the only surprise bill would be the bandwidth. And the billing for bandwidth is 2 cents / GB, not 10 cents / GB like AWS. And there’s a really generous free tier.
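To get a feel for what a bandwidth surprise would look like at those two rates, here is a back-of-the-envelope sketch. The 500 GB figure is a made-up example, and `free_gb` is a hypothetical parameter that defaults to 0 because the free allowance isn't stated here.

```python
def bandwidth_cost_usd(gb: float, cents_per_gb: float, free_gb: float = 0.0) -> float:
    """Cost in dollars for `gb` of outbound traffic, after subtracting any free allowance."""
    billable_gb = max(0.0, gb - free_gb)
    return billable_gb * cents_per_gb / 100


traffic_gb = 500  # hypothetical monthly traffic
print(f"at 2 cents/GB:  ${bandwidth_cost_usd(traffic_gb, 2):.2f}")
print(f"at 10 cents/GB: ${bandwidth_cost_usd(traffic_gb, 10):.2f}")
```

For that hypothetical 500 GB month, the difference is $10 versus $50, so even a bad month stays pretty tame at the lower rate.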

Remote builder/deploy flakiness

They supply the remote builder, but if it runs out of disk space (50 GB), you will have to destroy and restart.

Community forum.

They have a forum…which mostly gets overrun with questions about why X isn’t working, and that gives the misleading impression that the platform doesn’t work. Most questions involve the user not using ipv6 or trying to get fancy with Docker. On the plus side, they do have people responding, which is completely unlike other vendors. And the CEO @mrkurt responds on their forums with exemplary patience.

Conclusion

The amazing outweighs the meh by several orders of magnitude.

Like I said earlier, my deployment is now:

- faster

- more reliable

- and roughly the same price.

I’m still waiting for the other shoe to drop as it’s still pretty early for fly.io, but for right now, the price-to-value ratio is really unmatched as far as I can see.
