Awesome. Thanks!

The `Image.Text.text/2` function uses SVG to render text, which gives more control over the stroke, background color and so on. One disadvantage of SVG is exactly the use case you are after: automatically wrapping and sizing text to fit a bounding box.

I’ll add a new function, maybe Image.Text.wrapped_text/2 to leverage Vix.Vips.Operation.text/2 per @akash-akya’s example above.

I’ve looked at unifying these capabilities into a single function, but the two approaches differ too much, so I’ll need to make sure the documentation is clear.

It would be great if `Image.Options.Crop.crop_focus()` could have a focal point `{x_percent, y_percent}` too.

@tmjoen, libvips doesn’t have a corresponding option to do this that can be applied to the Image.thumbnail/2 function. And the Image.thumbnail/2 function does all sorts of clever things to optimise for speed and memory (for example, block reduce on open).

That means I can do what you ask (and it’s a logical ask), but the cropping pipeline won’t be as efficient as just using `Image.thumbnail/2`.

Basically I can improve Image.crop/2 to have the option to use percentage offsets rather than pixel offsets. Would that meet your use case?

Text is weird (and hates you), so it’s more on the “nice to have” side of things.

@dmitriid, just because you asked for it.

I added an `:autofit` option to `Image.Text.text/2`. You can try it from the main branch on GitHub for now; unless issues crop up, I’ll recheck the documentation and publish on Sunday. The changelog entry is:

Enhancements

- Add `:autofit` option to `Image.Text.text/2`. When set to `true`, text is rendered with `Vix.Vips.Operation.text/2`. The default, `false`, uses SVG rendering. There are pros and cons to both strategies. The main difference is that `autofit: true` will automatically size the text and perform line wrapping to fit the specified `:width` and `:height`. Thanks to @dmitriid for the suggestion!

Examples

# The base image

iex> {:ok, base_image} = Image.open("./test/support/images/Singapore-2016-09-5887.jpg")

# Text rendered in a 300x300 image, left aligned text, colored white

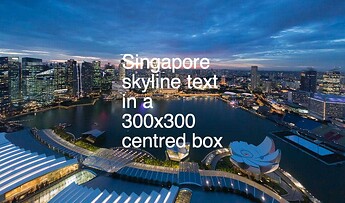

iex> {:ok, text} = Image.Text.text("Singapore skyline text in a 300x300 centred box",

...> height: 300, width: 300, autofit: true, text_fill_color: :white)

iex> {:ok, final_image} = Image.compose(base_image, text, x: :middle, y: :center)

# Text rendered in a 300x300 image, right aligned text, colored white

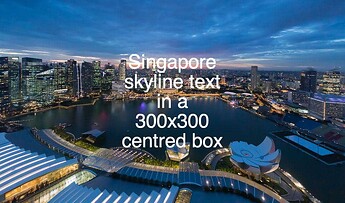

iex> {:ok, text} = Image.Text.text("Singapore skyline text in a 300x300 centred box",

...> height: 300, width: 300, autofit: true, text_fill_color: :white, justify: :right)

iex> {:ok, final_image} = Image.compose(base_image, text, x: :middle, y: :center)

# Text rendered in a 300x300 image, center aligned text, colored white

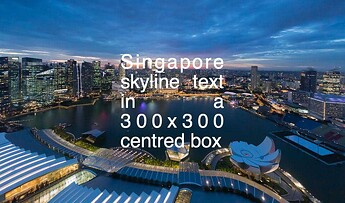

iex> {:ok, text} = Image.Text.text("Singapore skyline text in a 300x300 centred box",

...> height: 300, width: 300, autofit: true, text_fill_color: :white, justify: :center)

iex> {:ok, final_image} = Image.compose(base_image, text, x: :middle, y: :center)

# Text rendered in a 300x300 image, justified text, colored white

iex> {:ok, text} = Image.Text.text("Singapore skyline text in a 300x300 centred box",

...> height: 300, width: 300, autofit: true, text_fill_color: :white, justify: true)

iex> {:ok, final_image} = Image.compose(base_image, text, x: :middle, y: :center)

Oh. My. Glob!

This is amazing!

Thanks @kip, I think that would work nicely! Currently I’m calculating all this (probably poorly) myself and shelling out to sharp-cli for the conversion; I don’t believe that’s any faster.

Loving the library so far. I submitted a PR to get the tests working again, and I’d like to contribute some way to continually ensure the tests pass with strict equality.

I wonder if something like the Image Upscaling Playground (a Hugging Face Space by bookbot) could be incorporated?

@tmjoen, I’ve published Image version 0.16.0 with the following changelog entry. Hope that meets your requirements - of course ping me if not.

Enhancements

- Allow percentages to be specified as `Image.crop/5` parameters for left, top, width and height. Percentages are expressed as floats in the range -1.0 to 1.0 (for left and top) and greater than 0.0 and less than or equal to 1.0 (for width and height). Thanks to @tmjoen for the suggestion.
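To make the percentage ranges concrete, here is a minimal sketch in plain Elixir of how fractional crop parameters might map to pixel values. `PercentCrop` and `to_pixels/5` are hypothetical names, not part of Image, and treating a negative `left`/`top` as measured back from the right/bottom edge is an assumption, not something the changelog states.

```elixir
defmodule PercentCrop do
  @moduledoc """
  Hypothetical helper, not part of the Image library: converts fractional
  crop parameters into whole-pixel values for an image of a given size.
  Assumes a negative `left`/`top` is measured back from the right/bottom edge.
  """

  @doc """
  Returns `{left, top, width, height}` in pixels for an image that is
  `image_width` by `image_height` pixels. Offsets must be in -1.0..1.0 and
  sizes must be greater than 0.0 and at most 1.0.
  """
  def to_pixels({image_width, image_height}, left, top, width, height)
      when left >= -1.0 and left <= 1.0 and top >= -1.0 and top <= 1.0 and
             width > 0.0 and width <= 1.0 and height > 0.0 and height <= 1.0 do
    {
      offset(left, image_width),
      offset(top, image_height),
      round(image_width * width),
      round(image_height * height)
    }
  end

  # Non-negative fraction: distance from the left (or top) edge.
  defp offset(fraction, size) when fraction >= 0, do: round(size * fraction)

  # Negative fraction: distance back from the right (or bottom) edge (assumption).
  defp offset(fraction, size), do: size + round(size * fraction)
end
```

For a 100x40 image, `PercentCrop.to_pixels({100, 40}, 0.25, 0.25, 0.5, 0.5)` returns `{25, 10, 50, 20}`. A real implementation would also need to check that the resulting crop stays inside the image.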

Image processing would definitely benefit from a playground. Livebook smart cells would be awesome for that; it’s just a matter of time. I’ll open an issue on the repo, and collaboration and contributions would be warmly welcome! @cocoa is way ahead on this with evision, so I have some catching up to do.

There is “kino_vix” for Livebook, which automatically renders Vix images inline. It is a great idea but not fully working correctly at the moment.

Please do open an issue if it’s not working. I authored that very minor contribution so I’ll happily fix it too.

Image 0.18.0 has just been published with the primary new feature being integration with Bumblebee to provide image classification. Bumblebee is just amazing and I can’t wait to add other integrations. There have been requests for StableDiffusion integration so I’ll take a look at that next.

Example

```elixir
iex> Image.open!("./test/support/images/puppy.webp")
...> |> Image.Classification.classify()
%{predictions: [%{label: "Blenheim spaniel", score: 0.9701485633850098}]}

iex> Image.open!("./test/support/images/puppy.webp")
...> |> Image.Classification.labels()
["Blenheim spaniel"]
```
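`labels/1` returns just the label strings rather than the full prediction map. As a rough illustration only, assuming the `classify/1` result shape shown in the example, labels could be pulled out like this. `PredictionLabels` and its `min_score` parameter are hypothetical, and `Image.Classification.labels/1` may apply its own filtering.

```elixir
defmodule PredictionLabels do
  @moduledoc """
  Hypothetical helper, not part of the Image library: extracts label strings
  from a map shaped like `%{predictions: [%{label: ..., score: ...}]}`,
  keeping only predictions whose score meets a minimum threshold.
  """

  @doc "Returns the labels of predictions scoring at least `min_score`."
  def labels(%{predictions: predictions}, min_score \\ 0.0) do
    predictions
    # Drop low-confidence predictions.
    |> Enum.filter(fn %{score: score} -> score >= min_score end)
    # Keep only the human-readable label.
    |> Enum.map(fn %{label: label} -> label end)
  end
end
```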

Enhancements

Amazing work Kip!

Awesome!! Can’t wait!!

Looking forward to seeing what you add and thanks for all your work, I think all these features are going to interest a lot of people.

@AstonJ, StableDiffusion “image-to-image” mode is not yet available in Axon/Bumblebee, but it is being tracked. I’ll go ahead and add “text-to-image” for now (in the next couple of days).

Thanks for the update Kip, I look forward to seeing text-to-image …and image-to-image when/if it becomes available.

Nearly there with `Image.Generation.text_to_image/2` (it needs some more work on documentation before release). Huge amounts of fun with this. On my M1 Max it takes about a minute to generate an image, so a supported GPU is definitely better.

```elixir
iex> [i] = Image.Generation.text_to_image("impressionist purple numbat in the style of monet")
[%Vix.Vips.Image{ref: #Reference<0.1344162992.4105306142.245952>}]
```

Looking good Kip! I’m sure lots of fun will be had with it once it’s released!

Also I’m not sure whether you (or @seanmor5) are aware but Google extended Stable Diffusion and created DreamBooth. It’s open source and offers a way to tweak Stable Diffusion to produce variations of photos in different styles. It’s what Avatar AI uses and is exactly what I am looking for - it would be awesome if we could have this!

The project I would use it for is…

Drum roll...

Edit: screenshot snipped! I need to stop talking about potential projects because apparently when you share details of them it gives you the same sense of achievement of having completed them - so ends up working against you! Open to my arm being twisted tho! But it will take a lot of twisting, haha!

I’m in no major rush for it tho - if I start that project it will be when LV is at 1.0 (or when Chris thinks we’re unlikely to see major changes to it).

3 posts were split to a new topic: Elixir Deployment Options - GPU edition!