Hey, I am using Phoenix for a fairly small app. All it does is receive some input, start a bunch of processes per entity under a DynamicSupervisor, and stop those processes again after a certain time.

We are not live yet, so the system is "idle" most of the time, except for a few times a week when the customer tests some things.

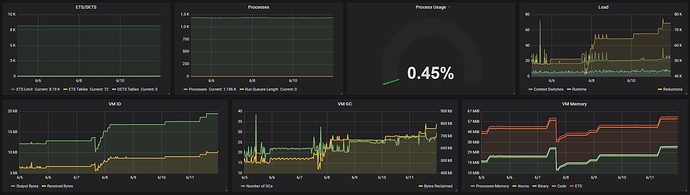

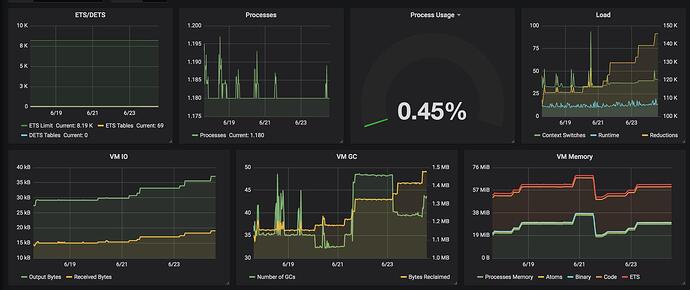

I am monitoring the application with Prometheus and noticed that resource usage increases over time. The screenshots show the BEAM dashboard for the last 7 days, and resource usage climbs steadily throughout. I redeployed once; that is where usage drops straight down.

Most of the memory growth is process memory. It cannot be my own processes, since they are cleaned up as soon as an entity is no longer "tracked".
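A quick way to back up that claim would be a minimal diagnostic sketch like the one below, run in a remote IEx shell attached to the live node. It prints the total process memory, the ten processes currently holding the most memory (with their mailbox length, since a growing message queue is a common culprit), and the child count of the tracking supervisor. `MyApp.TrackerSupervisor` is a hypothetical name standing in for whatever the DynamicSupervisor is actually registered as; if no process is registered under that name, the count is simply `nil`.

```elixir
# Minimal diagnostic sketch; run in a remote IEx shell on the live node.

# Total memory used by all Erlang processes, in bytes.
process_bytes = :erlang.memory(:processes)

# The ten processes currently holding the most memory.
top_processes =
  Process.list()
  |> Enum.map(fn pid ->
    {pid, Process.info(pid, [:memory, :registered_name, :initial_call, :message_queue_len])}
  end)
  # Process.info/2 returns nil if the process exited in the meantime.
  |> Enum.reject(fn {_pid, info} -> is_nil(info) end)
  # Sort descending by memory (&>=/2 works on older Elixir versions too).
  |> Enum.sort_by(fn {_pid, info} -> info[:memory] end, &>=/2)
  |> Enum.take(10)

# Hypothetical supervisor name; replace it with the real DynamicSupervisor.
tracker_sup = MyApp.TrackerSupervisor

# Only query the supervisor if something is registered under that name.
tracker_children =
  if Process.whereis(tracker_sup) do
    DynamicSupervisor.count_children(tracker_sup)
  end

IO.inspect(process_bytes, label: "process memory (bytes)")
IO.inspect(top_processes, label: "top processes by memory")
IO.inspect(tracker_children, label: "tracker supervisor children")
```

Running it once right after a deploy and again a few days later should show whether the growth sits in a handful of long-lived processes or is spread across many.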

What's weird is that IO, GC and load also increase.

While typing this, I had an idea of what it could be: the Prometheus metrics fill up over time, for example when bots hit URLs that don't exist. But the Prometheus package stores its data in ETS, and ETS memory stays at around 1.9 MB the whole time, while process memory grows from 12 MB to 33 MB.
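To rule the metrics theory in or out more precisely, a sketch like the following lists the ten largest ETS tables with their row counts. If a metrics table's row count keeps climbing as bots hit unknown URLs, that would show up here even while total ETS memory stays small. Note that `:ets.info/2` reports memory in machine words, so the sketch multiplies by the word size to get bytes.

```elixir
# Minimal sketch: the ten largest ETS tables by memory, with row counts.
word_size = :erlang.system_info(:wordsize)

ets_by_size =
  :ets.all()
  |> Enum.map(fn table ->
    {:ets.info(table, :name), :ets.info(table, :size), :ets.info(table, :memory)}
  end)
  # :ets.info/2 returns :undefined if the table was deleted in the meantime.
  |> Enum.filter(fn {_name, _rows, words} -> is_integer(words) end)
  # Convert memory from words to bytes.
  |> Enum.map(fn {name, rows, words} -> {name, rows, words * word_size} end)
  |> Enum.sort_by(fn {_name, _rows, bytes} -> bytes end, &>=/2)
  |> Enum.take(10)

IO.inspect(ets_by_size, label: "{table, rows, bytes}")
```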

I just noticed that process memory dropped to around 15 MB overnight (the screenshot is from yesterday), while all the other metrics stayed high.

I am really confused about what’s going on here.

Quick summary of my setup:

- Phoenix 1.4

- Using DynamicSupervisor to start and terminate “tracking” processes

- No real load on the system yet

- Hackney pool size of 1000

- prometheus_ex package for collecting metrics