I managed to compile XLA on the Jetson Nano with CUDA 10.2 from JetPack.

I’m compiling XLA 0.2 because the latest version (0.3) no longer supports this CUDA version.

This is how I manage the project’s dependencies:

```elixir
defp deps do
  [
    {:xla, "~> 0.2.0", runtime: false, app: false, override: true},
    {:exla, "~> 0.1.0-dev", github: "elixir-nx/nx", sparse: "exla", tag: "v0.1.0", app: false},
    {:nx, "~> 0.1.0-dev", github: "elixir-nx/nx", sparse: "nx", tag: "v0.1.0", override: true, app: false},
    {:axon, "~> 0.1.0-dev", github: "elixir-nx/axon", app: false},
    {:elixir_make, "~> 0.6", app: false, override: true},
    {:table_rex, "~> 3.1.1", app: false, override: true}
  ]
end
```

My config is:

```elixir
use Mix.Config

config :nx, :default_defn_options, [compiler: EXLA, client: :cuda]

config :exla, :clients, cuda: [platform: :cuda], default: [platform: :cuda]
```

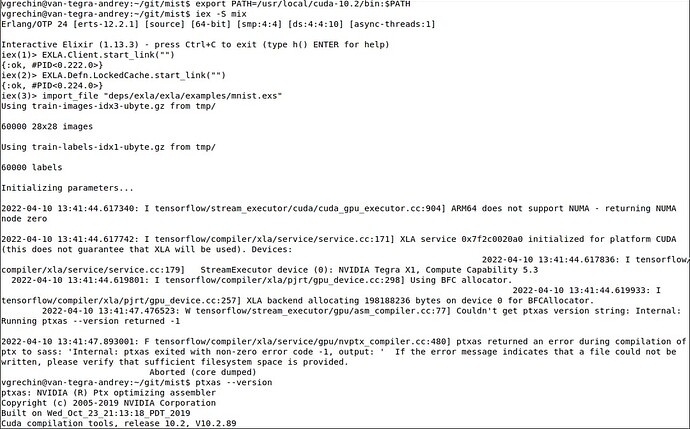

I’m trying to run the code from LambdaDays’21, but the MNIST training loop runs very slowly. It seems that neither CUDA nor EXLA is actually being used with this configuration.

I also tried setting `@default_defn_compiler EXLA` in Livebook, with the same result.

How can I check whether XLA, EXLA and Nx were built correctly?
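One rough check I can think of is a sketch only. It assumes the Nx 0.1.0 `Nx.Defn.jit/3` API (function, argument list, options) and the `:cuda` client from the config above. The idea is to time the same small computation under the default `Nx.Defn.Evaluator` and under EXLA. `time_ms` and `matmul` are hypothetical names used only for illustration. If EXLA is really in use, the second timing should be much lower. This only shows whether EXLA runs at all, though, not whether it runs on the GPU rather than the host.

```elixir
# Hypothetical helper (not part of Nx/EXLA): run a zero-arity fun, return elapsed ms.
time_ms = fn fun ->
  {micros, _result} = :timer.tc(fun)
  div(micros, 1000)
end

# Small input so the pure-Elixir evaluator finishes in reasonable time.
t = Nx.iota({200, 200}, type: {:f, 32})
matmul = fn x -> Nx.dot(x, x) end

# Default pure-Elixir compiler, as a baseline.
evaluator_ms = time_ms.(fn -> Nx.Defn.jit(matmul, [t], compiler: Nx.Defn.Evaluator) end)

# The first EXLA call includes XLA compilation, so warm up once before timing.
Nx.Defn.jit(matmul, [t], compiler: EXLA, client: :cuda)
exla_ms = time_ms.(fn -> Nx.Defn.jit(matmul, [t], compiler: EXLA, client: :cuda) end)

IO.puts("Evaluator: #{evaluator_ms} ms, EXLA (:cuda client): #{exla_ms} ms")
```

On the Jetson, watching GPU load with `tegrastats` while the EXLA call runs would be a complementary way to see whether CUDA is involved.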