Background

I am trying to find a consistent way of implementing the Functional Architecture described in a previous thread, Functional Architecture in Elixir.

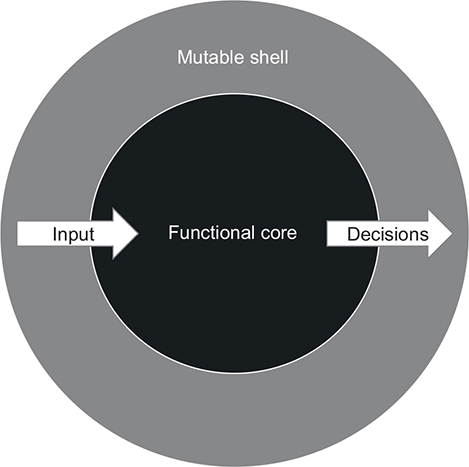

To summarize the post, that architecture can be understood with the following picture:

Some of you may also recognize this as "Functional core, imperative shell" from destroyallsoftware:

The way forward

Now, that post identifies some shortcomings of this type of architecture. This post aims to explore a possible solution.

Specifically, the Interpreter pattern, as described in this Ruby talk:

This pattern is built using another common pattern, the Composite pattern:

Encapsulation issues

Basically, this model encourages a purely functional core. The core never deals with external services; instead, each call to the core returns a tree of actions for the imperative shell to run. The imperative shell then runs those commands and invokes the next function on the functional core.
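A minimal sketch of that flow, in Python for brevity and under assumed names (`FetchFromDb`, `request_prices`, `total` and `shell` are all hypothetical, and a dict stands in for the database). The core only returns data describing what it needs; the shell performs the effect and then calls the next core function:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FetchFromDb:
    """Hypothetical action: describes a DB read but performs nothing."""
    key: str


# Functional core: pure functions that only return data.
def request_prices(cart_id):
    # A one-node "tree" of actions for the shell to run.
    return [FetchFromDb(cart_id)]


def total(prices):
    return sum(prices)


# Imperative shell: runs the actions, then invokes the next core function.
def shell(cart_id, db):
    actions = request_prices(cart_id)
    results = [db[action.key] for action in actions]  # the only side effect
    return total(results[0])
```

Note that `shell` already has to know that `total` comes after `request_prices`.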

To me, this already has a problem: breaking encapsulation.

Let’s say you have a set of actions in your functional core:

- P1: fetch data from DB

- P2: do calculations

- P3: fetch data from URL

- P4: perform evaluation

- P5: return result

Normally, under a well-designed API, an external user would call P1 and see only P5. A service calling our core would invoke P1 and wait for P5.
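As a sketch of what I mean by a well-designed API (Python, with made-up names and arithmetic; `quote`, `db` and `http_get` are assumptions for illustration only), the caller sees a single entry point and the intermediate steps stay hidden inside it:

```python
def quote(item, db, http_get):
    """Hypothetical public entry point: the caller never sees P2-P4."""
    price = db[item]            # P1: fetch data from DB (a dict stands in)
    subtotal = price * 2        # P2: do calculations
    rate = http_get(item)       # P3: fetch data from URL (injected callable)
    result = subtotal * rate    # P4: perform evaluation
    return result               # P5: return result


# The caller invokes one function and waits for the final value.
answer = quote("book", {"book": 10}, lambda item: 3)
```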

However, with this pattern, the service calling our core would have to:

- call P1

- wait for P1

- call P2

- wait for P2

- …

- call P4 and take P4’s result as final

This means that P2-P4 (implementation details) are now exposed: the service layer has to call them, because that is the only way to continue the computation. Since the core doesn't actually call anything, the external shell must know what to do next.
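Here is roughly how I picture that, as a Python sketch with invented names (`p1_db_query` and friends are hypothetical, and the tuples are just stand-ins for action descriptions). Every step has to be public, and the shell has to know their order:

```python
# Functional core: pure steps, all public because the shell calls each one.
def p1_db_query(item):
    return ("db_get", item)          # describes the DB read


def p2_calculate(price):
    return price * 2


def p3_url_request(item):
    return ("http_get", item)        # describes the HTTP call


def p4_evaluate(subtotal, rate):
    return subtotal * rate


# Imperative shell: performs the effects and sequences P1..P4 itself.
def shell_quote(item, db, http_get):
    _, key = p1_db_query(item)
    price = db[key]                  # run P1's action
    subtotal = p2_calculate(price)
    _, target = p3_url_request(item)
    rate = http_get(target)          # run P3's action
    return p4_evaluate(subtotal, rate)  # P4's result is taken as final
```

Reordering or renaming any of these steps in the core would mean changing `shell_quote` as well.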

This break in encapsulation means a bloated API and long-term maintenance problems.

Bloated APIs are a recipe for disaster.

Am I missing something?

I watched the full video and I am somewhat familiar with destroyallsoftware, having seen some of their talks as well. But this sacrifice one makes just for the sake of a functional core looks like a time bomb waiting to explode.

- Am I missing something?

- Have I misunderstood any concepts?

- What are your takes on this?