Hi,

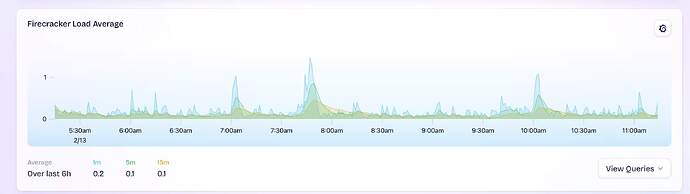

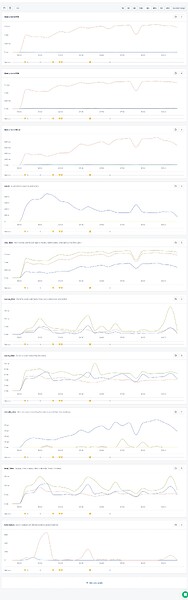

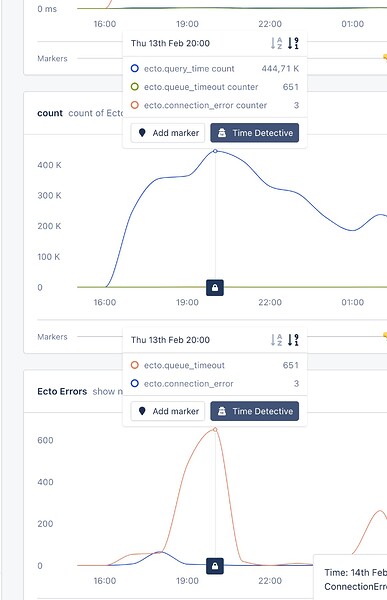

We have a regular Phoenix project using Ecto and Postgresql. But recently we are seeing a lot of connection timeouts or idle/closed connections. All looks like problem that DB pool is exhausted. Traffic has been pretty low, queries look ok, no big queries running all the time, no big Oban jobs. But I’m pretty sure we’re missing something.

So is it possible to debug which processes and code places are using the DB pool? Thanks to telemetry, we’re able to run code when these errors occur, but the question is whether it’s possible to get details about pool usage.

Telemetry handler:

def handle_event(

[:enaia, :repo, :query],

measurements,

%{result: {:error, %DBConnection.ConnectionError{} = error}} = metadata,

_config

) do

# check and log details about Pool usage

end

I can get repo’s child, but I’m not sure how to proceed.

repo_pid = Process.whereis(MyApp.Repo)

children = Supervisor.which_children(repo_pid)