Hello!

I would like to present my work from the last couple of weeks: JSV, an up-to-date JSON Schema validation library.

TL;DR: Repo link at the end of the post

History

I started to work on this when I needed to ensure that a bunch of JSON schemas were properly implemented. Validating a piece of data with a JSON schema is easy, but how can you know that your schema is correct? How do you validate the schema itself?

It turns out that the draft/2020-12 meta-schema, like its predecessors, is a schema that can validate other schemas, and it can validate itself.

I just needed a standard JSON schema validator that could follow all the rules of that specification.

So I started to work on that. I thought it would be a quick job with the help of the JSON Schema Test Suite. Just generate all the tests and make sure they are all green!

Oh boy! That specification is something… I could quickly write the main part of all the rules, but there were a lot of cases that required special handling all along the validation process.

Soon enough I had something that would work for my use case, but I was hooked. I wanted to implement the whole spec as a personal challenge, cycling between "this is so much fun" and "argh! why is it so convoluted!? let's rewrite everything again…"

And once it was done I thought I could share that with the community, because in the end it works pretty well.

Quick overview

The library works in two parts: first the “build”, which converts schemas into a more workable data structure, and then the “validation”.

Validation is straightforward once the build is correct. It works like in any other library: take the data, apply a validation function, and return an ok/error tuple. So basically, with a schema like {"type": "integer"}, the code boils down to a tiny wrapper around is_integer/1.
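To make that concrete, here is a minimal sketch of what such a wrapper could look like. The module name and the shape of the error tuple are hypothetical and are not taken from the library, and the sketch ignores floats with a zero fractional part, which the spec also accepts as integers.

```elixir
defmodule TypeIntegerSketch do
  # Hypothetical validator for {"type": "integer"}; not JSV's actual code.
  # Returns the data in an ok tuple when it is an integer.
  def validate(data) when is_integer(data), do: {:ok, data}

  # Anything else is reported in an error tuple (shape chosen for illustration).
  def validate(data), do: {:error, {:type, :integer, data}}
end

IO.inspect(TypeIntegerSketch.validate(42))
IO.inspect(TypeIntegerSketch.validate("42"))
```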

The build took a lot more time to write, especially the handling of $dynamicRef and its $dynamicAnchor counterpart. I understand why the spec is designed that way, but honestly I am not sure the JSON Schema group is going in the right direction. The unevaluatedProperties and unevaluatedItems keywords were a huge headache too.

Also, there is now the concept of vocabularies, which JSV follows. The capabilities of a JSON schema are defined by the vocabularies declared in its meta-schema. For instance, with this schema:

{"$schema": "https://example.com/meta-schema", "type": "integer"}

The “type” keyword will only work if the https://example.com/meta-schema resource declares a $vocabulary that the implementation knows.
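As a rough illustration of that check: in draft 2020-12, $vocabulary is an object that maps vocabulary URIs to booleans, where true marks a vocabulary as required. The sketch below assumes a hypothetical module and a made-up list of known vocabularies; it is not how JSV is implemented.

```elixir
defmodule VocabularySketch do
  # Illustrative subset of vocabulary URIs this imaginary implementation supports.
  @known [
    "https://json-schema.org/draft/2020-12/vocab/core",
    "https://json-schema.org/draft/2020-12/vocab/applicator",
    "https://json-schema.org/draft/2020-12/vocab/validation"
  ]

  # Returns :ok when every required vocabulary is known, an error tuple otherwise.
  def check(meta_schema) do
    vocabulary = Map.get(meta_schema, "$vocabulary", %{})

    # Only entries whose value is `true` (required) are considered here.
    unknown = for {uri, true} <- vocabulary, uri not in @known, do: uri

    case Enum.sort(unknown) do
      [] -> :ok
      uris -> {:error, {:unknown_vocabularies, uris}}
    end
  end
end

# Hypothetical meta-schema with one known, one unknown and one optional vocabulary.
meta_schema = %{
  "$id" => "https://example.com/meta-schema",
  "$vocabulary" => %{
    "https://json-schema.org/draft/2020-12/vocab/core" => true,
    "https://example.com/vocab/custom" => true,
    "https://example.com/vocab/optional" => false
  }
}

IO.inspect(VocabularySketch.check(meta_schema))
```

The unknown vocabulary marked false is ignored, while the unknown one marked true makes the check fail.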

For now, JSV only knows about the vocabulary in https://json-schema.org/draft/2020-12/schema, with special fallbacks for draft-7. Future versions of the library will add support for custom vocabularies.

So, to build a schema JSV will:

- Fetch the meta-schema and check the vocabulary to pick what validator implementations it will use.

- Resolve the schema, meaning it will download all the references, recursively.

- Build all the validators of all those schemas (not the meta-schema). This will lead to some duplication but it’s fast and it can easily be done at compile-time.

- Finally extract all the used validators, including anchors and dynamic anchors and wrap all of this into a “root” schema, an internal representation of the original JSON schema.
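The "recursively" in the resolve step is the part that needs care, since schemas can reference each other in a cycle. Here is a small sketch of that idea only, under strong assumptions: every $ref is an absolute URL without a fragment, any "$ref" key found in the document is treated as a reference, and the fetch function is supplied by the caller. The module and function names are hypothetical.

```elixir
defmodule ResolveSketch do
  # Follows `$ref` recursively from `url` and returns the set of visited URLs.
  # `fetch` is a caller-supplied function: url -> {:ok, schema} | :error.
  def resolve(url, fetch, seen \\ MapSet.new()) do
    if MapSet.member?(seen, url) do
      # Already visited: stop here so circular references terminate.
      seen
    else
      {:ok, schema} = fetch.(url)

      schema
      |> refs()
      |> Enum.reduce(MapSet.put(seen, url), fn ref, acc -> resolve(ref, fetch, acc) end)
    end
  end

  # Collects the `$ref` strings found anywhere in a decoded schema.
  defp refs(%{} = map) do
    Enum.flat_map(map, fn
      {"$ref", ref} when is_binary(ref) -> [ref]
      {_key, value} -> refs(value)
    end)
  end

  defp refs(list) when is_list(list), do: Enum.flat_map(list, &refs/1)
  defp refs(_other), do: []
end

# In-memory stand-in for the network: two schemas that reference each other.
store = %{
  "https://example.com/a" => %{"properties" => %{"b" => %{"$ref" => "https://example.com/b"}}},
  "https://example.com/b" => %{"items" => %{"$ref" => "https://example.com/a"}}
}

fetch = fn url -> Map.fetch(store, url) end
visited = ResolveSketch.resolve("https://example.com/a", fetch)
IO.inspect(Enum.sort(visited))
```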

What is supported

- All features from draft 2020-12 except content validation.

- All features from draft 7 except content validation.

- Custom vocabularies are not (yet) supported.

- Format validation: a default implementation is provided.

- Custom format validation.

- Compile-time builds.

- Custom resolvers.

Other drafts are not supported, notably draft 2019-09. Draft 4 will work if you bother changing all the id to $id.

A note on resolvers

If you read carefully, you probably winced when I wrote that the library will download all meta-schemas and referenced schemas recursively. That is indeed not quite true: for security reasons, the library will not fetch anything from the web on its own. You can write your own resolver or use the built-in one with a whitelist of URL prefixes. In any case, you will have to explicitly declare a resolver to do so.
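The idea behind a prefix whitelist can be sketched in a few lines. This is only an illustration with a hypothetical module name and error shape, not the built-in resolver's code.

```elixir
defmodule PrefixGuardSketch do
  # Returns :ok when `url` starts with one of the allowed prefixes.
  def check(url, allowed_prefixes) when is_binary(url) and is_list(allowed_prefixes) do
    # String.starts_with?/2 accepts a list and matches any of its prefixes.
    if String.starts_with?(url, allowed_prefixes) do
      :ok
    else
      {:error, {:restricted_url, url}}
    end
  end
end

allowed = ["https://json-schema.org/"]

IO.inspect(PrefixGuardSketch.check("https://json-schema.org/draft/2020-12/schema", allowed))
IO.inspect(PrefixGuardSketch.check("https://json-schema.org.example.com/schema", allowed))
```

Since this is a plain string comparison, the trailing slash in the prefix matters: without it, the second URL above would be accepted.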

This is well explained in the README. At least I hope so.

Basic usage

This is just a copy-pasta from the README:

    # 1. Define a schema
    schema = %{
      type: :object,
      properties: %{
        name: %{type: :string}
      },
      required: [:name]
    }

    # 2. Define a resolver
    resolver = {JSV.Resolver.BuiltIn, allowed_prefixes: ["https://json-schema.org/"]}

    # 3. Build the schema
    root = JSV.build!(schema, resolver: resolver)

    # 4. Validate the data
    case JSV.validate(%{"name" => "Alice"}, root) do
      {:ok, data} ->
        {:ok, data}

      {:error, validation_error} ->
        # Errors can be casted as JSON validator output to return them
        # to the producer of the invalid data
        {:error, JSON.encode!(JSV.normalize_error(validation_error))}
    end

Implementation notes

- For the validation of email addresses, the mail_address library can be pulled in, optionally. It seems to work well.

- For other formats such as uri, uri-reference, iri, iri-reference, uri-template, json-pointer and relative-json-pointer, I used the abnf_parsec library. It is optional as well. Fallback support for uri and uri-reference is provided. I am not satisfied with this implementation so far. First, because the official RFCs that the JSON Schema Specification points to give the ABNF grammars in a way that makes you doubt your copy-and-paste skills. Second, because I have some false negatives. I will need to study ABNF a little bit more. Thumbs up to the author, that library saved me a lot of time.

- The built-in resolver requires a proper JSON implementation. If you are running Elixir 1.17 or below, you will need Jason or Poison. This is generally not a problem.

- I have not solved the float problem with bigints. The JSON Schema Specification allows treating 1000000000000000000000000.0 as an integer, but trunc(1000000000000000000000000.0) equals 999999999999999983222784. It is too late by the time the value enters my code: the JSON parser will already have converted that number to 1.0e24.
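The precision loss is easy to reproduce in plain Elixir, without any JSON parser involved. The variable names below are only for illustration.

```elixir
# The float literal is stored as the nearest 64-bit double.
float = 1000000000000000000000000.0

# The integer the JSON document author presumably meant.
exact = 1_000_000_000_000_000_000_000_000

# Converting the double back to an integer does not recover the original digits.
truncated = trunc(float)

IO.inspect(truncated)
IO.inspect(truncated == exact)
IO.inspect(exact - truncated)
```

The last line shows how far off the truncated value is. Once the number has been parsed as a float, the original digits are gone, so the validator has no way to get them back.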

The future

This library is already useful to me. If I find that many of you use it, I think it will be worth making it even better. There are a couple of ways to do so:

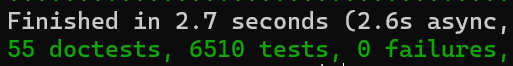

- Create some benchmarks to give people better information when choosing a library. I have not paid much attention to performance. So far it seems to be fast enough for my needs. The 5200 tests that build a schema and validate some data run in less than 3 seconds without concurrency.

- Support custom vocabularies and vocabulary overrides. I know this can be useful since I have been storing additional data in JSON schemas in several projects already. This will make it possible to implement content validation (contentMediaType, contentEncoding, contentSchema). I would also like the library to return errors or warnings if some keywords in the schema were not used during the build.

- Implement a test suite for Bowtie, though I am not sure it is widely used. I only found out about it the other day.

- Write more documentation. The API docs need some work. A guide for custom vocabularies will be needed because, unfortunately, one has to understand the internals of the library in order to build a vocabulary that will report errors correctly.

- Support for deserialization into Elixir structs.

Use it today

Thank you for reading!

If you would like to give it a try, I’d be glad to get some feedback from you!

Happy new year to all alchemists around here!