I asked three AI assistants the same question:

Out of these 3 languages, which are you trained on the best?

F#

Elixir

Clojure

Claude 3.7 Sonnet:

Out of F#, Elixir, and Clojure, I have the strongest knowledge of Clojure, followed by Elixir, and then F#.

Clojure is a Lisp dialect that runs on the JVM and has excellent support for functional programming and concurrency. I’m familiar with its syntax, core functions, immutable data structures, and its approach to state management.

For Elixir, I understand its functional programming model, pattern matching, processes, and the actor model it implements through the Erlang VM. I’m reasonably familiar with the Phoenix framework and how Elixir leverages OTP for building distributed systems.

My knowledge of F# is more limited compared to the other two. While I understand its basic syntax, type system, and functional programming concepts, I have less experience with its more advanced features and ecosystem.

If you’re interested in working with any of these languages, I’d be happy to help with specific examples or questions.

Copilot (Office Copilot):

I’m familiar with all three languages, but I don’t have a preference or specialization among them. Each language has its unique strengths:

- F#: Known for its functional-first approach and strong type system, making it great for data processing and financial modeling.

- Elixir: Built on the Erlang VM, it’s excellent for concurrent and distributed systems, often used in web development and real-time applications.

- Clojure: A Lisp dialect that runs on the JVM, it’s praised for its simplicity and powerful macro system, ideal for data manipulation and functional programming.

Do you have a specific project or task in mind that you’re considering one of these languages for?

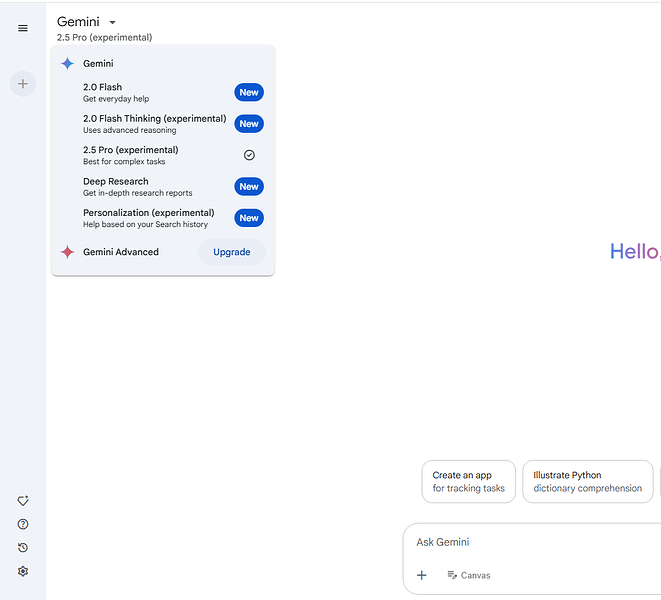

Gemini 2.5 Pro (experimental):

As a large language model, I don’t learn or get “better” at languages in the way a human does. My abilities are based on the vast amount of text and code data I was trained on. This data includes information about F#, Elixir, and Clojure from various sources like websites, books, and code repositories.

It’s difficult to definitively say which of the three I am “trained on the best” because:

- Data Volume: My training data is enormous, and while it contains information on all three, the exact proportion dedicated to each isn’t something I have access to. My proficiency likely reflects the general volume of high-quality public information and code available for each language.

- Quality of Data: The quality and diversity of the training examples (code snippets, documentation, discussions) also play a crucial role.

However, based on general trends in language usage and available online resources:

- F#: Benefits from its strong ties to the well-documented and widely used .NET ecosystem.

- Elixir: Has a very active community, particularly around the Phoenix framework, leading to substantial online resources and discussion.

- Clojure: While perhaps having a smaller user base than the others in some metrics, it has a dedicated community and a significant body of work, especially given its Lisp heritage and JVM/CLJS platforms.

Conclusion:

I have been trained on substantial data for all three languages (F#, Elixir, and Clojure) and should be able to assist you effectively with tasks related to any of them, such as explaining concepts, generating code snippets, debugging, or discussing best practices.

My performance on a specific task might vary slightly depending on the complexity and the amount of relevant data I encountered during training, but I don’t have an inherent bias or significantly better capability in one over the others in a general sense.

Feel free to ask me questions about any of them!