How to restrict memory usage on EXLA?

This is my GPU memory usage before loading EXLA:

    nvidia-smi
    Sun Sep  3 13:13:11 2023
    +---------------------------------------------------------------------------------------+
    | NVIDIA-SMI 535.104.05             Driver Version: 535.104.05   CUDA Version: 12.2     |
    |-----------------------------------------+----------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
    |                                         |                      |               MIG M. |
    |=========================================+======================+======================|
    |   0  NVIDIA GeForce RTX 3060        On  | 00000000:01:00.0  On |                  N/A |
    | 45%   53C    P5              30W / 170W |    398MiB / 12288MiB |      0%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+

    +---------------------------------------------------------------------------------------+
    | Processes:                                                                            |
    |  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
    |        ID   ID                                                             Usage      |
    |=======================================================================================|
    |    0   N/A  N/A      1914      G   /usr/lib/xorg/Xorg                          131MiB |
    |    0   N/A  N/A      2128      G   /usr/bin/gnome-shell                         63MiB |
    |    0   N/A  N/A      7334      G   ...irefox/3068/usr/lib/firefox/firefox      193MiB |
    +---------------------------------------------------------------------------------------+

And after loading it:

    ❯ nvidia-smi
    Sun Sep  3 13:18:13 2023
    +---------------------------------------------------------------------------------------+
    | NVIDIA-SMI 535.104.05             Driver Version: 535.104.05   CUDA Version: 12.2     |
    |-----------------------------------------+----------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
    |                                         |                      |               MIG M. |
    |=========================================+======================+======================|
    |   0  NVIDIA GeForce RTX 3060        On  | 00000000:01:00.0  On |                  N/A |
    |  0%   43C    P3              30W / 170W |  11446MiB / 12288MiB |      2%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+

    +---------------------------------------------------------------------------------------+
    | Processes:                                                                            |
    |  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
    |        ID   ID                                                             Usage      |
    |=======================================================================================|
    |    0   N/A  N/A      1914      G   /usr/lib/xorg/Xorg                          131MiB |
    |    0   N/A  N/A      2128      G   /usr/bin/gnome-shell                         65MiB |
    |    0   N/A  N/A      7334      G   ...irefox/3068/usr/lib/firefox/firefox      229MiB |
    |    0   N/A  N/A     15749      C   ...ang/25.2.1/erts-13.1.4/bin/beam.smp    11006MiB |
    +---------------------------------------------------------------------------------------+

As you can see, Firefox and gnome-shell went up a little, and the BEAM is trying to use the rest.

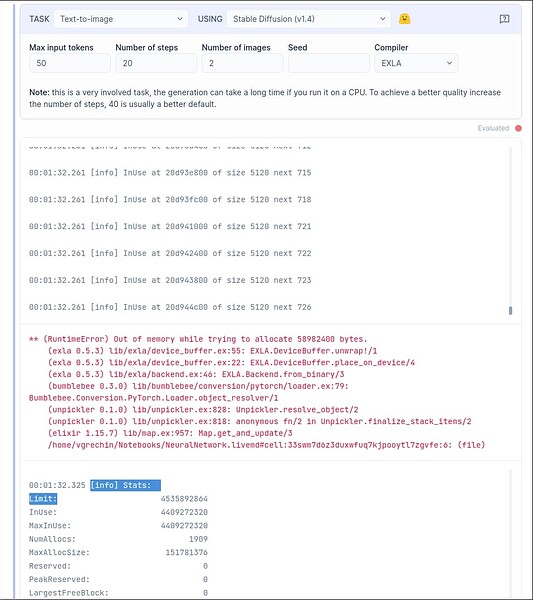

The issue is that it runs once, but if I re-evaluate, say to change the seed or the number of steps, I run out of memory:

    13:20:48.865 [info] Total bytes in pool: 11350867968 memory_limit_: 11350867968 available bytes: 0 curr_region_allocation_bytes_: 22701735936
    13:20:48.865 [info] Stats:
    Limit:            11350867968
    InUse:            11229623808
    MaxInUse:         11261113856
    NumAllocs:               6164
    MaxAllocSize:      3514198016
    Reserved:                   0
    PeakReserved:               0
    LargestFreeBlock:           0

    13:20:48.865 [warning] ****************************************************************************************************
    13:20:48.865 [error] Execution of replica 0 failed: RESOURCE_EXHAUSTED: Out of memory while trying to allocate 6553600 bytes.
    BufferAssignment OOM Debugging.
    BufferAssignment stats:
                 parameter allocation:    6.25MiB
                  constant allocation:         0B
            maybe_live_out allocation:    6.25MiB
         preallocated temp allocation:         0B
                     total allocation:   12.50MiB
                  total fragmentation:         0B (0.00%)
    Peak buffers:
        Buffer 1:
            Size: 6.25MiB
            Entry Parameter Subshape: f32[1638400]
            ==========================

        Buffer 2:
            Size: 6.25MiB
            XLA Label: copy
            Shape: f32[1280,1280]
            ==========================

        Buffer 3:
            Size: 8B
            XLA Label: tuple
            Shape: (f32[1280,1280])
            ==========================

So is there a way to limit the MaxInUse for XLA from the start? About 10 GB should be enough, right? It's trying to grab about 11 GB now.
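
For reference, the kind of setting being asked about might look like the sketch below. It assumes the `:memory_fraction` and `:preallocate` client options described in the `EXLA.Client` documentation are available in the installed EXLA version; the client name `cuda` and the value `0.8` are illustrative choices, not taken from the setup above.

```elixir
# config/config.exs
import Config

config :exla, :clients,
  cuda: [
    platform: :cuda,
    # Assumption: caps the share of GPU memory XLA reserves for its pool.
    # 0.8 is an arbitrary example value, to be tuned for the card.
    memory_fraction: 0.8
    # Alternative (assumption): `preallocate: false` asks XLA to allocate
    # on demand instead of reserving the pool up front.
  ]

# Make the client above the default one.
config :exla, default_client: :cuda
```

In a Livebook notebook the same keyword list can be passed through the `:config` option of `Mix.install/2`. Note that a lower cap only changes how much memory is reserved; it would not by itself free buffers that are still held from a previous evaluation.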