I use Gettext and ex_cldr for localisation, and would like to load a webfont in the appropriate charset based on the user’s locale (cyrillic, cyrillic-ext, devanagari, greek, greek-ext, latin, latin-ext, vietnamese, etc.; see for example arabic and google webfonts helper), rather than serving files that include all of them. Combined files can be 4x bigger, and they aren’t possible for all languages, some of which need their own font. Does ex_cldr have a built-in way to map a language to a charset? (I couldn’t find one, but may have missed it due to the number of libraries.)

@mayel that’s an interesting question! I don’t believe CLDR has that data built in, but I’ll check the links you posted and see what is possible. (I’m the ex_cldr author.)

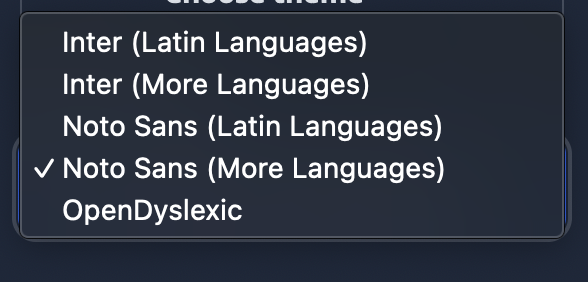

Thanks ![]() the approach I took temporarily is to have a dropdown like this:

the approach I took temporarily is to have a dropdown like this:

This controls which CSS file is included in the template, which in turn loads different fonts and different charsets. For example, if the user selects a font with “Noto Sans (More Languages)”, then noto-sans-more.css is used from https://github.com/bonfire-networks/bonfire-app/tree/main/assets/static/fonts

There’s two limitations with this I’d like to improve on:

- The user shouldn’t have to worry about manually changing the font charaset, this should instead follow their locale (so they just select a general font family)

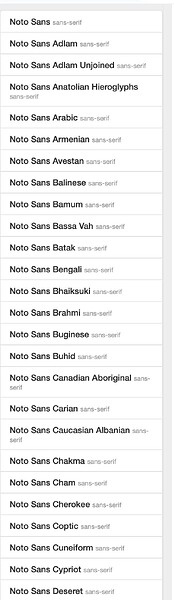

- Currently this only covers a few charsets, but I’d like to add support for more languages - see the seperate per-language fonts available - this screenshots only shows a few of them:

Having this kind of logic would also allow loading different fonts/charsets not only based on the user’s locale, but based on the language of a piece of text on the page (when dealing with user-generated content)…

You can get the script for any locale:

    iex> {:ok, locale} = Cldr.validate_locale("th")
    {:ok, #Cldr.LanguageTag<th [validated]>}
    iex> locale.script
    :Thai

Which is a starting point. I will add a function Cldr.Locale.script_from_locale/1 in the next release to make this more explicit.

Mapping the script to a font name may be out of scope for ex_cldr, but I’m curious, so I’ll do some more investigating too.

Ah that’s a good start thanks! If out of scope for ex_cldr I’ll likely package what we end up with into a library (though it will probably be opinionated, as in supporting specific font families).

After some more digging:

- I published ex_cldr version 2.31.0 which includes a new function `Cldr.Locale.script_from_locale/1` to return the script. For example `:Latn` or `:Thai` or `:Deva`.
- You can use the function `Cldr.Validity.known(:scripts)` to get the names of all the 167 scripts known to CLDR. This function parses data, so it’s only used at compile-time in `ex_cldr` to build functions. It’s not public API, so use at your own risk (it’s not going away however). `Cldr.Locale.language_data/0` returns a lot of data that can be used to map between languages, scripts and territories; it’s more complex data but more complete.
- Google Fonts is really sloppy in its terminology, using “charset” interchangeably with “subset” when it really means “script”. And it uses “language” when it means “script” too. Disappointing really, since Google is the largest sponsor of CLDR.
- CLDR does have mappings from language to script, so a lookup is possible and I’ll add a function to `ex_cldr` to make that easy. But there’s another problem that needs solving first …
- The language names used in Google Fonts are not canonical and have no alignment with ISO 639 language codes, making it difficult to map from `locale.script` or `locale.language` to the right font “subset”.

Hopefully this gives you something to go on. It’s an interesting and useful objective to deliver a typeface to a user that can represent the requested script, so I’m happy to help on the ex_cldr side. Just reply here or open an issue on GitHub.

Last thought for now. Google says “language” but then uses “Latin” and “Latin-ext”; Latin is a language, but that isn’t the sense in which it is used there. So manual mapping is going to be required. Perhaps something like this:

- Get the ISO 639 language code from a locale:

        iex> {:ok, locale} = Cldr.validate_locale("th")
        {:ok, #Cldr.LanguageTag<th [validated]>}
        iex> locale.language
        "th"

- Manually build a map of Google Font “languages” to ISO 639 codes and then use that to match with the locale and therefore know what the Google Fonts “subset” is.

- In building the map, you could use the data returned from `Cldr.Locale.language_data/0`, which tells you probably more than you want in order to help build the right mapping. For example, you could map all the languages that use the `latn` script into the Google Fonts `latin`, `latin-ext` subset.
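As a rough illustration of the manual mapping described above, here is a minimal sketch in plain Elixir. The module name `FontSubsetSketch`, the function `subsets/2`, and the contents of both maps are assumptions made up for this example (they are not part of ex_cldr or Google Fonts tooling); only the subset names themselves come from Google Fonts. The script atoms follow the `:Latn` / `:Thai` / `:Deva` form that `locale.script` returns.

```elixir
defmodule FontSubsetSketch do
  # Hypothetical, hand-maintained table: script code => Google Fonts subsets.
  # Deliberately incomplete; extend it as more scripts are needed.
  @subsets_by_script %{
    Latn: ["latin", "latin-ext"],
    Cyrl: ["cyrillic", "cyrillic-ext"],
    Grek: ["greek", "greek-ext"],
    Deva: ["devanagari"],
    Arab: ["arabic"],
    Thai: ["thai"]
  }

  # Hypothetical per-language overrides, for languages whose subset
  # cannot be derived from the script alone.
  @subsets_by_language %{
    "vi" => ["latin", "latin-ext", "vietnamese"]
  }

  # Returns {:ok, subsets} or :error when nothing is mapped yet.
  def subsets(language, script) when is_binary(language) and is_atom(script) do
    case Map.fetch(@subsets_by_language, language) do
      {:ok, subsets} -> {:ok, subsets}
      :error -> Map.fetch(@subsets_by_script, script)
    end
  end
end
```

With a validated locale this could be called as `FontSubsetSketch.subsets(locale.language, locale.script)`. An `:error` result is a natural place to fall back to system fonts.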

Yeah, manual mapping is where I assumed this was headed… Thanks for all your help, glad I’m not the only one who geeks out on this stuff! Will report back!

Ah, this seems useful for detecting what to use for user-generated text (if no language was specified by the user), though unfortunately it only has the common charsets and not the additional languages: char-subsets/charlists at master · strarsis/char-subsets · GitHub
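The same idea can be sketched without any character lists by checking code points against Unicode block ranges. This is only an illustration: `SubsetDetectSketch` and `subsets_for/1` are hypothetical names, and the table covers just the basic Greek, Cyrillic and Devanagari blocks, whereas real font subsets include extra punctuation and extension blocks.

```elixir
defmodule SubsetDetectSketch do
  # Basic Unicode blocks only (assumption: good enough for a first guess).
  @ranges [
    {"greek", 0x0370..0x03FF},
    {"cyrillic", 0x0400..0x04FF},
    {"devanagari", 0x0900..0x097F}
  ]

  # Returns the subset names whose block contains at least one
  # code point of the text, in the order of the table above.
  def subsets_for(text) when is_binary(text) do
    codepoints = String.to_charlist(text)

    for {name, range} <- @ranges,
        Enum.any?(codepoints, &(&1 in range)),
        do: name
  end
end
```

An empty list means none of the listed blocks occur, so the default (Latin) font would be enough for that text.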

Maybe you’ll find my text library useful? It has a native Elixir implementation of text detection. You also need to install the text_corpus_udhr library to have a corpus to work with for detection:

    iex> Text.detect """
    Договоренность о разблокировке украинских портов для возобновления экспорта зерна остается труднодостижимой, потому что Москва использует переговоры для реализации своих военных целей и обеспечения доминирования в Черном море, заявил заместитель министра экономики и торговый представитель Украины Тарас Качка.
    """
    {:ok, "ru"}

I love the discussion, but I’d like to also propose a more practical angle: Drop using a webfont or if that’s not an option make sure it’s cached well and the 4x in size likely won’t matter too much in the long run – possibly load it async, so text is visible without it. Unless you’re specifically building a font preview application, where most font requests will be “first requests” this should be doable.

Yeah that’d be simpler, but I haven’t seen a font that offers more than 8 scripts, and taking Noto Sans as an example it has 145 separate fonts for scripts that aren’t included in the main one.

Somebody made some per-region font packages, they each measure in megabytes: Releases · satbyy/go-noto-universal · GitHub

Ah and 14 MB for all current (non-historical) Noto fonts.

That’s indeed rather large. What about the “no webfont” option though? People still have fonts installed on their computers already and it likely supports the scripts they want to read content in.

They’re likely to have fonts installed that support their primary language(s), but we’d have to rely on their browser (if using sans-serif) or their OS (if using system-ui) using that font as the default. And it wouldn’t help a user when seeing content posted by someone else in a language not supported by their font.

Oh maybe the first part of my statement is incorrect if we rely on HTML tags? Declaring language in HTML

So yeah, while it seemed like a fun exercise, and I may yet come back to it sometime, I’ll probably just map the locales corresponding to latin and those for cyrillic, devanagari, greek, latin-ext and vietnamese, loading a small webfont in the first case and a larger one in the second case, and for all others just leave it to the OS and browser (at least until users report problems…). Will share what I come up with for this simpler scope in any case.
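A minimal sketch of that simpler scope could look like the following. Everything here is an assumption for illustration: the module name, the CSS file names, and the two language lists, which are far from complete.

```elixir
defmodule FontStylesheetSketch do
  # Hypothetical, incomplete language lists keyed by ISO 639 code.
  @latin ~w(en fr es de it pt nl)
  @extended ~w(ru uk bg el hi vi pl cs)

  # Small webfont for languages covered by the plain latin subset.
  def stylesheet(language) when language in @latin, do: {:ok, "font-latin.css"}

  # Larger webfont covering cyrillic, devanagari, greek, latin-ext, vietnamese.
  def stylesheet(language) when language in @extended, do: {:ok, "font-extended.css"}

  # Everything else: no webfont, leave it to the OS and browser.
  def stylesheet(_language), do: :system_fonts
end
```

The template would then include the returned stylesheet only on an `{:ok, file}` result, for example from `FontStylesheetSketch.stylesheet(locale.language)`.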

It is possible to load (subsets of) a webfont dynamically in CSS by Unicode range, assuming that ranges for languages don’t change frequently:

Quote from the page:

For example, a site with many localizations could provide separate font resources for English, Greek and Japanese. For users viewing the English version of a page, the font resources for Greek and Japanese fonts wouldn’t need to be downloaded, saving bandwidth.
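To make this concrete, here is a small Elixir sketch that generates `@font-face` rules with a `unicode-range` descriptor per subset, so the browser only downloads a file when the page contains characters in that range. The module name, the `/fonts/...` paths and the file naming scheme are hypothetical, and the ranges are just the basic Unicode blocks rather than the exact ranges any particular font service publishes.

```elixir
defmodule FontFaceSketch do
  # Subset name => basic Unicode block (assumed ranges, not exhaustive).
  @subsets [
    {"greek", "U+0370-03FF"},
    {"cyrillic", "U+0400-04FF"}
  ]

  # Builds one @font-face rule per subset for the given family.
  # `file_prefix` is a hypothetical naming convention for the font files.
  def css(family, file_prefix) when is_binary(family) and is_binary(file_prefix) do
    Enum.map_join(@subsets, "\n", fn {subset, range} ->
      """
      @font-face {
        font-family: "#{family}";
        src: url("/fonts/#{file_prefix}-#{subset}.woff2") format("woff2");
        unicode-range: #{range};
      }
      """
    end)
  end
end
```

Calling `FontFaceSketch.css("Noto Sans", "noto-sans")` returns a CSS string that could be written to a static stylesheet at build time.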