I am using Finch in my application. Here is what the application does:

It sends a request to a camera to fetch a JPEG (using Basic or Digest auth), receives the binary image, and then sends it on to another cloud service.

So with 500 cameras, the application makes 500 * 2 requests per second.

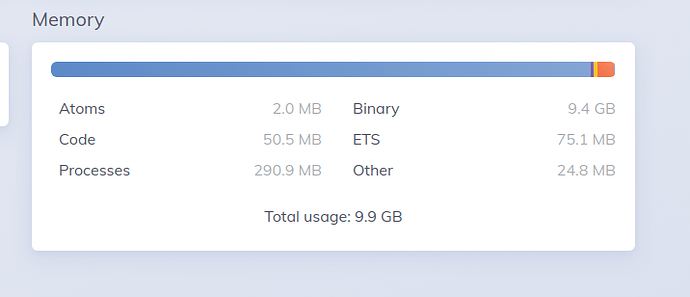

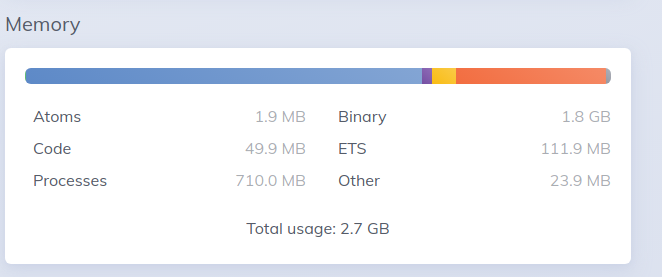

I was using HTTPoison before, and the binary memory on the Phoenix LiveDashboard looked like this:

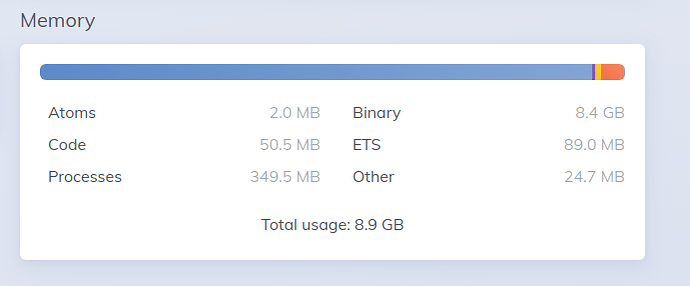

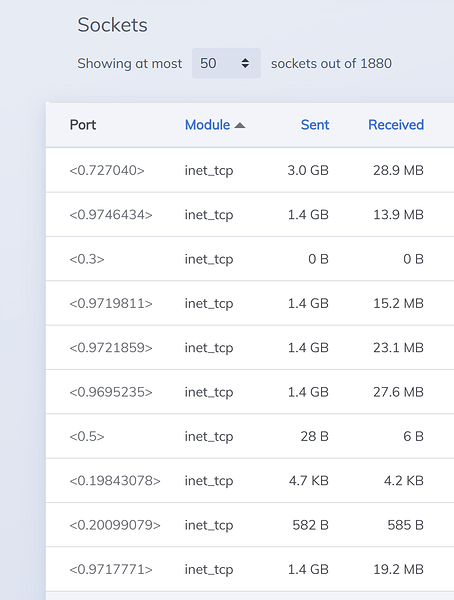

But binary memory increased massively when I deployed with Finch.

These were my pool settings with hackney:

```elixir
:hackney_pool.child_spec(:snapshot_pool, timeout: 50_000, max_connections: 10_000),
:hackney_pool.child_spec(:seaweedfs_upload_pool, timeout: 50_000, max_connections: 10_000),
:hackney_pool.child_spec(:seaweedfs_download_pool, timeout: 50_000, max_connections: 10_000)
```

And these are my settings with Finch now:

```elixir
{Finch,
 name: EvercamFinch,
 pools: %{
   :default => [size: 500, max_idle_time: 25_000, count: 5]
 }},
```
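For reference, a rough sketch of how these numbers multiply out. It assumes, as Finch's documentation describes, that the `:default` entry applies to every scheme/host/port without its own entry, that Finch starts `count` pools per such origin on demand, and that each pool may hold up to `size` connections. Treating each of the 500 cameras as a distinct origin is an assumption, and `PoolMath` is a hypothetical helper, not part of Finch.

```elixir
defmodule PoolMath do
  # Per-origin settings copied from the :default entry of the Finch config above.
  @size 500
  @count 5

  # Pool processes started if every origin gets its own `count` pools.
  def pool_processes(origins), do: origins * @count

  # Upper bound on connections those pools could open (`size` per pool).
  def max_connections(origins), do: origins * @count * @size
end

# Assuming 500 cameras, each a separate scheme/host/port:
IO.puts(PoolMath.pool_processes(500))   # prints 2500
IO.puts(PoolMath.max_connections(500))  # prints 1250000
```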

What is the reason for such a massive increase in binary memory?
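A minimal sketch of how the binary memory can be inspected from a remote console on the running node, assuming only the Erlang/Elixir standard library. `:erlang.memory(:binary)` reports the total bytes allocated for binaries, and `Process.info(pid, :binary)` lists the off-heap binaries each process references, which can help show which processes (for example pool processes) are keeping large JPEG binaries alive. `BinaryMemory` is a hypothetical helper name.

```elixir
defmodule BinaryMemory do
  # Total bytes currently allocated for binaries on this node.
  def total, do: :erlang.memory(:binary)

  # Top `n` processes by the total size of the off-heap binaries they reference.
  # A binary shared by several processes is counted once per referencing process.
  def top_holders(n \\ 10) do
    Process.list()
    |> Enum.map(fn pid -> {pid, referenced_bytes(pid)} end)
    |> Enum.sort_by(fn {_pid, bytes} -> bytes end, :desc)
    |> Enum.take(n)
  end

  defp referenced_bytes(pid) do
    case Process.info(pid, :binary) do
      # Each entry is {binary_id, size_in_bytes, reference_count}.
      {:binary, bins} -> Enum.reduce(bins, 0, fn {_id, size, _refc}, acc -> acc + size end)
      # The process exited between Process.list/0 and Process.info/2.
      nil -> 0
    end
  end
end

IO.inspect(BinaryMemory.total(), label: "binary bytes")
IO.inspect(BinaryMemory.top_holders(5), label: "top binary holders")
```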

UPDATE:

This is the module I use to make requests:

```elixir
defmodule EvercamFinch do
  # Builds and runs a request through the Finch instance named EvercamFinch
  # (the same name used in the Finch child spec above).
  def request(method, url, headers \\ [], body \\ nil, opts \\ []) do
    transformed_headers = transform_headers(headers)

    Finch.build(method, url, transformed_headers, body)
    |> Finch.request(__MODULE__, opts)
    |> case do
      {:ok, %Finch.Response{} = response} ->
        {:ok, response}

      {:error, reason} ->
        {:error, reason}
    end
  end

  # Finch expects string header names, so atom keys are converted to strings.
  # Only the first key is checked to decide whether conversion is needed.
  defp transform_headers([]), do: []

  defp transform_headers([{key, _value} | _rest] = headers) do
    case is_atom(key) do
      true -> transform_headers(headers, :atom)
      false -> headers
    end
  end

  defp transform_headers(headers, :atom) do
    headers
    |> Enum.map(fn {k, v} ->
      {Atom.to_string(k), v}
    end)
  end
end
```

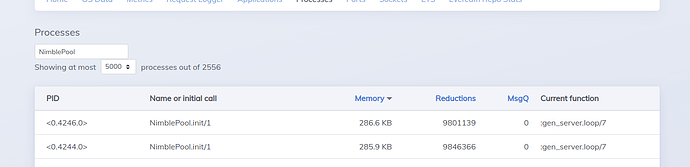

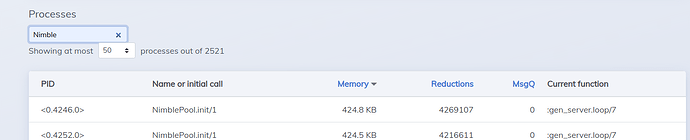

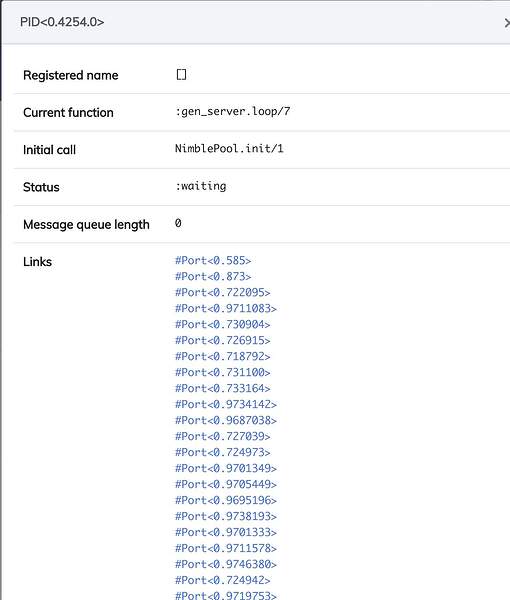

This is what my NimblePool processes look like.

Even with a small size and count, why has the number of NimblePool processes increased to 2500+?
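A minimal sketch for counting those processes directly, assuming only the standard library. It relies on the fact that processes started through `GenServer` (and therefore `proc_lib`) record `{Module, :init, 1}` under the `:"$initial_call"` key of their process dictionary; NimblePool is a GenServer, so its pool processes should report `NimblePool`. `PoolCount` is a hypothetical helper, and the count is only meaningful when run in a remote console attached to the running application.

```elixir
defmodule PoolCount do
  # Counts live processes whose recorded initial call belongs to `module`.
  def count_by_initial_module(module) do
    Enum.count(Process.list(), fn pid -> initial_module(pid) == module end)
  end

  defp initial_module(pid) do
    case Process.info(pid, :dictionary) do
      {:dictionary, dict} ->
        # proc_lib stores the initial call as {Module, Function, Arity}.
        case List.keyfind(dict, :"$initial_call", 0) do
          {_key, {mod, _fun, _arity}} -> mod
          _ -> nil
        end

      # The process exited before it could be inspected.
      nil ->
        nil
    end
  end
end

IO.inspect(PoolCount.count_by_initial_module(NimblePool), label: "NimblePool processes")
```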