I’d be happy to get a code critique.

Coding with Crawly was a very smooth experience. No unpleasant surprises; everything worked as expected. The Domo framework and the new dbg debugger hugely contributed to the experience.

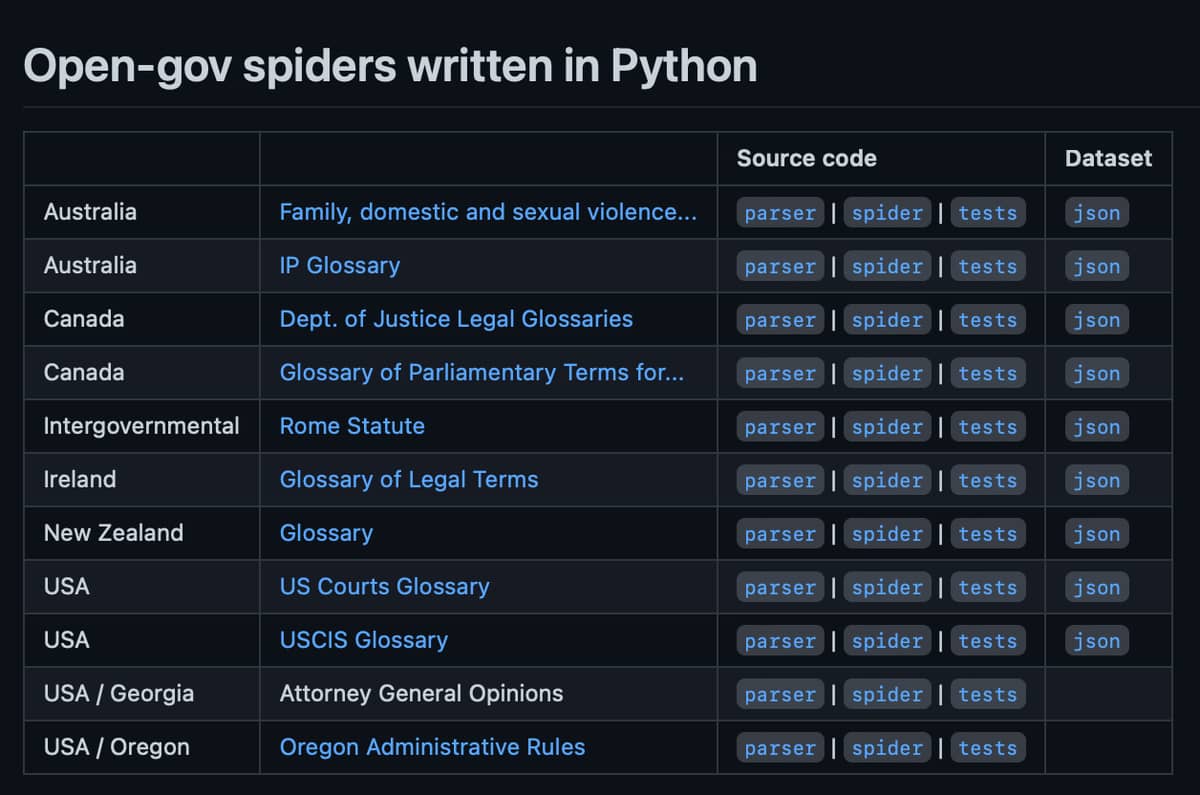

I coded with TDD (tests are here), and it was even a little easier than with Python/Scrapy, which Crawly seems to be modelled on.

The spider creates a 60 MB JSON-lines file, which I currently check into our Datasets repo by hand. Its source is one API call plus approximately 500 web pages. The output “simply” captures the source structure of the Statutes along with their content, leaving semantic additions to later pipeline stages:

{"number":"1","name":"Courts, Oregon Rules of Civil Procedure","kind":"volume","chapter_range":["1","55"]}

{"number":"2","name":"Business Organizations, Commercial Code","kind":"volume","chapter_range":["56","88"]}

{"number":"3","name":"Landlord-Tenant, Domestic Relations, Probate","kind":"volume","chapter_range":["90","130"]}

etc.
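
For anyone who wants to poke at the output, here is a minimal sketch of how a later stage might read it, written in Python rather than Elixir. The helper name `read_records` and the inline sample are my own assumptions for illustration; only the record fields (`number`, `name`, `kind`, `chapter_range`) come from the lines above.

```python
import io
import json
from collections import Counter

# Three records copied from the sample output above; the real file is much larger.
SAMPLE = """\
{"number":"1","name":"Courts, Oregon Rules of Civil Procedure","kind":"volume","chapter_range":["1","55"]}

{"number":"2","name":"Business Organizations, Commercial Code","kind":"volume","chapter_range":["56","88"]}

{"number":"3","name":"Landlord-Tenant, Domestic Relations, Probate","kind":"volume","chapter_range":["90","130"]}
"""


def read_records(stream):
    """Yield one dict per JSON-lines row, skipping blank lines (hypothetical helper)."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


records = list(read_records(io.StringIO(SAMPLE)))

# Count records per kind; the sample only contains volumes.
kinds = Counter(record["kind"] for record in records)

# Map each volume number to its (first, last) chapter, kept as strings as in the output.
volumes = {
    record["number"]: tuple(record["chapter_range"])
    for record in records
    if record["kind"] == "volume"
}

print(kinds)
print(volumes)
```

To run this against the real file, pass an open file handle instead of the `io.StringIO` sample and iterate over `read_records` directly, so the whole 60 MB never has to sit in memory at once.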