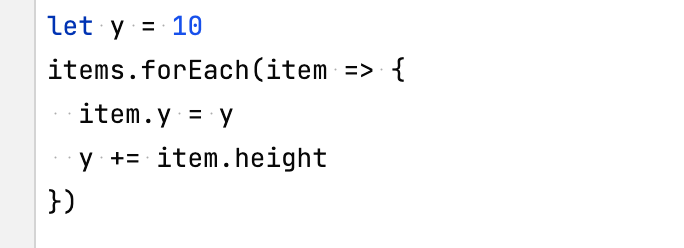

I came across such a piece of code and tried to implement it functionally. I found various ways to do it and benchmarked them using Benchee and I got some interesting results.

Codes and results can be seen in this gist.

First of all, I realized the huge negative impact of using ++ to add items to the list.

I expected using Enum.reduce/2 and Enum.map_reduce/3 to perform best, followed by tail_recursion_with_reverse/2 and then recursion/2. But the results were almost the opposite.

Now I have some questions that I will ask. Thanks in advance for all the feedback and help.

- In order to benchmark such questions, what other items should I consider in addition to the average execution time?

- That being said, is there any situation where ++ is the right choice for working with lists?

- Is there a better solution to this problem?

- Is it always better to write recursive functions in general than to use

Enumfunctions? - Does Beam perform a special optimization in the

recursion/2function that is written in the code, which performs better thantail_recursion_with_reverse/2?

def reduce(items, y) do

{items, _} = Enum.reduce(items, {[], y},

fn %{height: h} = item, {converted_items, y} -> {converted_items ++ [%{item | y: y}], y + h} end)

items

end

def reduce_with_reverse(items, y) do

{items, _} = Enum.reduce(items, {[], y},

fn %{height: h} = item, {converted_items, y} -> {[%{item | y: y} | converted_items], y + h} end)

Enum.reverse(items)

end

def map_reduce(items, y) do

{items, _} = Enum.map_reduce(items, y, fn item, acc -> {Map.put(item, :y, acc), acc + item.height} end)

items

end

def recursion([], _), do: []

def recursion([%{height: h} = item | t], y), do: [%{item | y: y} | recursion(t, h + y)]

def tail_recursion(items, y, converted_items \\ [])

def tail_recursion([], _y, converted_items), do: converted_items

def tail_recursion([h | t], y, converted_items), do:

tail_recursion(t, y + h.height, converted_items ++ [%{y: y, height: h.height}])

def tail_recursion_with_reverse(items, y, converted_items \\ [])

def tail_recursion_with_reverse([], _y, converted_items), do: Enum.reverse(converted_items)

def tail_recursion_with_reverse([h | t], y, converted_items), do:

tail_recursion_with_reverse(t, y + h.height, [%{y: y, height: h.height} | converted_items])