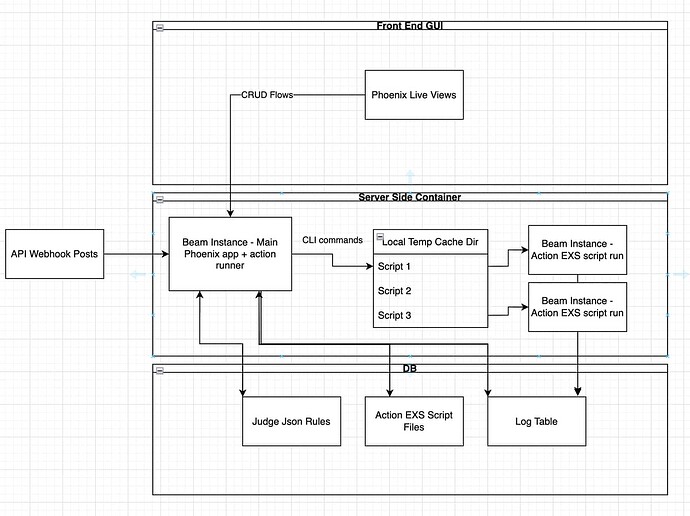

Problem Statement:

I am trying to build an ‘action runner’ for judge json rules. In a nutshell, say a JSON-defined rule matches and carries an action string:

    {
      ...rule
      "action": "collect_signature"
    }

I’m trying to work out the best architecture for running that ‘collect_signature’ code. The action string could be a module name or a script file. The intention is for rules to trigger actions dynamically, so that actions are easy to maintain separately from the runner.
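To make the "script file" variant concrete, here is a minimal sketch of how a matched rule could be resolved to a script. The module name `ActionRunner`, the `actions` directory, and the one-file-per-action layout are all assumptions for illustration, not part of any existing library.

```elixir
defmodule ActionRunner do
  @moduledoc "Hypothetical runner: maps the action string of a matched rule to a script file."

  # Assumed layout: one script per action, e.g. actions/collect_signature.exs
  @actions_dir "actions"

  @doc """
  Returns `{:ok, path}` when a script exists for the rule's action,
  `{:error, :unknown_action}` when it does not, and
  `{:error, :invalid_action_name}` when the name is not a plain identifier.
  """
  def resolve(%{"action" => name}, dir \\ @actions_dir) when is_binary(name) do
    # Only accept plain identifiers so a rule cannot point outside the actions directory.
    if Regex.match?(~r/\A[a-z][a-z0-9_]*\z/, name) do
      path = Path.join(dir, name <> ".exs")
      if File.regular?(path), do: {:ok, path}, else: {:error, :unknown_action}
    else
      {:error, :invalid_action_name}
    end
  end
end
```

Because the lookup happens on the file system at call time, dropping a new script into the directory would make a new action available without touching the runner.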

Desired Requirements:

- Each action's code has its own dependencies (Hex packages).

- Adding a new action does not require a reload, recompile, or redeploy of the ‘action runner’, i.e. actions are loaded and run dynamically somehow.

Idea 1:

Standalone exs scripts: actions are defined in standalone .exs files, each with its own Mix.install/2 call. I already tried this, using Code.require_file/1 to run an .exs file dynamically, but ran into dependency errors: the runtime dependencies of the ‘action runner’ conflicted with the dependencies loaded by the script. I need a way around that. Still, this seems the most promising option.
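One possible way around the conflict, offered as a sketch rather than a tested solution, is to run each script in its own OS process, so that its Mix.install/2 dependencies never share a VM with the runner. The module name `ActionRunner.ScriptExec` and the convention of passing the payload as a single command-line argument are assumptions; the code also assumes the `elixir` executable is on the PATH.

```elixir
defmodule ActionRunner.ScriptExec do
  @moduledoc "Hypothetical helper: runs an action script in a separate `elixir` OS process."

  @doc """
  Runs `script_path` with `payload` (a string, e.g. encoded JSON) as its only argument.
  The script can read it via `System.argv/0`.
  """
  def run(script_path, payload) when is_binary(script_path) and is_binary(payload) do
    # System.cmd/3 returns {collected_output, exit_status}.
    case System.cmd("elixir", [script_path, payload], stderr_to_stdout: true) do
      {output, 0} -> {:ok, output}
      {output, status} -> {:error, {status, output}}
    end
  end
end
```

The trade-off is that every run pays the cost of starting a fresh VM, and results have to travel back as text on standard output rather than as Elixir terms.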

Idea 2:

An umbrella project where each action is a separate application. I don’t have experience with umbrella projects, but this might be a feasible path. It seems like overkill, however, and I think the action runner would have to be reloaded every time a new action is created, which is a big con.

Idea 3:

Similar to the umbrella approach: load actions as private local packages via path dependencies. The ‘action runner’ would then build its dependency list dynamically in its mix.exs. Something like:

    defp deps do
      # One path dependency per directory under /actions.
      for name <- File.ls!("/actions"), File.dir?(Path.join("/actions", name)) do
        {String.to_atom(name), ">= 0.0.0", path: Path.join("/actions", name)}
      end
    end

Then maybe add a Mix task ‘new action’ that generates the boilerplate for a new action in the correct folder. The main con is the same as with Idea 2: the action runner would have to be recompiled for every new action.
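A rough sketch of what such a ‘new action’ task could look like is below. The task name `action.new`, the relative `actions` folder, and the generated file contents are all assumptions; only `Mix.Task`, `Mix.Generator.create_directory/2`, `Mix.Generator.create_file/3`, `Macro.camelize/1`, and `Mix.raise/1` are existing APIs.

```elixir
defmodule Mix.Tasks.Action.New do
  use Mix.Task

  @shortdoc "Generates boilerplate for a new action (hypothetical task)"

  @impl Mix.Task
  def run([name]) do
    # Assumed layout: each action is a small Mix project under actions/<name>.
    dir = Path.join("actions", name)
    module = Macro.camelize(name)

    Mix.Generator.create_directory(Path.join(dir, "lib"))

    Mix.Generator.create_file(Path.join(dir, "mix.exs"), """
    defmodule #{module}.MixProject do
      use Mix.Project

      def project do
        [app: :#{name}, version: "0.1.0", deps: []]
      end
    end
    """)

    Mix.Generator.create_file(Path.join([dir, "lib", name <> ".ex"]), """
    defmodule #{module} do
      # Placeholder entry point; the run/1 contract is an assumption.
      def run(_rule), do: :ok
    end
    """)
  end

  def run(_args), do: Mix.raise("usage: mix action.new ACTION_NAME")
end
```

With this in the runner's project, `mix action.new collect_signature` would create `actions/collect_signature/` containing a `mix.exs` and a `lib/collect_signature.ex` stub.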