I'm currently working on a hobby project: an Elixir integration for Betfair (an odds exchange; trading on it works much like any other exchange, where you place orders via REST and receive pushed data for market and order changes). Integrating with Betfair involves two techniques: a REST interface, and a TCP connection for streaming market and order data. Below I describe the general requirements for the integration.

The first step is to call a REST endpoint with a username and password to log in. This starts a session on Betfair and returns a session token that must be included as a header in all subsequent REST calls.

The session token is valid for 4 hours, so a keep-alive REST call has to be made before it expires to extend the session for another 4 hours.

Next, a REST call returns a number of market ids. The list of market ids and the session token are then sent over a TCP connection to start a subscription and receive market updates. A second subscription, for order updates, can be made either on the same TCP connection or on a separate one.
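To make the data involved concrete, here is a minimal sketch that models the stream requests as plain Elixir maps built from the session token and the market ids. The module name BetfairStream.Messages is hypothetical, and the field names (op, appKey, session, marketFilter, marketIds) as well as the need for an application key are assumptions loosely based on Betfair's Exchange Stream API that should be checked against its documentation; encoding the maps for the wire is left out.

```elixir
defmodule BetfairStream.Messages do
  @moduledoc """
  Hypothetical helpers that build stream requests as plain maps.
  Field names are assumptions modelled on Betfair's Exchange Stream API.
  """

  # Sent first on a new TCP connection to attach the session token.
  def authentication(app_key, session_token, id \\ 1) do
    %{"op" => "authentication", "id" => id, "appKey" => app_key, "session" => session_token}
  end

  # Subscribes to updates for the market ids returned by the REST call.
  def market_subscription(market_ids, id \\ 2) when is_list(market_ids) do
    %{"op" => "marketSubscription", "id" => id, "marketFilter" => %{"marketIds" => market_ids}}
  end

  # Order updates, sent on the same connection or on a separate one.
  def order_subscription(id \\ 3) do
    %{"op" => "orderSubscription", "id" => id}
  end

  # Everything needed to (re)subscribe, e.g. after a missed heartbeat or a re-login.
  def subscribe_all(app_key, session_token, market_ids) do
    [
      authentication(app_key, session_token),
      market_subscription(market_ids),
      order_subscription()
    ]
  end
end
```

Keeping the token and market ids as plain data means re-subscribing after a re-login is just another call to subscribe_all/3 with the new token.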

The TCP connection receives a keep-alive (heartbeat) signal from the server every 5 seconds; if no signal arrives within 5 seconds, one has to re-subscribe using the same market ids and session token.
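The 5-second rule can be sketched as a small watchdog GenServer that re-arms a timer on every heartbeat and runs a callback when the timer fires. HeartbeatWatchdog, heartbeat/1 and the :timeout_ms and :on_timeout options are hypothetical names for this illustration; the callback stands in for whatever code re-sends the subscription.

```elixir
defmodule HeartbeatWatchdog do
  @moduledoc """
  Hypothetical watchdog: expects a heartbeat at least every `timeout_ms`
  milliseconds and calls `on_timeout` (e.g. a re-subscribe function) when
  one does not arrive in time.
  """
  use GenServer

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  # Called by the stream reader each time a heartbeat arrives from the server.
  def heartbeat(pid), do: GenServer.cast(pid, :heartbeat)

  @impl true
  def init(opts) do
    state = %{
      timeout_ms: Keyword.get(opts, :timeout_ms, 5_000),
      on_timeout: Keyword.fetch!(opts, :on_timeout),
      timer: nil,
      ref: nil
    }

    {:ok, arm(state)}
  end

  @impl true
  def handle_cast(:heartbeat, state), do: {:noreply, arm(state)}

  @impl true
  def handle_info({:heartbeat_timeout, ref}, %{ref: ref} = state) do
    # No heartbeat within the window: re-subscribe, then start waiting again.
    state.on_timeout.()
    {:noreply, arm(state)}
  end

  # A timeout from a timer that has since been replaced: ignore it.
  def handle_info({:heartbeat_timeout, _stale_ref}, state), do: {:noreply, state}

  # Cancel any pending timer and start a fresh one tagged with a new ref.
  defp arm(state) do
    if state.timer, do: Process.cancel_timer(state.timer)
    ref = make_ref()
    timer = Process.send_after(self(), {:heartbeat_timeout, ref}, state.timeout_ms)
    %{state | timer: timer, ref: ref}
  end
end
```

Each timer message carries a fresh reference, so a timeout that was already in the mailbox when a heartbeat reset the timer is ignored instead of triggering a spurious re-subscribe.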

If either a REST call or the TCP connection returns an invalid-session reply, one has to log in again to get a new session token and then re-subscribe with the same market ids but with the new token.
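The recovery rules described so far can be written down as a small pure function, which keeps the decision separate from the processes doing the I/O and makes it easy to test. ReconnectPolicy, the reply shapes ({:error, :invalid_session}, {:error, :heartbeat_timeout}) and the action tuples are all hypothetical; they are not something Betfair or any library returns.

```elixir
defmodule ReconnectPolicy do
  @moduledoc """
  Hypothetical decision function: given the result of a REST call or a
  stream event, decide what the integration should do next.
  """

  # Invalid session: log in again, then re-subscribe with the same market ids.
  def next_step({:error, :invalid_session}, market_ids), do: {:relogin_then_subscribe, market_ids}

  # Missed heartbeat: the session is still fine, so only re-subscribe.
  def next_step({:error, :heartbeat_timeout}, market_ids), do: {:resubscribe, market_ids}

  # Normal replies need no action.
  def next_step({:ok, _reply}, _market_ids), do: :continue

  # Anything unexpected: stop and let a supervisor decide.
  def next_step({:error, reason}, _market_ids), do: {:stop, reason}
end
```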

Once I have logged in and started a subscription, all handling of the connection should happen automatically, without any human intervention.

I have managed to get everything working by using two instances of the Connection library: one for the REST interface, holding the session token and running the keep-alive, and a second one for both the order and market streams. However, the implementation is just a big ball of mud, and I would really love input on how more experienced Elixir developers would structure the code.

The kinds of questions I would like input on are:

- How many processes would you use?
- What would your supervision tree look like?
- What would be the responsibility of each process?


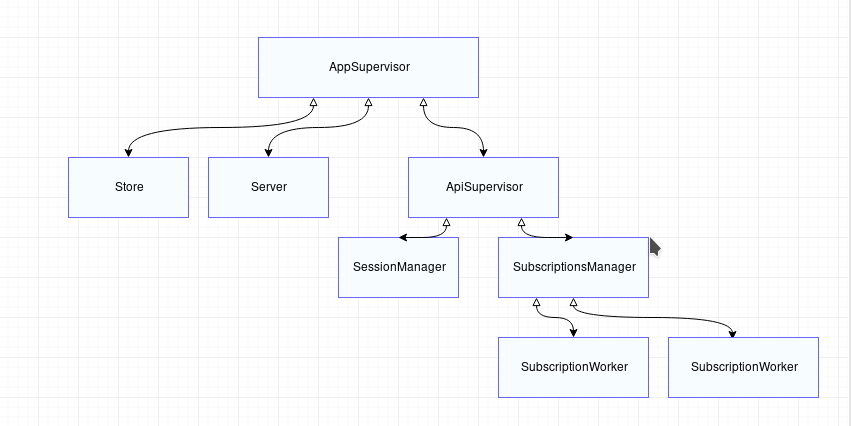

I might try something like this:


Nice, thanks! Could you say something about how you think about the responsibility of each process?

For example, what happens if I need to log in again and then have the SubscriptionManager re-subscribe with the new session id? If I put the SubscriptionManager under the SessionManager, could I just restart the whole tree and keep only an Agent running outside the tree to store the state I need across restarts, for example the list of market ids?

Yes, sorry: because this is my first post, I got stuck in some sort of moderation queue. I had originally intended to post the image and then edit the post to fill in the details.

Please note also that I am not an expert in OTP, although I have built some things with it.

To answer your question, you should design the supervision tree so that parts of the system that are invalid without each other crash together. So, if the SessionManager sends the keep-alive request at the 4-hour mark and something goes wrong, it should die and take the SubscriptionsManager down with it. The ApiSupervisor would then respawn a new SessionManager and SubscriptionsManager.
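A minimal sketch of that ApiSupervisor, assuming the SessionManager and SubscriptionsManager are its only two children. To keep it self-contained, an Agent registered as :session_manager stands in for the real SessionManager and the SubscriptionsManager is a bare DynamicSupervisor; all names are illustrative.

```elixir
defmodule Betfair.ApiSupervisor do
  @moduledoc """
  Sketch of the ApiSupervisor. An Agent stands in for the SessionManager and
  the SubscriptionsManager is a plain DynamicSupervisor; names are illustrative.
  """
  use Supervisor

  def start_link(opts), do: Supervisor.start_link(__MODULE__, opts, name: __MODULE__)

  @impl true
  def init(_opts) do
    children = [
      # Placeholder for the SessionManager (would log in and hold the token).
      %{
        id: :session_manager,
        start: {Agent, :start_link, [fn -> %{token: nil} end, [name: :session_manager]]}
      },
      # Workers are added at runtime once a valid session exists.
      {DynamicSupervisor, name: :subscriptions_manager, strategy: :one_for_one}
    ]

    # :one_for_all restarts both children when either one dies, so
    # subscriptions never outlive the session they were created with.
    Supervisor.init(children, strategy: :one_for_all)
  end
end
```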

When the SessionManager is (re)started, it should make the login POST request and get back the auth token (keeping it in its own GenServer state). As part of init, you can schedule the re-validation of the session with Process.send_after, giving it a delay of just under 4 hours. Other processes that need the session info, such as the SubscriptionWorkers, can request it by sending the SessionManager a message it knows how to respond to (e.g. something like def handle_call(:get_session_info, _from, state)).
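A sketch of such a SessionManager, under a few assumptions: the login and keep-alive REST calls are passed in as functions (:login, :keep_alive and :keep_alive_ms are hypothetical options, so no HTTP client is assumed), and the 5-minute safety margin before the 4-hour expiry is an arbitrary choice. A failed keep-alive stops the process so its supervisor can restart it, which means logging in again.

```elixir
defmodule Betfair.SessionManager do
  @moduledoc """
  Hypothetical SessionManager: logs in during init, keeps the token in its
  state, re-validates it shortly before the 4-hour expiry and hands the
  token to anyone who asks.
  """
  use GenServer

  # Just under the 4-hour session lifetime (the 5-minute margin is arbitrary).
  @keep_alive_ms :timer.hours(4) - :timer.minutes(5)

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  def get_session_info(pid), do: GenServer.call(pid, :get_session_info)

  @impl true
  def init(opts) do
    # Injected functions standing in for the login and keep-alive REST calls.
    login = Keyword.fetch!(opts, :login)
    keep_alive = Keyword.fetch!(opts, :keep_alive)
    interval = Keyword.get(opts, :keep_alive_ms, @keep_alive_ms)

    case login.() do
      {:ok, token} ->
        Process.send_after(self(), :keep_alive, interval)
        {:ok, %{token: token, keep_alive: keep_alive, interval: interval}}

      {:error, reason} ->
        # Login failed: stop and leave the retry to the supervisor.
        {:stop, reason}
    end
  end

  @impl true
  def handle_call(:get_session_info, _from, state), do: {:reply, {:ok, state.token}, state}

  @impl true
  def handle_info(:keep_alive, state) do
    case state.keep_alive.(state.token) do
      :ok ->
        Process.send_after(self(), :keep_alive, state.interval)
        {:noreply, state}

      {:error, reason} ->
        # Let it crash: the restarted SessionManager logs in again in init.
        {:stop, {:keep_alive_failed, reason}, state}
    end
  end
end
```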

Once the SessionManager has acquired valid session data, it should tell the SubscriptionsManager that it is OK to spawn the SubscriptionWorkers. The SubscriptionsManager should therefore be a DynamicSupervisor.
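A sketch of how the SessionManager could ask the SubscriptionsManager (a DynamicSupervisor) to start the workers once it holds a token. Betfair.SubscriptionWorker and Betfair.Subscriptions.start_workers/3 are hypothetical; the worker only records its arguments where a real one would open the TCP stream and subscribe.

```elixir
defmodule Betfair.SubscriptionWorker do
  @moduledoc "Hypothetical worker; a real one would open the TCP stream and subscribe."
  use GenServer

  def start_link({kind, token, market_ids}) do
    GenServer.start_link(__MODULE__, {kind, token, market_ids})
  end

  @impl true
  def init({kind, token, market_ids}) do
    # Placeholder for connecting and sending the subscription request.
    {:ok, %{kind: kind, token: token, market_ids: market_ids}}
  end

  @impl true
  def handle_call(:state, _from, state), do: {:reply, state, state}
end

defmodule Betfair.Subscriptions do
  @moduledoc "Hypothetical helper the SessionManager calls once it holds a valid token."

  # Starts one market-stream and one order-stream worker under the
  # SubscriptionsManager (a DynamicSupervisor) and returns their pids.
  def start_workers(subscriptions_manager, token, market_ids) do
    for kind <- [:market, :order] do
      spec = {Betfair.SubscriptionWorker, {kind, token, market_ids}}
      {:ok, pid} = DynamicSupervisor.start_child(subscriptions_manager, spec)
      pid
    end
  end
end
```

Children of a supervisor start in list order, so the DynamicSupervisor must already be running when the SessionManager calls start_workers/3; listing the SubscriptionsManager before the SessionManager in the ApiSupervisor's children is one way to ensure that.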

I added a Store process because you may want your SubscriptionWorkers to keep their data in a different supervision tree. The Server process handles requests from the outside world and responds with data from the Store. Actually, you should place the Server under a supervisor so that when it crashes it doesn't wipe out the data in the Store.
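One way to arrange the Store and Server, sketched with hypothetical module names: the Store is an Agent that lives on its own, and the Server sits under its own supervisor inside a :one_for_one AppSupervisor. The ApiSupervisor would be a further child of AppSupervisor and is omitted here.

```elixir
defmodule Betfair.Store do
  @moduledoc "Hypothetical Store: an Agent holding the latest data per market id."
  use Agent

  def start_link(_opts), do: Agent.start_link(fn -> %{} end, name: __MODULE__)
  def put(market_id, data), do: Agent.update(__MODULE__, &Map.put(&1, market_id, data))
  def get(market_id), do: Agent.get(__MODULE__, &Map.get(&1, market_id))
end

defmodule Betfair.Server do
  @moduledoc "Hypothetical Server: answers outside requests with data read from the Store."
  use GenServer

  def start_link(_opts), do: GenServer.start_link(__MODULE__, :ok, name: __MODULE__)
  def lookup(market_id), do: GenServer.call(__MODULE__, {:lookup, market_id})

  @impl true
  def init(:ok), do: {:ok, nil}

  @impl true
  def handle_call({:lookup, market_id}, _from, state) do
    {:reply, Betfair.Store.get(market_id), state}
  end
end

defmodule Betfair.AppSupervisor do
  use Supervisor

  def start_link(opts), do: Supervisor.start_link(__MODULE__, opts, name: __MODULE__)

  @impl true
  def init(_opts) do
    children = [
      Betfair.Store,
      # The Server gets its own supervisor; the ApiSupervisor would be another sibling here.
      %{
        id: :server_supervisor,
        type: :supervisor,
        start: {Supervisor, :start_link, [[Betfair.Server], [strategy: :one_for_one]]}
      }
    ]

    # The strategy must be given explicitly; :one_for_one restarts only the child that died.
    Supervisor.init(children, strategy: :one_for_one)
  end
end
```

Killing the Server restarts only the Server; the Store keeps its pid and its data.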

If either of the SubscriptionWorkers messes up its TCP connection, it should terminate with a specific exit reason that causes its supervisor to crash (because the session will have become invalid).
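By default a supervisor restarts a crashed child rather than crashing itself. One way to get the behaviour described above is to give the SubscriptionsManager a restart intensity of zero, so the first abnormal worker exit makes the DynamicSupervisor terminate. Betfair.StreamWorker, report_invalid_session/1 and Betfair.StrictSubscriptions are hypothetical names; :max_restarts is a real DynamicSupervisor option.

```elixir
defmodule Betfair.StreamWorker do
  @moduledoc "Hypothetical SubscriptionWorker that gives up on an invalid session."
  use GenServer

  def start_link(market_ids), do: GenServer.start_link(__MODULE__, market_ids)

  # Hypothetical hook: the TCP reader would call this on an invalid-session reply.
  def report_invalid_session(pid), do: GenServer.cast(pid, :invalid_session)

  @impl true
  def init(market_ids), do: {:ok, market_ids}

  @impl true
  def handle_cast(:invalid_session, market_ids) do
    # Exit with a distinctive, abnormal reason.
    {:stop, :invalid_session, market_ids}
  end
end

defmodule Betfair.StrictSubscriptions do
  @moduledoc "SubscriptionsManager variant that refuses to restart its workers."

  # With max_restarts: 0, the first abnormal child exit exceeds the restart
  # intensity, so the DynamicSupervisor shuts itself down instead.
  def start_link(opts \\ []) do
    DynamicSupervisor.start_link(Keyword.merge([strategy: :one_for_one, max_restarts: 0], opts))
  end
end
```

Any abnormal exit triggers this, not just :invalid_session; the distinctive reason mainly makes the logs readable. Under a :one_for_all ApiSupervisor, the SubscriptionsManager terminating in turn restarts the SessionManager, which logs in again.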


I like this. However, I have some clarifying questions:

If the keep-alive fails, should I really crash? This is a known failure mode: I know it can fail, so shouldn't I handle it rather than crash? Isn't crashing meant for things I can't anticipate?

To get the behavior you describe, I guess the ApiSupervisor uses a :one_for_all strategy?

If the Server crashes, it would not wipe out the Store process anyway, as long as AppSupervisor uses the :one_for_one strategy?

Why use a DynamicSupervisor at all if I want the workers to crash the whole tree? Why not just start them with their start_link from the SubscriptionManager?
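For comparison, the alternative raised in the question can be sketched like this: a manager GenServer starts its workers with start_link, so they are linked to it, and because it does not trap exits, an abnormal worker exit takes the manager down too. Betfair.LinkedManager is hypothetical, and Agents stand in for the stream workers.

```elixir
defmodule Betfair.LinkedManager do
  @moduledoc "Hypothetical SubscriptionManager that links its workers directly."
  use GenServer

  def start_link(market_ids), do: GenServer.start_link(__MODULE__, market_ids)
  def workers(pid), do: GenServer.call(pid, :workers)

  @impl true
  def init(market_ids) do
    # Agent.start_link links each worker to this manager; since the manager
    # does not trap exits, an abnormal worker exit kills the manager as well.
    workers =
      for kind <- [:market, :order] do
        {:ok, pid} = Agent.start_link(fn -> {kind, market_ids} end)
        pid
      end

    {:ok, workers}
  end

  @impl true
  def handle_call(:workers, _from, workers), do: {:reply, workers, workers}
end
```

This does propagate crashes, but a supervisor additionally gives the workers a defined shutdown procedure and makes them visible as supervised children in tools such as :observer.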

You can adjust this however you want; these are just the broad strokes. I would just 'let it crash', as per the well-known Erlang/OTP axiom, but you may choose to do it differently.