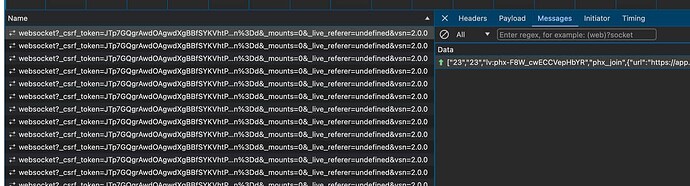

So I just got a new liveview deloyment, using Redis as the pubsub backend while I get cluster forming figured out. The first page render works fine, and there is a phx_join and phx_reply of rendered. After signing in with default phx.gen.auth behavior, I’m getting many phx_join messages sent but no phx_reply. Any idea why this might be?

What does the log of you phoenix app say?

The only error I have in my cloudwatch logs is this:

05:34:59.591 [error] Ranch listener AppWeb.Endpoint.HTTP had connection process started with :cowboy_clear:start_link/4 at #PID<0.3127.0> exit with reason: {:function_clause, [{WebSockAdapter.CowboyAdapter, :terminate, [{:crash, :error, :function_clause}, %{port: 80, scheme: "http", version: :"HTTP/1.1", path: "/live/websocket", host: "app.domain.com", peer: {{0, 0, 0, 0, 0, 65535, 44063, 6462}, 37060}, method: "GET", qs: "_csrf_token=FQJ-CHkAYWVEDy5BejkzQhItNCouEx43ERGNMF55sKcqWQQtzDehxJSP&_track_static%5B0%5D=https%3A%2F%2Fapp.domain.com%2Fassets%2Fapp-4d990432c83d65c7274ba6c2f26005e3.css%3Fvsn%3Dd&_track_static%5B1%5D=https%3A%2F%2Fapp.domain.com%2Fassets%2Fapp-a33e271c2b56bde39457fdd88e722922.js%3Fvsn%3Dd&_mounts=0&_live_referer=undefined&vsn=2.0.0"}, {Phoenix.LiveView.Socket, %{}, {%{channels: %{}, channels_inverse: %{}}, %Phoenix.Socket{assigns: %{}, channel: nil, channel_pid: nil, endpoint: AppWeb.Endpoint, handler: Phoenix.LiveView.Socket, id: "users_sessions:n66qO3JS6Pqw7v2Bcx6iwnQYTasR4SwhP8tDgaNs9cg=", joined: false, join_ref: nil, private: %{connect_info: %{session: %{"_csrf_token" => "PP9F4FTP7DM0-hb6hiQBVYMg", "live_socket_id" => "users_sessions:n66qO3JS6Pqw7v2Bcx6iwnQYTasR4SwhP8tDgaNs9cg=", "user_token" => <<159, 174, 170, 59, 114, 82, 232, 250, 176, 238, 253, 129, 115, 30, 162, 194, 116, 24, 77, 171, 17, 225, ...>>}}}, pubsub_server: [adapter: Phoenix.PubSub.Redis, name: App.PubSub, node_name: "node-0qw2do37A_U=", url: "redis://master.redacted.cache.amazonaws.com:6379", ssl: true], ref: nil, serializer: Phoenix.Socket.V2.JSONSerializer, topic: nil, transport: :websocket, transport_pid: nil}}}], [file: ~c"lib/websock_adapter/cowboy_adapter.ex", line: 52]}, {:cowboy_websocket, :handler_call, 6, [file: ~c"/app/deps/cowboy/src/cowboy_websocket.erl", line: 564]}, {:cowboy_http, :loop, 1, [file: ~c"/app/deps/cowboy/src/cowboy_http.erl", line: 253]}, {:proc_lib, :init_p_do_apply, 3, [file: ~c"proc_lib.erl", line: 241]}]}

Formatting that message helps make it understandable:

{

:function_clause,

[

{

WebSockAdapter.CowboyAdapter,

:terminate,

[

{:crash, :error, :function_clause},

%{

port: 80,

scheme: "http",

version: :"HTTP/1.1",

path: "/live/websocket",

host: "app.domain.com",

peer: {{0, 0, 0, 0, 0, 65535, 44063, 6462}, 37060},

method: "GET",

qs: "_csrf_token=FQJ-CHkAYWVEDy5BejkzQhItNCouEx43ERGNMF55sKcqWQQtzDehxJSP&_track_static%5B0%5D=https%3A%2F%2Fapp.domain.com%2Fassets%2Fapp-4d990432c83d65c7274ba6c2f26005e3.css%3Fvsn%3Dd&_track_static%5B1%5D=https%3A%2F%2Fapp.domain.com%2Fassets%2Fapp-a33e271c2b56bde39457fdd88e722922.js%3Fvsn%3Dd&_mounts=0&_live_referer=undefined&vsn=2.0.0"

},

{

Phoenix.LiveView.Socket,

%{},

{

%{channels: %{}, channels_inverse: %{}},

%Phoenix.Socket{

assigns: %{},

channel: nil,

channel_pid: nil,

endpoint: AppWeb.Endpoint,

handler: Phoenix.LiveView.Socket,

id: "users_sessions:n66qO3JS6Pqw7v2Bcx6iwnQYTasR4SwhP8tDgaNs9cg=",

joined: false,

join_ref: nil,

private: %{

connect_info: %{

session: %{

"_csrf_token" => "PP9F4FTP7DM0-hb6hiQBVYMg",

"live_socket_id" => "users_sessions:n66qO3JS6Pqw7v2Bcx6iwnQYTasR4SwhP8tDgaNs9cg=",

"user_token" => <<159, 174, 170, 59, 114, 82, 232, 250, 176, 238, 253, 129, 115, 30, 162, 194, 116, 24, 77, 171, 17, 225, ...>>

}

}

},

pubsub_server: [

adapter: Phoenix.PubSub.Redis,

name: App.PubSub,

node_name: "node-0qw2do37A_U=",

url: "redis://master.redacted.cache.amazonaws.com:6379",

ssl: true

],

ref: nil,

serializer: Phoenix.Socket.V2.JSONSerializer,

topic: nil,

transport: :websocket,

transport_pid: nil

}

}

}

],

[file: ~c"lib/websock_adapter/cowboy_adapter.ex", line: 52]

},

{:cowboy_websocket, :handler_call, 6, [file: ~c"/app/deps/cowboy/src/cowboy_websocket.erl", line: 564]},

{:cowboy_http, :loop, 1, [file: ~c"/app/deps/cowboy/src/cowboy_http.erl", line: 253]},

{:proc_lib, :init_p_do_apply, 3, [file: ~c"proc_lib.erl", line: 241]}

]

}

This tells us some things:

-

the ultimate cause of the exit was a

function_clauseerror inlib/websock_adapter/cowboy_adapter.ex:52 -

This error happened because

WebSockAdapter.CowboyAdapter.terminate/3only expects 2-tuples in the final argument, but the caller passed a 3-tuple shaped like{Phoenix.LiveView.Socket, %{}, {%{channels: ...}, %Phoenix.Socket{}}}The first element of that tuple is a handler like the module expects.

The third element is a

{state, socket}pair like would be expected withPhoenix.SocketThe second element is ???, and it’s going to be tricky to find where it came from

-

however, this isn’t the root cause - the process was trying to call

terminate/3because something else crashed with afunction_clause_error. Any noises in the logs before the message you posted?

My suspicion is a cascading error like this:

- something messed up the socket GenServer’s state from

{handler, state}to{handler, what_the_heck, state} - that caused the next function call dispatched against that server to fail to match (the first

function_clause_error) - that made the socket try to shut down, which then ALSO failed to match in

terminate/3(the secondfunction_clause_error)

Thank you! Do you know why it would be happening only after the user is authenticated? On the anonymous views I have, there doesn’t seem to be a problem. Seems like it’s crashing after the phx_join message, before it can respond.

What are you doing in the channel that may cause it to crash?

There’s nothing custom actually. The only thing non-default I can think of off the bat is that I’m using the Redis adapter for PubSub.

Can you show the code?

Sure, here’s the endpoint configuration in runtime.ex. As far as I can think at this point, there’s nothing else relating to sockets, pubsub, or presence in the app.

pubsub_server: [

adapter: Phoenix.PubSub.Redis,

name: App.PubSub,

node_name: "node-#{node_indentifier}",

url: redis_url,

ssl: true

]

Sorry, I was confused for a moment and thought it was an issue with channels.

I have never used the Redis adapter, nor have I needed to.

It works if you restore it to the original configuration?

May I ask though why you are using Redis?

You do not need it for PubSub to work.

And if you are worried about clustering, it is easy…

I wrote a post about it sometime back.