Oban Pro v1.5.0-rc.0 is out!

This release includes the new job decorator, unified migrations, an index-backed simple unique mode, changes for distributed PostgreSQL, improved batches, streamlined chains, worker aliases, hybrid job composition, and other performance improvements.

Elixir Support

This release requires a minimum of Elixir v1.14. We officially support 3 Elixir versions back, and use of some newer Elixir and Erlang/OTP features bumped the minimum up to v1.14.

Job Decorator

The new Oban.Pro.Decorator module converts functions into Oban jobs with a teeny-tiny @job true annotation. Decorated functions, such as those in contexts or other non-worker modules, can be executed as fully fledged background jobs with retries, priority, scheduling, uniqueness, and all the other guarantees you have come to expect from Oban jobs.

    defmodule Business do
      use Oban.Pro.Decorator

      @job max_attempts: 3, queue: :notifications
      def activate(account_id) when is_integer(account_id) do
        case Account.fetch(account_id) do
          {:ok, account} ->
            account
            |> notify_admin()
            |> notify_users()

          :error ->
            {:cancel, :not_found}
        end
      end
    end

    # Insert a Business.activate/1 job
    Business.insert_activate(123)

The @job decorator also supports most standard Job options, validated at compile time. As expected, the options can be overridden at runtime through an additional generated clause. Along with generated insert_ functions, there’s also a new_ variant that can be used to build up job changesets for bulk insert, and a relay_ variant that operates like a distributed async/await.

Finally, the generated functions also respect patterns and guards, so you can write assertive clauses that defend against bad inputs or break logic into multiple clauses.
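As a rough sketch of how the generated variants might be combined, the snippet below reuses the Business module from the earlier example. It assumes that runtime overrides are passed as a trailing keyword list to the generated function; that shape is our reading of "an additional generated clause" rather than a confirmed signature, so check the Oban.Pro.Decorator docs before relying on it.

```elixir
# Assumes the Business module shown earlier and a running Oban instance.

# Insert a single job, overriding a decorator option at runtime.
# ASSUMPTION: overrides are accepted as a trailing keyword list.
Business.insert_activate(123, priority: 1)

# Build changesets with the new_ variant, then insert them in bulk
# with the standard Oban.insert_all/1.
[101, 102, 103]
|> Enum.map(&Business.new_activate/1)
|> Oban.insert_all()

# The guard on activate/1 (is_integer/1) still applies to generated
# functions, so a call such as Business.new_activate("101") is expected
# to be rejected rather than enqueued.
```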

Unified Migrations

Oban has had centralized, versioned migrations from the beginning. When there’s a new release with database changes, you run the migrations and it figures out what to change on its own. Pro behaved differently for reasons that made sense when there was a single producers table, but don’t track with multiple tables and custom indexes.

Now Pro has unified migrations to keep all the necessary tables and indexes updated and fresh, and you’ll be warned at runtime if the migrations aren’t current.

See the Oban.Pro.Migration module for more details, or check the v1.5 Upgrade Guide for instructions on putting it to use.
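For orientation, a unified migration might look something like the following Ecto migration. This is a minimal sketch that assumes Oban.Pro.Migration exposes up and down functions in the same style as Oban.Migration; the module name MyApp.Repo.Migrations.AddObanPro is hypothetical, and any options (such as a target version) should be taken from the Upgrade Guide.

```elixir
defmodule MyApp.Repo.Migrations.AddObanPro do
  use Ecto.Migration

  # ASSUMPTION: up/down mirror Oban.Migration's interface. Consult the
  # Oban.Pro.Migration docs for supported options before running this.
  def up, do: Oban.Pro.Migration.up()

  def down, do: Oban.Pro.Migration.down()
end
```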

Enhanced Unique

Oban’s standard unique options are robust, but they require multiple queries and centralized locks to function. Now Pro supports a simplified, opt-in unique mode designed for speed, correctness, scalability, and simplicity.

The enhanced hybrid and simple modes allow slightly fewer options, but they boost insert performance 1.5x-3.5x by reducing database load with fewer queries, they use less memory, and they stay correct across multiple processes and nodes.

Here’s a comparison between inserting various batches with legacy and simple modes:

| jobs | legacy | simple | boost |
| --- | --- | --- | --- |
| 100 | 45.08 | 33.93 | 1.36 |
| 1000 | 140.64 | 81.452 | 1.72 |
| 10000 | 3149.71 | 979.47 | 3.22 |
| 20000 | oom error | 1741.67 | |

See more in the Enhanced Unique section.

Distributed PostgreSQL

There were a handful of PostgreSQL features used in Oban and Pro that prevented them from running on distributed PostgreSQL databases such as Yugabyte.

A few table creation options prevented even running the migrations due to unsupported database features. Then there were advisory locks, which are part of how Oban normally handles unique jobs, and how Pro coordinates queues globally.

We’ve worked around all of these limitations, and it’s possible to run Oban and Pro on Yugabyte with most of the same functionality as regular PostgreSQL (global limits, rate limits, queue partitioning).

Improved Batches

One of Pro’s original three features, batches link the execution of many jobs as a group and run optional callback jobs after jobs are processed.

Composing batches used to rely on a dedicated worker, one that couldn’t be composed with other worker types. Now, there’s a stand-alone Oban.Pro.Batch module that’s used to dynamically build, append, and manipulate batches from any type of job, and with much more functionality.

Batches gain support for streams (both creating and appending with them), clearer callbacks, and the ability to set any Oban.Job option on callback jobs.

    alias Oban.Pro.Batch

    mail_jobs = Enum.map(mail_args, &MyApp.MailWorker.new/1)
    push_jobs = Enum.map(push_args, &MyApp.PushWorker.new/1)

    [callback_opts: [priority: 9], callback_worker: CallbackWorker]
    |> Batch.new()
    |> Batch.add(mail_jobs)
    |> Batch.add(push_jobs)

See more in the Batch docs.

Streamlined Chains

Chains now operate like workflows, where jobs are scheduled until they’re ready to run and then descheduled after the previous link in the chain completes. Preemptive chaining doesn’t clog queues with waiting jobs, and it chews through a backlog without any polling.

Chains are also a standard Oban.Pro.Worker option now. There’s no need to define a chain-specific worker; in fact, doing so is deprecated. Just add the chain option and you’re guaranteed a FIFO chain of jobs:

    - use Oban.Pro.Workers.Chain, by: :worker
    + use Oban.Pro.Worker, chain: [by: :worker]

See more in the Chained Jobs section.

Improved Workflows

Workflows began the transition from a dedicated worker to a stand-alone module several versions ago. Now that transition is complete, and workflows can be composed from any type of job.

All workflow management functions have moved to a centralized Oban.Pro.Workflow module. An expanded set of functions, including the ability to cancel an entire workflow, conveniently work with either a workflow job or id, so it’s possible to maneuver workflows from anywhere.

Perhaps the most exciting addition, because it’s visual and we like shiny things, is mermaid output for visualization. Mermaid has become the graphing standard, and it’s an excellent way to visualize workflows in tools like Livebook.

    alias Oban.Pro.Workflow

    workflow =
      Workflow.new()
      |> Workflow.add(:a, EchoWorker.new(%{id: 1}))
      |> Workflow.add(:b, EchoWorker.new(%{id: 2}), deps: [:a])
      |> Workflow.add(:c, EchoWorker.new(%{id: 3}), deps: [:b])
      |> Workflow.add(:d, EchoWorker.new(%{id: 4}), deps: [:c])

    Oban.insert_all(workflow)

    Workflow.cancel_jobs(workflow.id)

Worker Aliases

Worker aliases solve a perennial production issue—how to rename workers without breaking existing jobs. Aliasing allows jobs enqueued with the original worker name to continue executing without exceptions using the new worker code.

    -defmodule MyApp.UserPurge do
    +defmodule MyApp.DataPurge do
    -  use Oban.Pro.Worker
    +  use Oban.Pro.Worker, aliases: [MyApp.UserPurge]

See more in the Worker Aliases section.

v1.5.0-rc.0 — 2024-07-26

Enhancements

-

[Smart] Implement check_available/1 engine callback for faster staging queries.

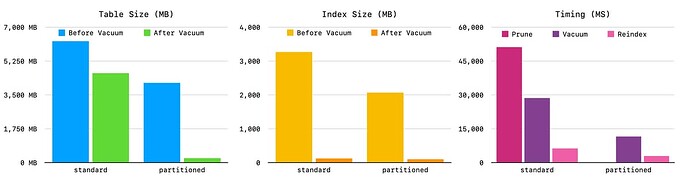

The smart engine defines a custom check_available/1 callback that vastly outperforms the Basic implementation on large tables. This table illustrates the difference in a benchmark of 24 queues with an event split of available and completed jobs on a local database with no additional load:

| jobs |

original |

optimized |

boost |

| 2.4m |

107.79ms |

0.72ms |

149x |

| 4.8m |

172.10ms |

1.15ms |

149x |

| 7.2m |

242.32ms |

4.28ms |

56x |

| 9.6m |

309.46ms |

7.89ms |

39x |

The difference in production may be much greater.

Worker

- [Worker] Add before_process/1 callback.

  The new callback is applied before process/1 is called, and is able to modify the job or stop processing entirely. Like after_process, it executes in the job’s process after all internal processing (encryption, structuring) is applied.

- [Worker] Avoid re-running stages during after_process/1 hooks.

  Stages such as structuring and encryption are only run once during execution, then the output is reused in hooks.

- [Worker] Prevent compile time dependencies in worker hooks.

  Explicit worker hooks are now aliased to prevent a compile time dependency. Initial validation for explicit hooks is reduced as a result, but it retains checks for global hooks.
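To make the before_process/1 entry above more concrete, here is a hedged sketch of a worker that refuses to run without a required argument. The module name MyApp.ReportWorker, the queue, and the "account_id" argument are hypothetical. The return values are also an assumption: we take {:ok, job} to mean "continue with this job" and a cancel tuple to mean "stop before process/1", so confirm the contract in the Oban.Pro.Worker docs.

```elixir
defmodule MyApp.ReportWorker do
  use Oban.Pro.Worker, queue: :reports

  # ASSUMPTION: before_process/1 returns {:ok, job} to continue (optionally
  # with a modified job) or a {:cancel, reason} tuple to stop early.
  @impl Oban.Pro.Worker
  def before_process(%Oban.Job{args: args} = job) do
    if Map.has_key?(args, "account_id") do
      {:ok, job}
    else
      {:cancel, :missing_account_id}
    end
  end

  # Only reached when before_process/1 allowed the job through.
  @impl Oban.Pro.Worker
  def process(%Oban.Job{args: %{"account_id" => account_id}}) do
    {:ok, account_id}
  end
end
```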

Batch

- [Batch] Add new callbacks with clearer, batch-specific names.

  The old handle_ names aren’t clear in the context of hybrid job compositions. This introduces new batch_ prefixed callbacks for the Oban.Pro.Batch module. The legacy callbacks are still handled for backward compatibility.

- [Batch] Add from_workflow/2 for simplified Batch/Workflow composition.

  Create a batch from a workflow by augmenting all jobs to also be part of a batch.

- [Batch] Add cancel_jobs/2 for easy cancellation of an entire batch.

- [Batch] Add append/2 for extending batches with new jobs.

- [Batch] Uniformly accept job or batch_id in all functions.

  Now it’s possible to fetch batch jobs from within a process/1 block via a Job struct, or from anywhere in code with the batch_id alone.

Workflow

- [Workflow] Add get_job/3 for fetching a single dependent job.

  Internally it uses all_jobs/3, but the name and arguments make it clear that this is the way to get a single workflow job.

- [Workflow] Add cancel_jobs/3 for easy cancellation of an entire workflow.

  The new helper function simplifies cancelling full or partial workflows using the same query logic behind all_jobs and stream_jobs.

- [Workflow] Drop optional dependency on libgraph.

  The libgraph dependency hasn’t had a new release in years and is no longer necessary; we can build the same simple graph with the digraph module available in OTP.

- [Workflow] Add to_mermaid/1 for mermaid graph output.

  Now that we’re using digraph we can also take control of rendering our own output, such as mermaid.

- [Workflow] Uniformly accept job or workflow_id in all functions.

  Now it’s possible to fetch workflow jobs from within a process/1 block via a Job struct, or from anywhere in code with the workflow_id alone.
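To illustrate the general idea behind the digraph and mermaid entries above, the following stand-alone sketch builds a small dependency graph with OTP's :digraph and renders it as Mermaid flowchart text. It is not Pro's implementation; the WorkflowGraph module and its build/1 and to_mermaid/1 functions are hypothetical names used only for this example.

```elixir
defmodule WorkflowGraph do
  # Illustrative only: a tiny dependency graph on top of OTP's :digraph.

  # `steps` is a list of {name, deps} tuples, e.g. [{:a, []}, {:b, [:a]}].
  def build(steps) do
    # The :acyclic option makes :digraph reject edges that would form a cycle.
    graph = :digraph.new([:acyclic])

    # Add every vertex first so edges can reference steps in any order.
    Enum.each(steps, fn {name, _deps} -> :digraph.add_vertex(graph, name) end)

    for {name, deps} <- steps, dep <- deps do
      # An edge from dep to name means "name runs after dep".
      case :digraph.add_edge(graph, dep, name) do
        {:error, reason} ->
          raise ArgumentError, "invalid dependency: #{inspect(reason)}"

        _edge ->
          :ok
      end
    end

    graph
  end

  # Renders one Mermaid line per edge; steps without edges are omitted.
  def to_mermaid(graph) do
    edges =
      graph
      |> :digraph.edges()
      |> Enum.map(fn edge ->
        {_edge, from, to, _label} = :digraph.edge(graph, edge)
        "    #{from} --> #{to}"
      end)
      |> Enum.sort()

    Enum.join(["flowchart TD" | edges], "\n")
  end
end

graph = WorkflowGraph.build([{:a, []}, {:b, [:a]}, {:c, [:b]}, {:d, [:c]}])
IO.puts(WorkflowGraph.to_mermaid(graph))

# :digraph stores its data in ETS tables, so release it when finished.
:digraph.delete(graph)
```

The printed text can be pasted into any Mermaid renderer, such as a Livebook Mermaid cell, to see the chain of steps a through d.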

Bug Fixes

- [Smart] Require a global queue lock with any flush handlers.

  Flush handlers for batches, chains, and workflows must be staggered without overlapping transactions. Without a mutex to stagger fetching, transactions on different nodes can’t see all completed jobs, and handlers can misfire.

  This is most apparent with batches, as they don’t have a lifeline to clean up after an issue, as workflows do.

- [DynamicPruner] Use state-specific timestamps when pruning.

  Previously, the most recent timestamp was used rather than the timestamp for the job’s current state.

- [Workflow] Prevent incorrect workflow_id types by validating options passed to new/1.

Deprecations

- [Oban.Pro.Workers.Batch] The worker is deprecated in favor of composing with the new Oban.Pro.Batch module.

- [Oban.Pro.Workers.Chain] The worker is deprecated in favor of composing with the new chain option in Oban.Pro.Worker.

- [Oban.Pro.Workers.Workflow] The worker is deprecated in favor of composing with the new Oban.Pro.Workflow module.
