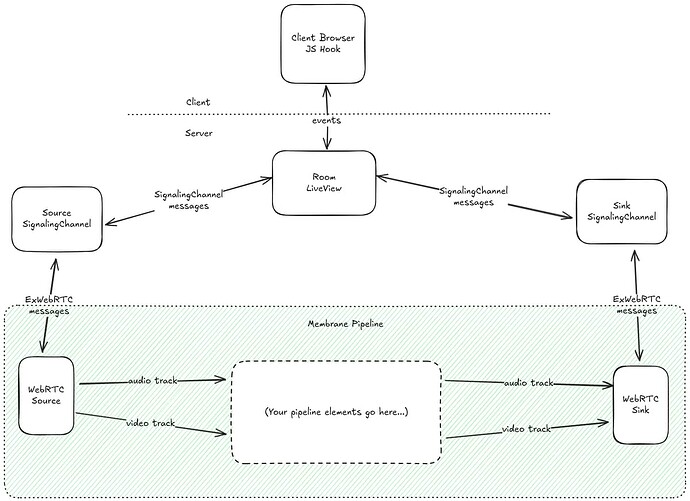

I'm interested in using the Membrane Framework as a proxy for the OpenAI Realtime API, and the livebook provided in the examples does almost exactly what I need.

What I'm struggling to understand is how this would work in an actual production environment.

In particular, I noticed that the pipeline started here:

```elixir
{:ok, _supervisor, pipeline} =
  Membrane.Pipeline.start_link(OpenAIPipeline,
    openai_ws_opts: openai_ws_opts,
    webrtc_source_ws_port: 8829,
    webrtc_sink_ws_port: 8831
  )
```

accepts only one connection, and when that connection drops, it shuts down with:

```
(ex_webrtc 0.4.1) lib/ex_webrtc/dtls_transport.ex:313: ExWebRTC.DTLSTransport.handle_ice_data/2
(ex_webrtc 0.4.1) lib/ex_webrtc/dtls_transport.ex:287: ExWebRTC.DTLSTransport.handle_info/2
(stdlib 6.0.1) gen_server.erl:2173: :gen_server.try_handle_info/3
(stdlib 6.0.1) gen_server.erl:2261: :gen_server.handle_msg/6
(stdlib 6.0.1) proc_lib.erl:329: :proc_lib.init_p_do_apply/3
Last message: {:ex_ice, #PID<0.992.0>, {:data, <<21, 254, 253, 0, 1, 0, 0, 0, 0, 0, 1, 0, 26, 0, 1, 0, 0, 0, 0, 0, 1, 174, 202, 11, 139, 249, 54, 50, 51, 36, 148, 29, 72, 144, 63, 215, 19, 63, 224>>}}

12:18:34.765 [error] GenServer #PID<0.1051.0> terminating
** (stop) {[], []}
Last message: {:ex_ice, #PID<0.1050.0>, {:data, <<21, 254, 253, 0, 1, 0, 0, 0, 0, 0, 1, 0, 18, 11, 40, 203, 221, 56, 144, 220, 98, 1, 229, 33, 223, 76, 248, 36, 232, 117, 65>>}}

12:18:34.773 [error] <0.325.0>/:webrtc_sink/ Terminating with reason: {:membrane_child_crash, :webrtc, {:timeout_value, [{:gen_server, :loop, 7, [file: ~c"gen_server.erl", line: 2078]}, {ExDTLS, :handle_data, 2, [file: ~c"lib/ex_dtls.ex", line: 168]}, {ExWebRTC.DTLSTransport, :handle_ice_data, 2, [file: ~c"lib/ex_webrtc/dtls_transport.ex", line: 313]}, {ExWebRTC.DTLSTransport, :handle_info, 2, [file: ~c"lib/ex_webrtc/dtls_transport.ex", line: 287]}, {:gen_server, :try_handle_info, 3, [file: ~c"gen_server.erl", line: 2173]}, {:gen_server, :handle_msg, 6, [file: ~c"gen_server.erl", line: 2261]}, {:proc_lib, :init_p_do_apply, 3, [file: ~c"proc_lib.erl", line: 329]}]}}

12:18:34.773 [error] <0.325.0>/ Terminating with reason: {:membrane_child_crash, :webrtc_sink, {:membrane_child_crash, :webrtc, {:timeout_value, [{:gen_server, :loop, 7, [file: ~c"gen_server.erl", line: 2078]}, {ExDTLS, :handle_data, 2, [file: ~c"lib/ex_dtls.ex", line: 168]}, {ExWebRTC.DTLSTransport, :handle_ice_data, 2, [file: ~c"lib/ex_webrtc/dtls_transport.ex", line: 313]}, {ExWebRTC.DTLSTransport, :handle_info, 2, [file: ~c"lib/ex_webrtc/dtls_transport.ex", line: 287]}, {:gen_server, :try_handle_info, 3, [file: ~c"gen_server.erl", line: 2173]}, {:gen_server, :handle_msg, 6, [file: ~c"gen_server.erl", line: 2261]}, {:proc_lib, :init_p_do_apply, 3, [file: ~c"proc_lib.erl", line: 329]}]}}}
```

Is this the expected behavior?

If so, how would I use this in production? Should I reserve a range of ports, start a pool of pipelines, and assign clients to them?
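To make the pool idea concrete, this is roughly what I have in mind. `PortPool` is my own hypothetical helper, not anything from Membrane: an `Agent` that hands out `{source_port, sink_port}` pairs from a reserved range so each client gets its own pipeline. The spacing of 4 between pairs is an arbitrary assumption that just mirrors the 8829/8831 ports from the livebook.

```elixir
defmodule PortPool do
  # Hypothetical helper (not part of Membrane): an Agent holding the free
  # {source_port, sink_port} pairs from a reserved port range.

  # Reserves `count` pairs starting at `base`, e.g. {8829, 8831}, {8833, 8835}, ...
  def start_link(base, count)
      when is_integer(base) and is_integer(count) and count > 0 do
    pairs = for i <- 0..(count - 1), do: {base + 4 * i, base + 4 * i + 2}
    Agent.start_link(fn -> pairs end)
  end

  # Takes a free pair, or returns {:error, :exhausted} when all are in use.
  def checkout(pool) do
    Agent.get_and_update(pool, fn
      [] -> {{:error, :exhausted}, []}
      [pair | rest] -> {{:ok, pair}, rest}
    end)
  end

  # Gives a pair back once the pipeline that used it has terminated.
  def checkin(pool, pair), do: Agent.update(pool, fn free -> [pair | free] end)
end
```

Each client's pipeline would then be started with the checked-out ports, i.e. `Membrane.Pipeline.start_link(OpenAIPipeline, openai_ws_opts: openai_ws_opts, webrtc_source_ws_port: src, webrtc_sink_ws_port: sink)`, and the pair returned with `checkin/2` once that pipeline goes down. I'm not sure this is the intended pattern, which is really what I'm asking.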

And what about authenticating users? I guess I would need to generate a token that they pass in the WebSocket URL, but how do I hook up the server-side code to validate it?
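The validation part on its own I can picture. Something like the sketch below, where `ClientToken`, the `user_id.mac` token format, and the shared secret are all my own assumptions (an HMAC-SHA256 over the user id using Erlang's `:crypto`, with no expiry). If Phoenix is already in the project, `Phoenix.Token` with `max_age` would probably be the better choice. What I can't figure out is where in the pipeline's WebSocket handling I would actually call `verify/2`.

```elixir
defmodule ClientToken do
  # Hypothetical sketch (not a Membrane API): token = base64url(user_id) <> "." <> base64url(hmac).
  # No expiry is encoded; a real token should carry a timestamp as well.

  def sign(user_id, secret) when is_binary(user_id) and is_binary(secret) do
    mac = :crypto.mac(:hmac, :sha256, secret, user_id)
    Base.url_encode64(user_id, padding: false) <> "." <> Base.url_encode64(mac, padding: false)
  end

  def verify(token, secret) when is_binary(token) and is_binary(secret) do
    with [id_b64, mac_b64] <- String.split(token, ".", parts: 2),
         {:ok, user_id} <- Base.url_decode64(id_b64, padding: false),
         {:ok, mac} <- Base.url_decode64(mac_b64, padding: false),
         expected = :crypto.mac(:hmac, :sha256, secret, user_id),
         # :crypto.hash_equals/2 raises on binaries of different sizes, so check first.
         true <- byte_size(mac) == byte_size(expected),
         # Constant-time comparison (OTP 25+).
         true <- :crypto.hash_equals(mac, expected) do
      {:ok, user_id}
    else
      _ -> {:error, :invalid_token}
    end
  end
end
```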

Any hints much appreciated <3