Hi all,

I’ve run the TechEmpower Fortunes benchmark locally with Elixir/Phoenix and Go/fasthttp.

I removed all unnecessary code from both the Elixir and Go versions.

I’m trying to get the best performance out of the Elixir/Phoenix version.

Tuning for Elixir/Phoenix:

- disabled all unnecessary plugs in router.ex:

      pipeline :browser do
        plug :accepts, ["html"]
      end

- set DB adapter pool size to 40

- used the same HTML template as Go

- set logging to error

- compiled protocols

- start server: MIX_ENV=prod PORT=4000 elixir -S mix phx.server
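
For reference, the pool size and logging items from the list above would look roughly like this in the prod config. This is only a sketch: `:my_app` and `MyApp.Repo` are placeholder names rather than the ones in my project, and `import Config` assumes Elixir 1.9 or later (older projects use `use Mix.Config` instead).

```elixir
# config/prod.exs (sketch; :my_app and MyApp.Repo are placeholder names)
import Config

# Only log errors, so request logging stays out of the hot path.
config :logger, level: :error

# Ecto repo connection pool size, matching the value of 40 listed above.
config :my_app, MyApp.Repo,
  pool_size: 40
```

The remaining items (the trimmed router pipeline and the shared template) live in the application code rather than in config.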

No tuning for Go.

I use wrk to perform the load tests:

wrk -t6 -c12000 -d60s -T10s http://.../fortune

The client is a physical machine and the server is a domU (2 vCPUs, 4 GB RAM). OS: FreeBSD. I did 10 runs for each load test.

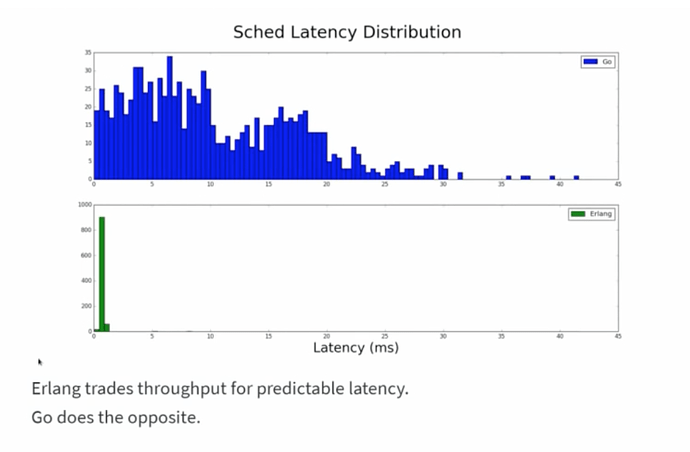

For Elixir/Phoenix:

- Average latency 408ms, max 8s

- Req/s 2400

For Golang:

- Average latency 123ms, max 1s

- Req/s 8600

I did my first Go tests with net/http and peaked at 4000 req/s, so I replaced it with fasthttp and got 8500+ req/s.

Are there faster alternatives to Cowboy?

How do you fine-tune a production environment? What OS is best suited for the Erlang VM? Which file system? Are there specific OS tunables?

Thanks