We are running 6 nodes with 16 cores and 26GB RAM to serve our app to about 100k concurrent users. Our business logics are depended heavily on Phoenix Channel.

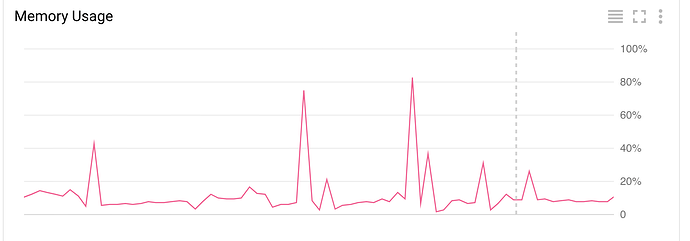

We are experiencing serious issues with app stability recently. Running nodes suddenly spike up to 100% ram within minutes and crash without any clear trace. Previously, I can trace and solve all issues using erl_crash.dump but this time it does not work anymore. I tried to double CPU and RAM and still experience same issue

crashdumps usually point out this

Slogan: eheap_alloc: Cannot allocate 36584552816 bytes of memory (of type "heap_frag").

System version: Erlang/OTP 20 [erts-9.3] [source] [64-bit] [smp:14:14] [ds:14:14:10] [async-threads:1024] [hipe] [kernel-poll:true]

Compiled: Mon Mar 19 14:58:42 2018

Taints: asn1rt_nif,crypto,Elixir.Comeonin.Bcrypt,dyntrace,erl_tracer,zlib

Atoms: 48690

Calling Thread: scheduler:11

=scheduler:11

Scheduler Sleep Info Flags:

Scheduler Sleep Info Aux Work: DD | DD_THR_PRGR | FIX_ALLOC_DEALLOC | FIX_ALLOC_LOWER_LIM | THR_PRGR_LATER_OP | CNCLD_TMRS | CNCLD_TMRS_THR_PRGR | MISC | MSEG_CACHE_CHECK

Current Port:

Run Queue Max Length: 0

Run Queue High Length: 0

Run Queue Normal Length: 0

Run Queue Low Length: 0

Run Queue Port Length: 0

Run Queue Flags: NONEMPTY | EXEC

Current Process: <0.21328.3>

Current Process State: Running

Current Process Internal State: ACT_PRIO_NORMAL | USR_PRIO_NORMAL | PRQ_PRIO_NORMAL | ACTIVE | RUNNING | ON_HEAP_MSGQ

Current Process Program counter: 0x00007fe09fbd4eb0 ('Elixir.Ecto.Repo.Preloader':split_while/4 + 24)

Current Process CP: 0x0000000000000000 (invalid)

Current Process Limited Stack Trace:

0x00007fe003a0d800:SReturn addr 0x710AE820 (proc_lib:init_p_do_apply/3 + 72)

0x00007fe003a0d818:SReturn addr 0x914BA8 (<terminate process normally>)

but sometimes, it just returns zero calling thread scheduler

Slogan: eheap_alloc: Cannot allocate 9794840320 bytes of memory (of type "heap").

System version: Erlang/OTP 20 [erts-9.3] [source] [64-bit] [smp:12:12] [ds:12:12:10] [async-threads:1024] [hipe] [kernel-poll:true]

Compiled: Mon Mar 19 14:58:42 2018

Taints: asn1rt_nif,crypto,Elixir.Comeonin.Bcrypt,dyntrace,erl_tracer,zlib

Atoms: 48683

Calling Thread: scheduler:0

I tried few logging tools like scout and recon but when the crash is about to happened, the request is always hanged and crashed together with the node

Did anyone experience this situation? Any suggestion is welcomed. Thank you for reading!