Hello there,

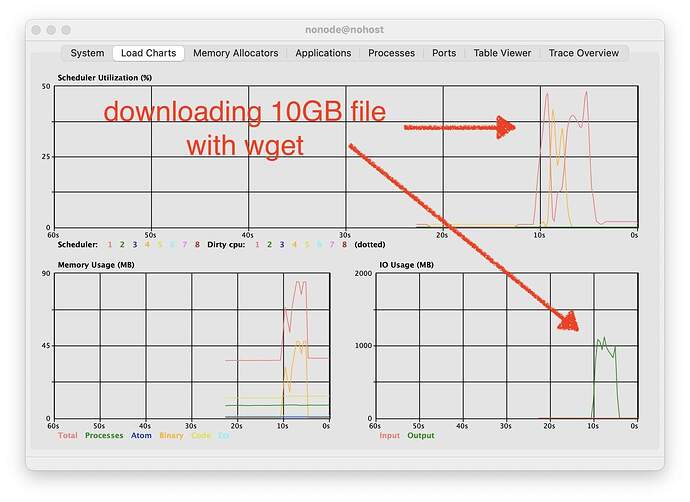

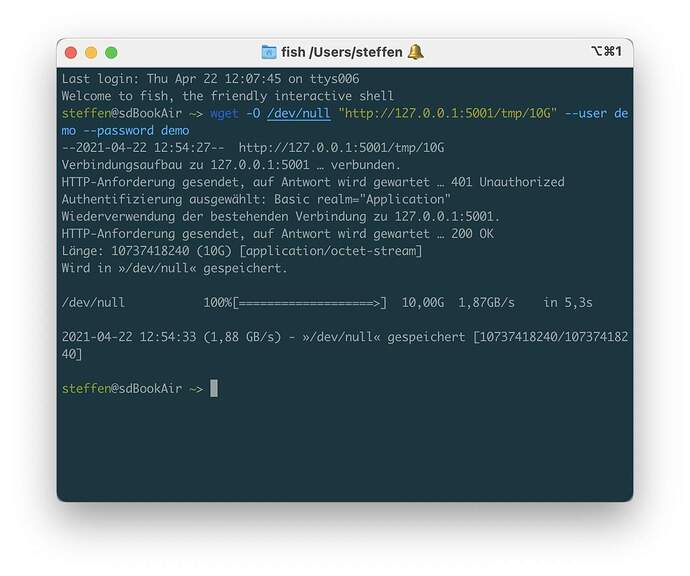

I’m currently working on implementing a WebDAV server using Plug/Cowboy, inspired by the great Python wsgidav library. So far, read-only access works fine, and when downloading files using Plug.Conn.chunk I’m seeing very good performance (1.88 GB/s, that is gigabytes per second, from a local SSD to /dev/null) with less than 50% scheduler utilization:

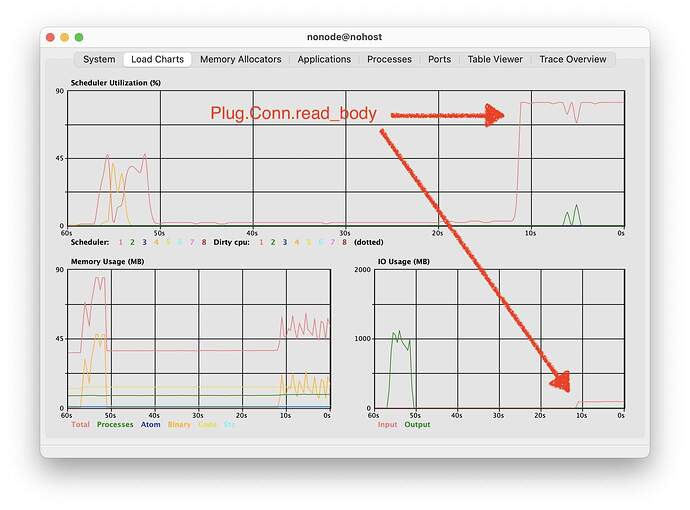

But while implementing initial write support, I’ve noticed that the other direction, using Plug.Conn.read_body, maxes out at about 200 MB/s, even when just discarding the output.

You can try this yourself by cloning the repository (write_support branch) and running the demo project from the demo folder. After the usual mix deps.get step, starting the server is as easy as running iex -S mix.

For uploading, you can use curl -X PUT -F 'data=@/dev/random' http://demo:demo@127.0.0.1:5001/tmp/test.null.

For downloading, first create/download a sufficiently large file and then use wget -O /dev/null "http://127.0.0.1:5001/tmp/10G" --user demo --password demo (replace /tmp/10G with the file path).

I tried tweaking the Plug.Conn.read_body opts, but did not find anything that improved this.
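For reference, here is a minimal sketch of the kind of read loop in question. It is not the code from the repository: the module name BodyDrain and the returned stats map are made up for illustration, and the option values shown are simply the documented Plug.Conn.read_body/2 defaults, not settings known to help. The read function is injectable only so the loop can be exercised without a running server.

```elixir
defmodule BodyDrain do
  @moduledoc false

  # Documented defaults of Plug.Conn.read_body/2, spelled out so they are
  # easy to vary when experimenting. These values are a starting point only.
  @default_opts [length: 8_000_000, read_length: 1_000_000, read_timeout: 15_000]

  # Reads the whole request body, discards it, and reports how many bytes
  # were seen and the rough throughput in MB/s (1 MB = 10^6 bytes).
  def drain(conn, opts \\ @default_opts, read_fun \\ &Plug.Conn.read_body/2) do
    started = System.monotonic_time(:microsecond)
    {status, bytes, conn} = loop(conn, opts, read_fun, 0)
    elapsed_us = max(System.monotonic_time(:microsecond) - started, 1)

    # bytes per microsecond equals 10^6 bytes per second, i.e. MB/s
    {status, %{bytes: bytes, mb_per_s: bytes / elapsed_us}, conn}
  end

  defp loop(conn, opts, read_fun, total) do
    case read_fun.(conn, opts) do
      # More data pending: count this chunk, drop it, and keep reading
      {:more, chunk, conn} -> loop(conn, opts, read_fun, total + byte_size(chunk))
      # Final chunk of the body
      {:ok, chunk, conn} -> {:ok, total + byte_size(chunk), conn}
      # Read failed (for example a timeout); return what was counted so far
      {:error, reason} -> {{:error, reason}, total, conn}
    end
  end
end
```

In a plug, this could be called as BodyDrain.drain(conn) from the PUT handler. Passing a different keyword list as the second argument makes it easy to compare option combinations against each other.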

Maybe someone with deeper insight into Plug/Cowboy has an idea why the Plug.Conn.read_body direction performs so badly. I’d expect both directions to have roughly the same performance and scheduler usage.

Thanks for any hints!