I’m glad to release two Elixir libraries related to HTTP caching.

PlugHTTPCache is a simple plug that automatically caches HTTP responses and returns them directly when they’re present in the cache. Just add it to your Phoenix router:

```elixir
pipeline :cache do
  plug PlugHTTPCache, @caching_options
end

...

scope "/", PlugHTTPCacheDemoWeb do
  pipe_through :browser

  scope "/some_route" do
    pipe_through :cache

    ...
  end
end
```

and HTTP caching is enabled.

TeslaHTTPCache is, you guessed it, a Tesla middleware that caches HTTP responses. It can also efficiently re-request stored resources (that is, revalidate them) using HTTP validators (the `if-modified-since` and `if-none-match` request headers).

```elixir
iex> client = Tesla.client([{TeslaHTTPCache, store: :http_cache_store_process}])
%Tesla.Client{
  adapter: nil,
  fun: nil,
  post: [],
  pre: [{TeslaHTTPCache, :call, [[store: :http_cache_store_process]]}]
}

iex> Tesla.get!(client, "http://perdu.com")
%Tesla.Env{
  __client__: %Tesla.Client{
    adapter: nil,
    fun: nil,
    post: [],
    pre: [{TeslaHTTPCache, :call, [[store: :http_cache_store_process]]}]
  },
  __module__: Tesla,
  body: "<html><head><title>Vous Etes Perdu ?</title></head><body><h1>Perdu sur l'Internet ?</h1><h2>Pas de panique, on va vous aider</h2><strong><pre> * <----- vous êtes ici</pre></strong></body></html>\n",
  headers: [
    {"cache-control", "max-age=600"},
    {"date", "Wed, 29 Jun 2022 12:23:18 GMT"},
    {"accept-ranges", "bytes"},
    {"etag", "\"cc-5344555136fe9\""},
    {"server", "Apache"},
    {"vary", "Accept-Encoding,User-Agent"},
    {"content-type", "text/html"},
    {"expires", "Wed, 29 Jun 2022 12:33:18 GMT"},
    {"last-modified", "Thu, 02 Jun 2016 06:01:08 GMT"},
    {"content-length", "204"}
  ],
  method: :get,
  opts: [],
  query: [],
  status: 200,
  url: "http://perdu.com"
}

iex> Tesla.get!(client, "http://perdu.com")
%Tesla.Env{
  __client__: %Tesla.Client{
    adapter: nil,
    fun: nil,
    post: [],
    pre: [{TeslaHTTPCache, :call, [[store: :http_cache_store_process]]}]
  },
  __module__: Tesla,
  body: "<html><head><title>Vous Etes Perdu ?</title></head><body><h1>Perdu sur l'Internet ?</h1><h2>Pas de panique, on va vous aider</h2><strong><pre> * <----- vous êtes ici</pre></strong></body></html>\n",
  headers: [
    {"cache-control", "max-age=600"},
    {"date", "Wed, 29 Jun 2022 12:23:18 GMT"},
    {"accept-ranges", "bytes"},
    {"etag", "\"cc-5344555136fe9\""},
    {"server", "Apache"},
    {"vary", "Accept-Encoding,User-Agent"},
    {"content-type", "text/html"},
    {"expires", "Wed, 29 Jun 2022 12:33:18 GMT"},
    {"last-modified", "Thu, 02 Jun 2016 06:01:08 GMT"},
    {"content-length", "204"},
    {"age", "4"}
  ],
  method: :get,
  opts: [],
  query: [],
  status: 200,
  url: "http://perdu.com"
}
```

(Note the `age` header in the second response: that response was returned directly from the cache.)
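The `age` header is what determines whether a stored response can still be reused. The response above has `max-age=600`, so at an age of 4 seconds it is still fresh. Once it becomes stale, a cache can revalidate it by sending the stored validators back to the server. The sketch below illustrates both steps in simplified form. It is not TeslaHTTPCache's or http_cache's actual code, and the `HTTPCacheSketch` module and its functions are hypothetical. It only looks at `max-age`, ignoring `s-maxage`, `expires`, `no-cache`, heuristic freshness and so on, and it assumes lowercase header names.

```elixir
defmodule HTTPCacheSketch do
  # Hypothetical module for illustration; not part of TeslaHTTPCache or http_cache.
  # Headers are lists of {name, value} tuples with lowercase names, as in Tesla.Env.

  # Extracts the max-age directive (in seconds) from cache-control, or nil.
  def max_age(headers) do
    with {_, cache_control} <- List.keyfind(headers, "cache-control", 0),
         [_, seconds] <- Regex.run(~r/(?:^|,)\s*max-age=(\d+)/, cache_control) do
      String.to_integer(seconds)
    else
      _ -> nil
    end
  end

  # A stored response is fresh while its current age is below its
  # freshness lifetime (here: max-age only; no max-age means not fresh).
  def fresh?(headers, current_age) do
    case max_age(headers) do
      nil -> false
      lifetime -> current_age < lifetime
    end
  end

  # Builds the conditional request headers used to revalidate a stale response:
  # the etag is sent back as if-none-match, last-modified as if-modified-since.
  def conditional_headers(headers) do
    [{"etag", "if-none-match"}, {"last-modified", "if-modified-since"}]
    |> Enum.flat_map(fn {validator, conditional} ->
      case List.keyfind(headers, validator, 0) do
        {_, value} -> [{conditional, value}]
        nil -> []
      end
    end)
  end
end

# Usage with (a subset of) the perdu.com response headers shown above:
headers = [
  {"cache-control", "max-age=600"},
  {"etag", "\"cc-5344555136fe9\""},
  {"last-modified", "Thu, 02 Jun 2016 06:01:08 GMT"}
]

HTTPCacheSketch.fresh?(headers, 4)
# => true
HTTPCacheSketch.conditional_headers(headers)
# => [{"if-none-match", "\"cc-5344555136fe9\""}, {"if-modified-since", "Thu, 02 Jun 2016 06:01:08 GMT"}]
```

If the server then answers `304 Not Modified`, the cache can keep serving its stored body instead of downloading it again.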

Both these libraries rely on the lower-level http_cache Erlang library, which deals with:

- analyzing responses (cacheable or not)
- returning the right response (taking into account freshness and the `vary` HTTP header)
- replying to conditional requests
- transforming the response: (de)compression & range requests
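Here is what "taking into account the `vary` header" means in practice. The perdu.com response above carries `vary: Accept-Encoding,User-Agent`. A stored copy may therefore only be reused for a request whose `accept-encoding` and `user-agent` headers match those of the request that produced it. The sketch below shows that check. It is hypothetical and simplified, not http_cache's code: it compares raw header values (HTTP caches may normalize them first), and it treats `vary: *` as never matching.

```elixir
defmodule VarySketch do
  # Hypothetical helper, not http_cache's implementation.
  # Request headers are maps with lowercase names; a missing header is nil.

  # Returns true if a response stored for `stored_request` can be reused for
  # `new_request`, given the value of the stored response's vary header.
  def matches?(vary, stored_request, new_request) do
    names =
      vary
      |> String.split(",", trim: true)
      |> Enum.map(&(&1 |> String.trim() |> String.downcase()))

    if "*" in names do
      # "vary: *" means the stored response can never be selected.
      false
    else
      # Every header named in vary must have the same value in both requests.
      Enum.all?(names, fn name ->
        Map.get(stored_request, name) == Map.get(new_request, name)
      end)
    end
  end
end

stored = %{"accept-encoding" => "gzip", "user-agent" => "curl/8.0"}

VarySketch.matches?("Accept-Encoding,User-Agent", stored, stored)
# => true
VarySketch.matches?("Accept-Encoding,User-Agent", stored, %{stored | "user-agent" => "Firefox"})
# => false
```

Varying on `user-agent` as perdu.com does fragments the cache heavily. Each distinct user agent gets its own stored variant.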

http_cache itself is stateless and cannot store responses (except in the process dictionary, for tests: you might have noticed the `store: :http_cache_store_process` option above), so these libraries also come with a store: http_cache_store_native.

This is a stateful LRU store that uses native BEAM capabilities to store responses and, optionally, to broadcast cached responses within a cluster. Cached responses are stored in memory (ETS). This store also supports invalidation by URL and by alternate key (a key that you can attach to a response so that, for example, all images can be invalidated at once). And of course, it takes care of removing expired responses and nuking the oldest cached responses when the cache is full.
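The sketch below gives a rough idea of invalidation by alternate key. It is hypothetical and much simplified, backed by ETS. Each entry is stored under its URL together with the list of alternate keys attached to it, and invalidating an alternate key deletes every entry tagged with it. This is not http_cache_store_native's API or implementation. In particular, it scans the whole table on alternate-key invalidation (a real store would more likely maintain an index), and it does no LRU eviction or cluster broadcasting.

```elixir
defmodule AltKeyStoreSketch do
  # Hypothetical sketch; not the http_cache_store_native API.
  # Entries are {url, response, alt_keys} tuples in an ETS set keyed by URL.

  def new, do: :ets.new(:alt_key_store_sketch, [:set, :public])

  def put(table, url, response, alt_keys \\ []) do
    true = :ets.insert(table, {url, response, alt_keys})
    :ok
  end

  def get(table, url) do
    case :ets.lookup(table, url) do
      [{^url, response, _alt_keys}] -> {:ok, response}
      [] -> :miss
    end
  end

  # Invalidation by URL: delete the single entry stored under that key.
  def invalidate_url(table, url) do
    true = :ets.delete(table, url)
    :ok
  end

  # Invalidation by alternate key: delete every entry tagged with alt_key
  # and return how many were deleted (full-table scan, for simplicity).
  def invalidate_alt_key(table, alt_key) do
    urls =
      for {url, _response, alt_keys} <- :ets.tab2list(table), alt_key in alt_keys, do: url

    Enum.each(urls, &:ets.delete(table, &1))
    length(urls)
  end
end

table = AltKeyStoreSketch.new()
:ok = AltKeyStoreSketch.put(table, "http://example.com/logo.png", "<png>", [:images])
:ok = AltKeyStoreSketch.put(table, "http://example.com/photo.jpg", "<jpg>", [:images])
:ok = AltKeyStoreSketch.put(table, "http://example.com/", "<html>")

AltKeyStoreSketch.invalidate_alt_key(table, :images)
# => 2
AltKeyStoreSketch.get(table, "http://example.com/")
# => {:ok, "<html>"}
```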

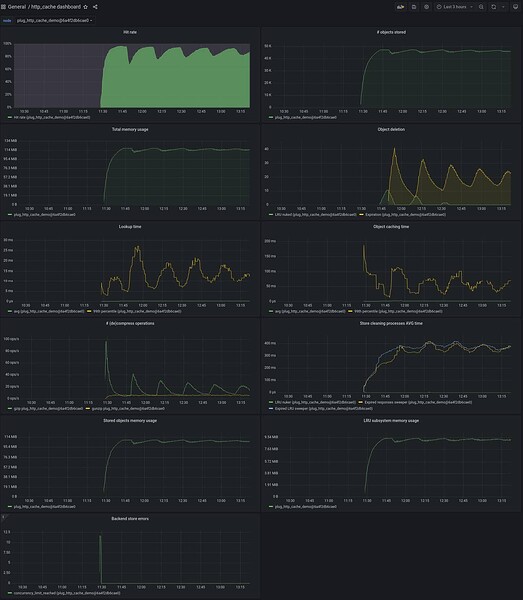

All these libraries emit telemetry events to build nice dashboards (and better diagnose production problem, should these be deployed in prod one day):

screenshot taken from the

plug_http_cache_demo app

This has not been used in production, so use it at your own risk. Feedback is welcome! I’ve opened issues for each of these libraries, so feel free to contribute. It can be particularly interesting if you come from Elixir and want to write some Erlang. There are some very interesting problems to deal with, including:


- implement a disk backend for http_cache_store_native
- benchmark ETS options for http_cache_store_native
- implement request coalescing for http_cache
- …and more beginner-friendly ones as well

Cheers