Hi, @Asd!

(@hst337 ?  )

)

First of all, thank you very much for reading the code and describing several problems you found! Writing a project without a code review was quite tricky, and I no longer saw the issues you wrote about.

Why did you name the option pool_id instead of more common name for name registration?

Initially, I used only atom() as the first parameter since I did not see the need to complicate it. I hadn’t thought about using Registry, and it turns out that in my years of working on Elixir, I’ve never had to use anything other than atoms. In general, when a pool was intended to have a unique atom as its identifier, pool_id was an appropriate name. Most likely, I made a mistake by not changing the name of this option when supporting the GenServer.name() type. But I don’t see any great criticality in this. I will make an alias :name, deprecate the old key :pool_id, and then slowly migrate it.

poolboy uses high priority for the pool owner genserver process. Why didn’t you implement the same here?

Unfortunately, I didn’t understand what we were talking about. Can you please describe it in more detail?

Why do you use Agent for monitor references storage. It seems that it is redundant and just decreases the performance of the whole pool (I am preparing a PR with change to plain map).

You’re right! Thank you! It seems to me that there was a similar reason in one of the previous implementations because not in all cases, when working with monitoring, I had a State available. It looks like there are no more reasons, but I forgot to check this and remove the public storage for monitoring.

I’ll be waiting for your PR

There’s a very bad bug with monitor_caller function which spawns a monitoring process for every run call what goes against the idea of having a pool of processes in the first places (since you end up spawning process for every call anyways).

These new processes are very lightweight and not linked to the caller process. In any case, we need to monitor the caller, and I don’t yet understand why a process that waits to see if the caller will crash is not suitable for this task.

Overall, following checkout/checkin pattern would solve the problem.

It seems that this is excessive control over the execution logic on the call side. When using a pool, you should just perform some operation on it instead of juggling worker processes. What do you think?

Plus, these processes will live forever while the caller is alive.

I’m afraid that’s not right. The monitoring process is always killed after the worker is released.

Pay attention to this line: poolex/lib/poolex.ex at develop · general-CbIC/poolex · GitHub

Please tell me where I’m wrong if I don’t see something.

Poolex is not handling worker start errors and whole pool will die with MatchError if some extra worker fails to start. It makes this pool inapplicable for usage in environments where workers connect to external services (like databases or HTTP services which are most pooling use-cases) which can be unavailable or return 422 for example.

This is an excellent observation. Thank you very much! I focused on carefully handling errors from an already running worker and forgot about controlling their launch.

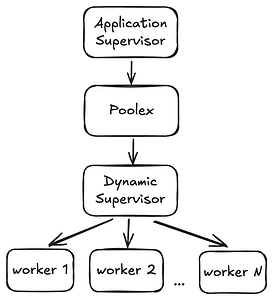

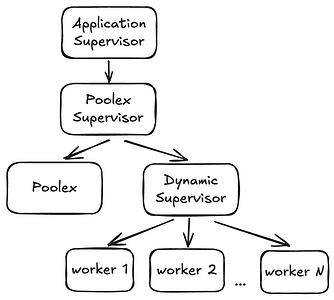

Starting and stopping supervisor manually is a strange approach. I’d suggest to start one_for_all supervisor which has Poolex and Dynamic as it’s children instead of starting a Dynamic supervisor as a direct link to Poolex.

I didn’t understand

Why is this behavior strange, and how is it conceptually different from general supervisory behavior?

![]()