Hello everyone, I’ve been studying Nx for a while now and have been writing an article about it.

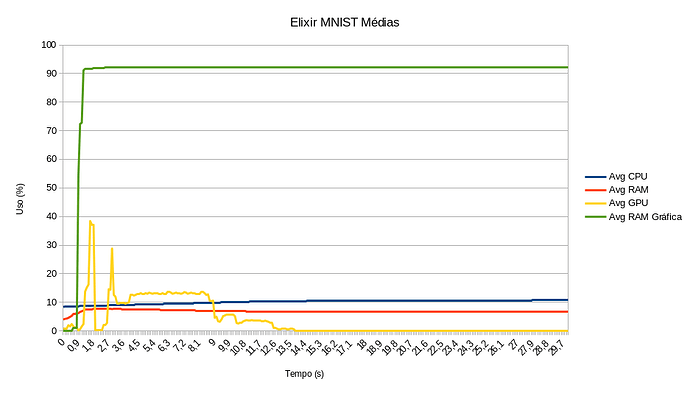

When analyzing its performance, I noticed that EXLA does not seem to deallocate the graphics card’s RAM (I’m not sure if that is EXLA’s responsibility). I’d like to share this on the forum to check whether I’m doing something wrong or whether it is a known issue.

I noticed this behavior while collecting performance metrics with UnixStats, a project I wrote that measures CPU, RAM, GPU, and video RAM usage. The video RAM was never deallocated after my process finished training a simple NN (Neural Network) with Axon to solve MNIST.

Here is the plot of these measurements, taken while training MNIST in Elixir with Axon, Nx, and EXLA:

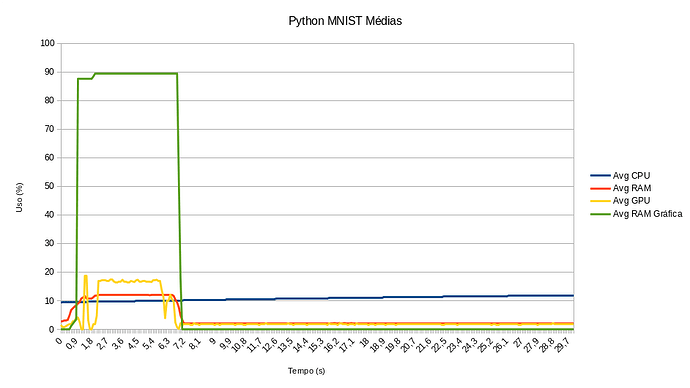

Also, I’ve done the same measurement solving MNIST in Python with NumPy, and the deallocation issue was not present in this scenario, as can be seen in this plot:

For now I’m not providing all of my setup details, just to keep this post from getting too long. If you have any questions about the setup or environment my machine uses to run these tests, ask in the thread and I’ll answer them.


XLA (which EXLA binds to) preallocates 90% of system VRAM for performance reasons. So what you’re seeing is the EXLA CUDA client process holding on to that memory for as long as it is alive. You can disable preallocation by adding preallocate: false to your CUDA client settings.

You can also adjust the preallocation percentage, for example with memory_fraction: 0.5 to use only 50% of the available VRAM.
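As a rough sketch of where those two options go, assuming a standard Mix project with a config/config.exs file and a client named :cuda (the 0.5 value is only illustrative):

```elixir
# config/config.exs (sketch; the values are illustrative, not recommendations)
import Config

config :exla, :clients,
  # Let the CUDA client preallocate at most 50% of the available VRAM...
  cuda: [platform: :cuda, memory_fraction: 0.5]
  # ...or turn preallocation off entirely instead:
  # cuda: [platform: :cuda, preallocate: false]
```

Either way, the memory the client does take is still held for as long as the client process is alive.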


I just tested with preallocate: false in my EXLA config, following the EXLA documentation, and it worked fine!

I’ve configured my EXLA like this:

    config :exla, :clients,
      host: [platform: :host],
      cuda: [platform: :cuda, preallocate: false],
      rocm: [platform: :rocm],
      tpu: [platform: :tpu]

But I also have another question… Is it possible to deallocate this memory manually in Elixir, or to configure EXLA to do it automatically?

I ask because the memory is still only deallocated when the BEAM process ends. When comparing Elixir’s performance against Python’s graphically while training a neural network, it would be nice to see video RAM allocated only while it is in use, but I’m not sure if that is actually possible.

This behavior is easier to get in Python, since the neural network I’m training there runs as a script: the process naturally ends and CUDA deallocates the video RAM.

@seanmor5 do you know if this is currently possible in EXLA? Also, sorry for giving you work with these questions. I’m only asking because this would be really nice for the article I’m writing.

Unfortunately there is no direct way to guarantee that XLA lets go of the memory allocated to it. You can transfer buffers from the GPU which will “deallocate” the memory in use (as in memory XLA knows it has available to it) but there is no way to guarantee XLA gets rid of memory it takes ownership of until the XLA process dies.
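A minimal sketch of what transferring a buffer off the GPU could look like, assuming EXLA is installed and a client named :cuda is configured (the tensors here are placeholders, not taken from the training code):

```elixir
# Allocate two placeholder tensors on the GPU through the EXLA backend.
on_gpu = Nx.iota({1024, 1024}, backend: {EXLA.Backend, client: :cuda})
scratch = Nx.iota({1024, 1024}, backend: {EXLA.Backend, client: :cuda})

# Move the data into BEAM memory. backend_transfer copies the tensor to the
# target backend and deallocates the original device buffer.
on_host = Nx.backend_transfer(on_gpu, Nx.BinaryBackend)

# For a tensor that is no longer needed at all, drop its buffer directly.
Nx.backend_deallocate(scratch)
```

This only hands the buffers back to XLA's own pool. With preallocation enabled, tools such as nvidia-smi would still show the memory as held by the BEAM process.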

Preallocation is really the more performant option. Is there a specific reason you want to deallocate manually?


BTW you can probably observe the same with JAX/TensorFlow, as our client and defaults are the same as theirs. The difference is that our client persists for as long as the BEAM is alive, unless you explicitly kill the client process.

The main reason behind freeing video RAM would be to share the memory with other processes when necessary.

I’m not sure if this is actually an issue for the community, but it is a kind of temporal coupling: allocating memory to train a network and then never deallocating it can harm the rest of the system. That said, I now understand this is the default behavior from CUDA.

An example of how this can affect a system: I had a BEAM process running that had allocated 90% of my video RAM (with preallocate: true), and this caused other memory-hungry processes on my machine to fail with the CUDA_OUT_OF_MEMORY error.

But that was just to explain my questions. You’ve already helped me a lot in understanding this behavior, and now I can explain it properly in my article instead of describing it as some kind of “RAM deallocation bug”.

Thanks a lot for your help, @seanmor5!